In recent years, artificial intelligence (AI) has undergone major transformations, especially in the domain of large language models (LLMs). These models, such as OpenAI's GPT series, Google's Gemini, and Meta's LLaMA, have shown remarkable capabilities, including generating text that closely resembles human writing. This makes them useful for a wide range of tasks, from writing content and translating languages to solving complex problems and improving business processes.

LLMs can handle tasks that used to require manual work, which allows for faster results and opens up new opportunities for AI-powered solutions. With the growing demand for custom AI solutions, several tools have already been developed to help developers build AI applications more easily. Two of the most popular are LangChain and AutoGen. In this blog post, we'll take a closer look at these two frameworks to understand what they offer and how they differ.

# What is LangChain?

LangChain is a framework designed to simplify the development of applications based on large language models (LLMs) by letting developers build modular, extensible pipelines. It allows developers to combine components such as prompts, memory, and external APIs into a cohesive application. This makes it well suited to building sophisticated conversational agents, data retrieval systems, and other LLM-based applications.
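To make the idea of a modular pipeline concrete, here is a minimal plain-Python sketch. It is an analogy, not LangChain's API: the hypothetical `Step` class lets independent components be composed with the `|` operator, loosely mirroring how LangChain pipes a prompt into a model and then into an output parser. The `fake_model` step is an assumed stand-in for a real LLM call.

```python
from typing import Any, Callable


class Step:
    """A hypothetical pipeline component that wraps a single function."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Composing two steps yields a new step that runs self, then other
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Component 1: a prompt template that turns user input into a prompt string
prompt = Step(lambda inputs: f"Explain briefly: {inputs['topic']}")

# Component 2: a stand-in for an LLM call (no network access; it just echoes)
fake_model = Step(lambda text: f"[model answer to '{text}']")

# Component 3: an output parser that post-processes the raw model output
parser = Step(lambda text: text.strip("[]"))

# Components can be swapped or reordered without touching the others
pipeline = prompt | fake_model | parser
print(pipeline.invoke({"topic": "vector stores"}))
```

Because each step only agrees on its input and output, replacing `fake_model` with a real model client would leave the prompt and parser untouched, which is the main benefit of the modular design.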

# Components of LangChain

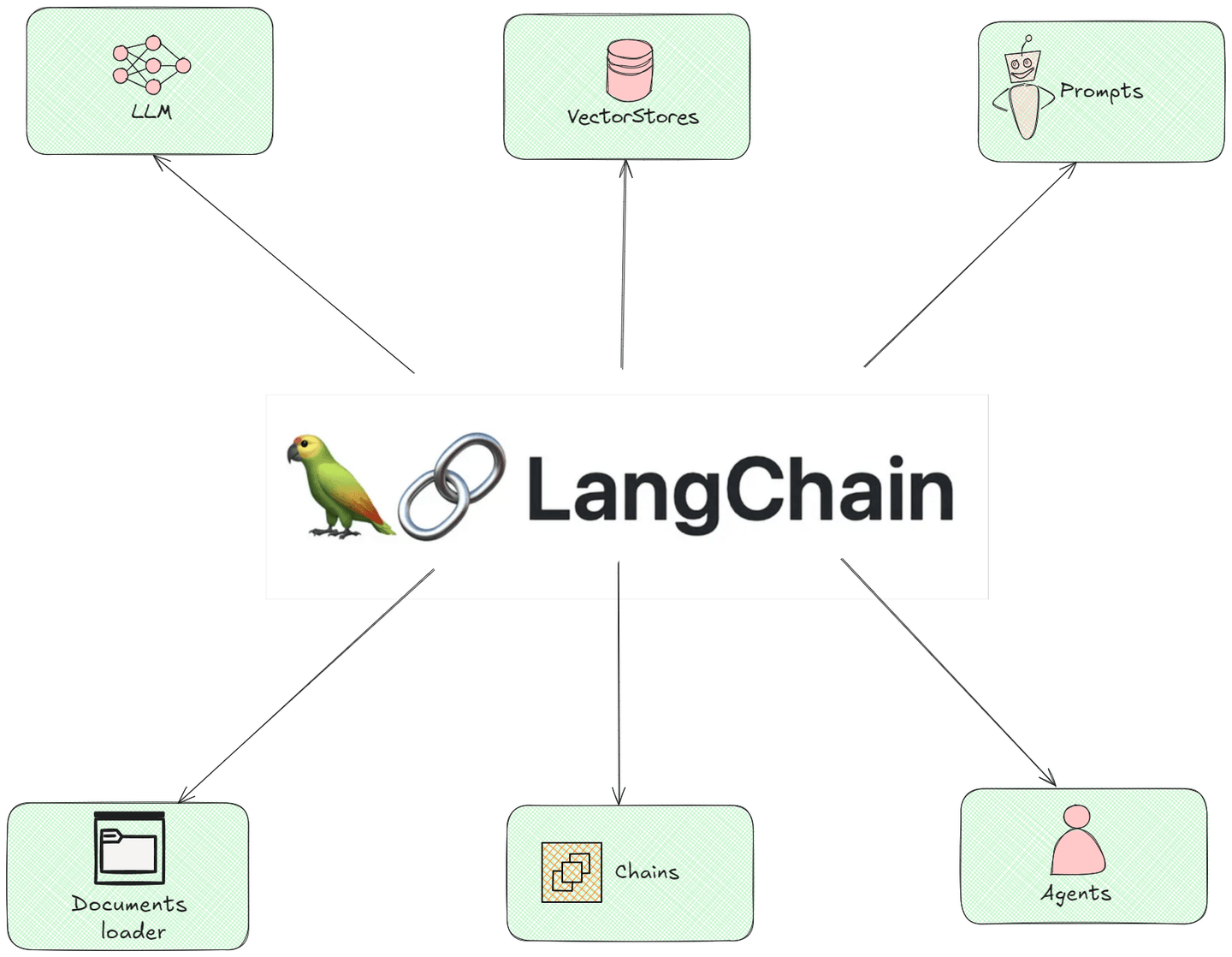

The illustration above shows the modular components of LangChain and how they interact with each other:

- Document Loaders: These components act as helpers that gather information from different sources, such as web pages or files you already have. Imagine them collecting all the ingredients you need to make a dish before you start cooking.

- LLMs (Language Models): Think of this component as smart robots that can write and respond to questions. They have been trained on huge amounts of text, so they can give good answers based on what they have learned.

- Chains: The chains component is like a series of steps you follow to get something done. For example, if you're baking a cake, you follow steps like mixing ingredients, pouring batter, and then baking. Chains guide how information moves through different steps to get to the final answer.

- Prompts: These are the questions or requests you give to the language model. It's like asking a friend for help: the clearer your question, the better the answer you'll get!

- Agents: Agents are like a personal assistant who can do tasks for you. They use the language model to decide which actions to take based on what you tell them, such as making reservations or sending reminders, and then carry those actions out on their own.

- Vector Stores: Vector stores are special databases that store information as embeddings (numeric vectors), so that related content is easy to find when you need it. Think of them as organized folders on your computer where you can quickly find a document without digging through a messy desktop.
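The sketch below shows, in plain Python, how several of these components can fit together in a retrieval flow: documents are loaded, embedded, stored, searched by similarity, and inserted into a prompt. It is a toy built on stated assumptions: `load_documents` is a hypothetical stand-in for a document loader, `embed` is a bag-of-words substitute for a real embedding model, `ToyVectorStore` is our own minimal class rather than a LangChain one, and the prompt is only built, not sent to an LLM.

```python
import math
import re
from collections import Counter


def load_documents() -> list[str]:
    # Stand-in for a document loader: real loaders read files, web pages, etc.
    return [
        "LangChain composes prompts, models, and tools into chains.",
        "Vector stores index embeddings for fast similarity search.",
        "AutoGen coordinates conversations between multiple agents.",
    ]


def embed(text: str) -> Counter:
    # Hypothetical embedding: a bag-of-words term-count vector
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Minimal in-memory store that keeps (embedding, text) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add_texts(self, texts: list[str]) -> None:
        for text in texts:
            self.items.append((embed(text), text))

    def similarity_search(self, query: str, k: int = 1) -> list[str]:
        # Rank stored texts by similarity to the query and return the top k
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


store = ToyVectorStore()
store.add_texts(load_documents())

question = "How do vector stores support similarity search?"
context = store.similarity_search(question, k=1)

# Prompt template: combine the retrieved context with the user's question
prompt = f"Answer using this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```

A production setup would swap the bag-of-words vectors for a learned embedding model and the list scan for an indexed vector database, but the load, embed, store, retrieve, and prompt steps stay the same.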

# Coding Example: Creating a LangChain Agent

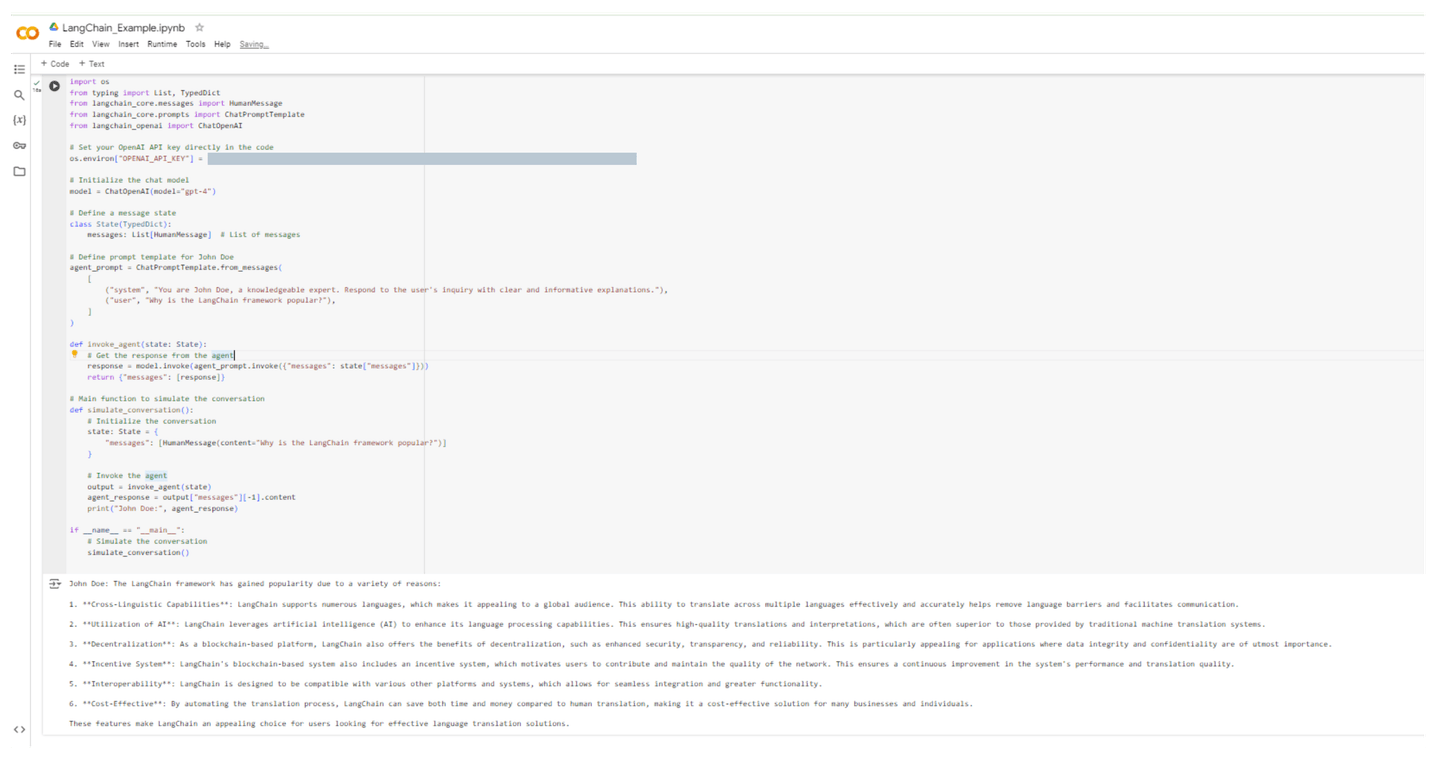

In the following example, we create a LangChain agent named John Doe, who will explain "Why is the LangChain framework popular?" The code is shown below:

```python
import os
from typing import List, TypedDict

from langchain_core.messages import BaseMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI

# Set your OpenAI API key directly in the code
# (in real projects, prefer loading it from the environment or a secrets manager)
os.environ["OPENAI_API_KEY"] = "[OPENAI API KEY]"

# Initialize the chat model
model = ChatOpenAI(model="gpt-4")

# Define a message state that holds the conversation history
class State(TypedDict):
    messages: List[BaseMessage]  # List of messages

# Define the prompt template for John Doe; the placeholder injects
# the conversation messages from the state after the system message
agent_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", "You are John Doe, a knowledgeable expert. Respond to the user's inquiry with clear and informative explanations."),
        MessagesPlaceholder(variable_name="messages"),
    ]
)

def invoke_agent(state: State):
    # Fill the prompt with the conversation so far and get the agent's response
    response = model.invoke(agent_prompt.invoke({"messages": state["messages"]}))
    return {"messages": [response]}

# Main function to simulate the conversation
def simulate_conversation():
    # Initialize the conversation with the user's question
    state: State = {
        "messages": [HumanMessage(content="Why is the LangChain framework popular?")]
    }

    # Invoke the agent
    output = invoke_agent(state)
    agent_response = output["messages"][-1].content
    print("John Doe:", agent_response)

if __name__ == "__main__":
    # Simulate the conversation
    simulate_conversation()
```

After executing this code, John Doe gives the following answer:

John Doe: The LangChain framework has gained popularity due to a variety of reasons:

1. **Cross-Linguistic Capabilities**: LangChain supports numerous languages, which makes it appealing to a global audience. This ability to translate across multiple languages effectively and accurately helps remove language barriers and facilitates communication.

2. **Utilization of AI**: LangChain leverages artificial intelligence (AI) to enhance its language processing capabilities. This ensures high-quality translations and interpretations, which are often superior to those provided by traditional machine translation systems.

3. **Decentralization**: As a blockchain-based platform, LangChain also offers the benefits of decentralization, such as enhanced security, transparency, and reliability. This is particularly appealing for applications where data integrity and confidentiality are of utmost importance.

4. **Incentive System**: LangChain's blockchain-based system also includes an incentive system, which motivates users to contribute and maintain the quality of the network. This ensures a continuous improvement in the system's performance and translation quality.

5. **Interoperability**: LangChain is designed to be compatible with various other platforms and systems, which allows for seamless integration and greater functionality.

6. **Cost-Effective**: By automating the translation process, LangChain can save both time and money compared to human translation, making it a cost-effective solution for many businesses and individuals.

These features make LangChain an appealing choice for users looking for effective language translation solutions.

The following screenshot illustrates the execution of this code in a Colab environment:

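Note that this answer is inaccurate: LangChain is an open-source framework for building LLM applications, not a blockchain-based translation platform. Because the model answers purely from its training data here, it can confuse a name with unrelated projects; grounding responses in retrieved documents is one way to reduce such errors. Now that we have a clear understanding of LangChain, let's move on to our next framework.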

# What is AutoGen?

AutoGen is an open-source framework, originally developed by Microsoft, for building applications in which multiple LLM-powered agents converse with each other, and with humans when needed, to complete tasks. A major focus is automating code generation and workflow management: agents can generate functional code from natural-language instructions, execute it, and iterate on the results, which speeds up development and automates repetitive tasks. This makes AutoGen well suited to rapid prototyping of applications and workflows.
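AutoGen organizes this automation as a conversation between agents. As a rough plain-Python analogy (not AutoGen's API), the sketch below has two hypothetical agents with scripted reply functions take turns adding messages to a shared history until one of them says `TERMINATE`, a stop keyword that appears in many AutoGen examples. The reply functions are assumed stand-ins for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyAgent:
    """A hypothetical agent: a name plus a function mapping history to a reply."""
    name: str
    reply_fn: Callable[[list[dict]], str]


def run_chat(sender: ToyAgent, recipient: ToyAgent, message: str, max_turns: int = 6) -> list[dict]:
    # Shared conversation history as a list of name/content messages
    history = [{"name": sender.name, "content": message}]
    speakers = [recipient, sender]  # the agents alternate after the opening message
    for turn in range(max_turns):
        agent = speakers[turn % 2]
        reply = agent.reply_fn(history)
        history.append({"name": agent.name, "content": reply})
        if "TERMINATE" in reply:  # termination condition ends the chat early
            break
    return history


def assistant_reply(history: list[dict]) -> str:
    # Stand-in for an LLM: answer the opening request, then signal completion
    if len(history) == 1:
        return "Here is a draft plan: 1) load the data 2) summarize it."
    return "Glad it helps. TERMINATE"


def user_proxy_reply(history: list[dict]) -> str:
    return "Looks good, thanks."


assistant = ToyAgent("assistant", assistant_reply)
user_proxy = ToyAgent("user_proxy", user_proxy_reply)

for msg in run_chat(user_proxy, assistant, "Plan a data summary task."):
    print(f"{msg['name']}: {msg['content']}")
```

The `max_turns` cap matters as much as the stop keyword: without it, two agents that never emit the keyword would keep replying to each other indefinitely.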

# Components of AutoGen

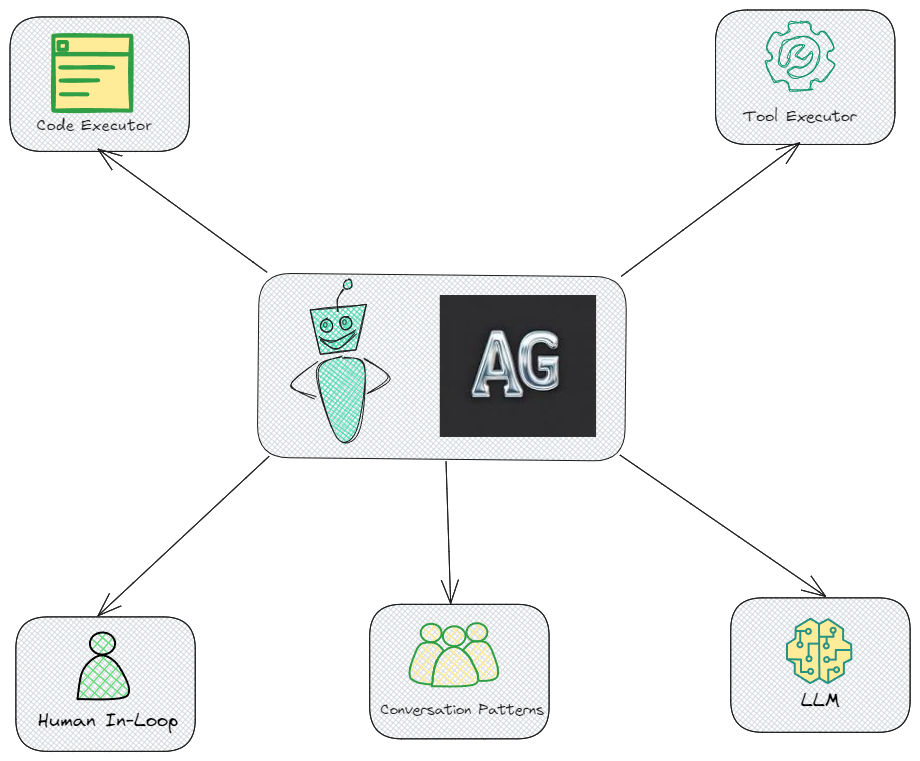

The illustration above shows the key components of AutoGen and how they interact with each other:

- Code Executor - This component lets AutoGen run code automatically. It is a core capability that allows agents to execute the code they write in pursuit of the goals they have been given.

- Tool Executor - This component lets agents interact with external tools or APIs, for example by calling functions that the LLM has decided to use.

- Human-in-the-Loop - This component handles cases where human intervention is required for decision-making or validation, letting human operators guide or control the agents when needed.

- Conversation Patterns - AutoGen provides predefined conversation structures, such as two-agent chats, sequential chats, and group chats, to manage agent interactions. These patterns keep the dialogue among agents coherent and goal-directed.

- LLM (Large Language Model) - LLMs are a key part of AutoGen's architecture, providing the underlying language capabilities that drive agent understanding and decision-making.
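To illustrate how a code executor and a human-in-the-loop check can work together, here is a plain-Python sketch. It is not AutoGen's implementation: it extracts Python code blocks from an agent's message, asks a hypothetical `approve` callback (standing in for a human reviewer) before running anything, and executes approved code in a separate process. AutoGen can also run code inside Docker containers for isolation; running untrusted model output directly on your machine, as this sketch does, is risky.

```python
import re
import subprocess
import sys
from typing import Callable

FENCE = "`" * 3  # built at runtime so this example's own code fence stays intact
CODE_BLOCK = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def extract_code(message: str) -> list[str]:
    # Pull every Python code block out of an agent's message
    return CODE_BLOCK.findall(message)


def execute(code: str, timeout: float = 10.0) -> tuple[int, str]:
    # Run the code in a separate Python process and capture its output
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return result.returncode, result.stdout + result.stderr


def handle_message(message: str, approve: Callable[[str], bool]) -> list[str]:
    outputs = []
    for code in extract_code(message):
        if not approve(code):  # human-in-the-loop gate before execution
            outputs.append("skipped: not approved")
            continue
        exit_code, output = execute(code)
        outputs.append(f"exit {exit_code}: {output.strip()}")
    return outputs


agent_message = f"Let me compute that.\n{FENCE}python\nprint(sum(range(10)))\n{FENCE}"
print(handle_message(agent_message, approve=lambda code: True))
```

Feeding the execution results back to the agent as its next message is what lets it fix errors and iterate; in this sketch, `approve` could prompt a person with `input()` instead of always returning `True`.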

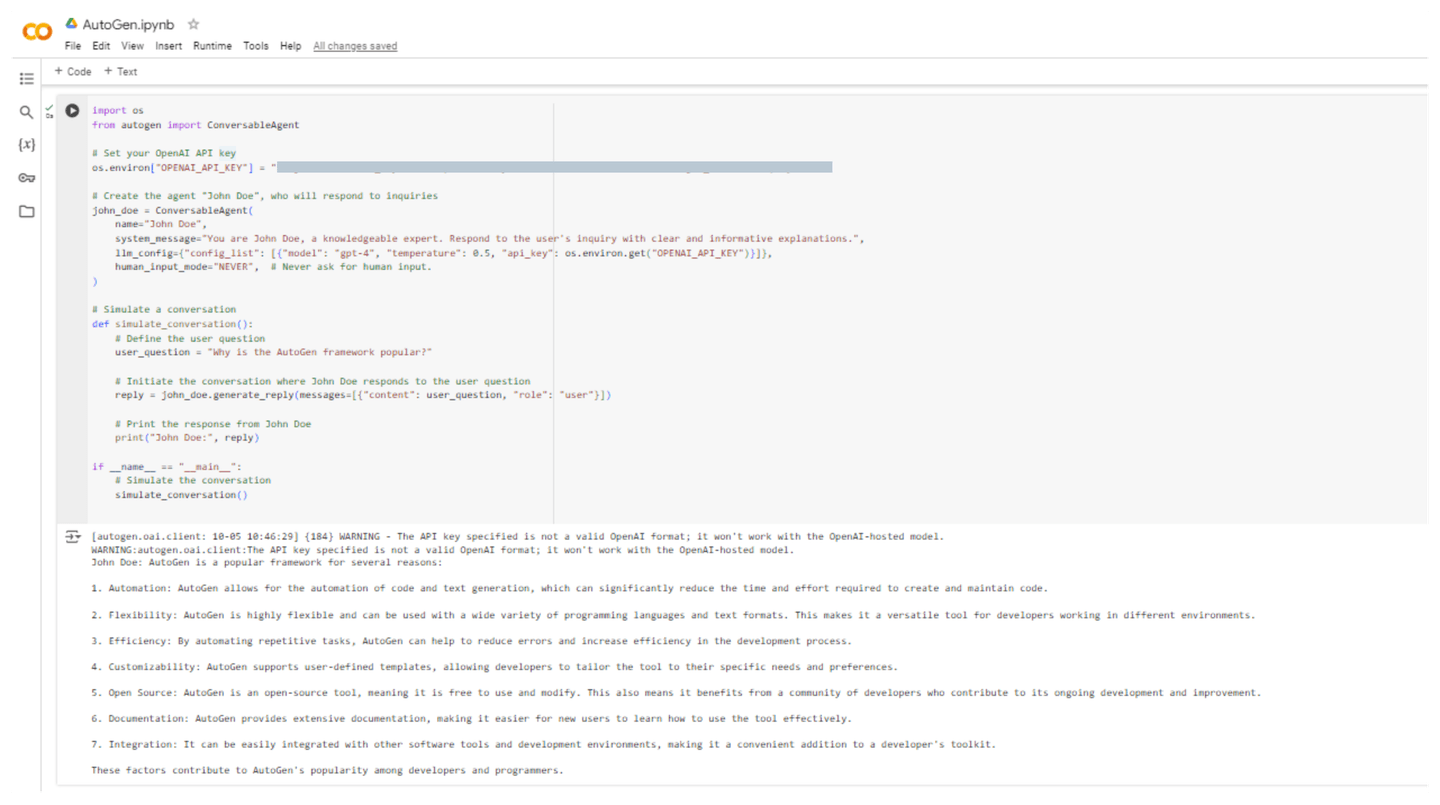

# Coding Example: Creating an AutoGen Agent

Now let’s create the same John Doe agent in AutoGen, who will explain “Why is the AutoGen framework popular?” The code is shown as:

```python
import os

# Written against the AutoGen 0.2 ConversableAgent API
from autogen import ConversableAgent

# Set your OpenAI API key
os.environ["OPENAI_API_KEY"] = "[OPENAI API KEY]"

# Create the agent "John Doe", who will respond to inquiries
john_doe = ConversableAgent(
    name="John Doe",
    system_message="You are John Doe, a knowledgeable expert. Respond to the user's inquiry with clear and informative explanations.",
    llm_config={
        "config_list": [
            {"model": "gpt-4", "temperature": 0.5, "api_key": os.environ.get("OPENAI_API_KEY")}
        ]
    },
    human_input_mode="NEVER",  # Never ask for human input.
)

# Simulate a conversation
def simulate_conversation():
    # Define the user question
    user_question = "Why is the AutoGen framework popular?"

    # Generate John Doe's reply to the user question
    reply = john_doe.generate_reply(messages=[{"content": user_question, "role": "user"}])

    # Print the response from John Doe
    print("John Doe:", reply)

if __name__ == "__main__":
    # Simulate the conversation
    simulate_conversation()
```

After executing this code, John Doe responds with the following explanation:

John Doe: AutoGen is a popular framework for several reasons:

1. Automation: AutoGen allows for the automation of code and text generation, which can significantly reduce the time and effort required to create and maintain code.

2. Flexibility: AutoGen is highly flexible and can be used with a wide variety of programming languages and text formats. This makes it a versatile tool for developers working in different environments.

3. Efficiency: By automating repetitive tasks, AutoGen can help to reduce errors and increase efficiency in the development process.

4. Customizability: AutoGen supports user-defined templates, allowing developers to tailor the tool to their specific needs and preferences.

5. Open Source: AutoGen is an open-source tool, meaning it is free to use and modify. This also means it benefits from a community of developers who contribute to its ongoing development and improvement.

6. Documentation: AutoGen provides extensive documentation, making it easier for new users to learn how to use the tool effectively.

7. Integration: It can be easily integrated with other software tools and development environments, making it a convenient addition to a developer's toolkit.

These factors contribute to AutoGen's popularity among developers and programmers.

The following screenshot illustrates the execution of this code in a Colab environment:

# LangChain vs. AutoGen: A Comparative Overview

Here's a comparison table outlining key differences and similarities between LangChain and AutoGen. This table will help you understand the unique features, strengths, and intended use cases for each framework.

| Feature / Aspect | LangChain | AutoGen |
|---|---|---|
| Purpose | Framework for developing applications using language models, focusing on chaining operations and data flow. | Framework for enabling complex workflows using multiple agents that can communicate and collaborate. |
| Agent Model | Supports building custom agents and workflows. | Uses conversational agents that can send and receive messages, simplifying multi-agent interactions. |
| Community | Established community with extensive documentation and examples. | Growing community with active development and research focus. |
| Complex Workflows | Can build complex workflows but primarily through chaining operations. | Designed for complex workflows with multiple agents, facilitating interaction between them. |
| Ease of Use | Straightforward for those familiar with LLMs, but might have a learning curve for beginners. | User-friendly for building multi-agent systems, focusing on collaboration and communication. |
| Use Cases | Ideal for applications in data processing, content generation, and conversational agents. | Suitable for applications requiring multi-agent systems, such as debate, customer support, or collaborative problem-solving. |
| Integration | Integrates well with various LLMs, tools, and external services. | Specifically designed for integration of agents and their components, including human input. |
| Human-in-the-loop | Limited support for human involvement; focuses more on automation. | Supports human-in-the-loop scenarios, allowing agents to seek human input when necessary. |
| API Usage | Requires an API key for LLMs like OpenAI, but less focus on interactions between agents. | Requires an API key for LLMs, specifically designed for agent interactions and workflows. |
| Supported Models | Works with a variety of models, including GPT-3, GPT-4, and others. | Primarily integrates with OpenAI models but can be extended to support other models as well. |

This table provides a quick overview of the strengths and considerations for both LangChain and AutoGen, helping you decide which tool best suits your project's needs.

# Conclusion

LangChain and AutoGen are robust frameworks that serve different purposes within the field of artificial intelligence. LangChain specializes in building structured applications on top of language models using modular components and workflow chaining, which makes it well suited to tasks such as data processing and content generation. AutoGen, on the other hand, stands out at building interactive multi-agent systems in which agents communicate and collaborate to address difficult tasks, and it makes it easy to bring humans into the problem-solving loop.

Combining MyScale with either framework extends its functionality by providing efficient storage and retrieval of vector embeddings. This integration enables quick retrieval of relevant information, improving the contextual relevance and accuracy of responses in applications. Moreover, MyScale's MSTG algorithm improves search and retrieval performance on large datasets. Together, these tools allow developers to build robust and efficient AI-powered platforms.