Diffusion models are transforming image generation by enabling the creation of diverse, high-quality visual content. By learning to reverse a gradual process of adding noise, these models can generate images that range from photorealistic to highly artistic. Once creators understand how diffusion works, they can use it to explore a wide range of new and inventive visuals. In this blog, we will look at how diffusion models work, where they are applied, and how to use them in practice for image generation.

# Understanding Diffusion Models

## What Are Diffusion Models?

Basic Concept

Diffusion models generate content by gradually transforming random noise into a coherent, detailed image. During training, Gaussian noise is added to an image step by step, and a neural network learns to remove it again; this learned denoising is called the reverse diffusion process.
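
For readers who want the underlying math, here is the standard DDPM formulation (Ho et al., 2020), shown as one common way to write these processes rather than the only one. The forward process adds Gaussian noise with a small variance $\beta_t$ at each step $t$:

$$
q(\mathbf{x}_t \mid \mathbf{x}_{t-1}) = \mathcal{N}\!\left(\mathbf{x}_t;\ \sqrt{1-\beta_t}\,\mathbf{x}_{t-1},\ \beta_t\mathbf{I}\right), \qquad t = 1, \dots, T
$$

Writing $\alpha_t = 1 - \beta_t$ and $\bar\alpha_t = \prod_{s=1}^{t} \alpha_s$, any step can be reached directly from the clean image $\mathbf{x}_0$:

$$
\mathbf{x}_t = \sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1-\bar\alpha_t}\,\boldsymbol{\epsilon}, \qquad \boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})
$$

The network $\boldsymbol{\epsilon}_\theta$ is then trained to predict the noise that was added, using the simplified objective

$$
\mathcal{L}_{\text{simple}} = \mathbb{E}_{t,\,\mathbf{x}_0,\,\boldsymbol{\epsilon}}\left[\left\lVert \boldsymbol{\epsilon} - \boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t) \right\rVert^2\right]
$$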

Forward and Reverse Diffusion Process

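In the forward diffusion process, noise is added to a clean image over many small steps (1,000 in the original DDPM formulation) until almost nothing of the original remains. The reverse process undoes this one step at a time: a trained network predicts the noise present at each step so that it can be removed. To generate a new image, the model starts from pure noise and runs the reverse process, producing a high-quality image with intricate detail.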
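
The two processes can be sketched in plain Python on a toy "image" of four pixel values. This is a minimal illustration, assuming the DDPM linear noise schedule (β from 1e-4 to 0.02 over 1,000 steps); the helper names are hypothetical, and the true noise stands in for what a trained network would predict, which is why the clean image is recovered almost exactly here.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, linearly spaced (DDPM's default range)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alpha_bars(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t; index 0 corresponds to t = 1."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def forward_diffuse(x0, t, alpha_bars, rng):
    """Forward process in closed form: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


def estimate_x0(xt, eps_hat, t, alpha_bars):
    """Invert the closed form, given a noise estimate eps_hat (normally from a trained network)."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_hat)]


def reverse_step(xt, eps_hat, t, betas, alpha_bars, rng):
    """One DDPM ancestral sampling step x_t -> x_{t-1}, using sigma_t^2 = beta_t."""
    beta = betas[t]
    coef = beta / math.sqrt(1.0 - alpha_bars[t])
    mean = [(x - coef * e) / math.sqrt(1.0 - beta) for x, e in zip(xt, eps_hat)]
    if t == 0:
        return mean  # the final step adds no fresh noise
    sigma = math.sqrt(beta)
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]


rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alpha_bars(betas)

x0 = [0.5, -0.25, 1.0, 0.0]  # a toy four-"pixel" image scaled to [-1, 1]
xt, eps = forward_diffuse(x0, 500, alpha_bars, rng)

# The true noise plays the role of a perfect network prediction, so x0 is recovered up to rounding.
x0_hat = estimate_x0(xt, eps, 500, alpha_bars)
x_prev = reverse_step(xt, eps, 500, betas, alpha_bars, rng)
print("noisy:", [round(v, 3) for v in xt])
print("recovered:", [round(v, 3) for v in x0_hat])
```

A real sampler starts from pure noise at the last step and calls a trained network's noise prediction inside a loop of `reverse_step` calls down to step 0.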

## How Diffusion Models Work

Adding and Removing Noise

A key aspect of diffusion models is that noise is added systematically, following a noise schedule that controls how quickly the original image is destroyed. By choosing the schedule, or the step from which denoising begins, creators can control how much of the starting content survives and therefore how distinctive the final output is.
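
To make the noise schedule concrete, the sketch below compares how much of the original image survives at several steps under the linear schedule used by DDPM and the cosine schedule proposed by Nichol and Dhariwal (2021). It is illustrative only: the function names are hypothetical, and the published cosine schedule additionally clips each β_t to at most 0.999, which this sketch omits.

```python
import math


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t for a linear beta schedule; index t - 1 holds step t."""
    step = (beta_end - beta_start) / (num_steps - 1)
    out, prod = [], 1.0
    for i in range(num_steps):
        prod *= 1.0 - (beta_start + i * step)
        out.append(prod)
    return out


def cosine_alpha_bars(num_steps, s=0.008):
    """alpha_bar_t = f(t) / f(0) with f(t) = cos^2(((t / T) + s) / (1 + s) * pi / 2)."""
    def f(t):
        return math.cos(((t / num_steps) + s) / (1.0 + s) * math.pi / 2) ** 2
    return [f(t) / f(0) for t in range(1, num_steps + 1)]


T = 1000
linear, cosine = linear_alpha_bars(T), cosine_alpha_bars(T)
for t in (250, 500, 750):
    # sqrt(alpha_bar_t) weights the original image at step t; sqrt(1 - alpha_bar_t) weights the noise.
    print(f"t={t}: signal weight linear={math.sqrt(linear[t - 1]):.3f}, "
          f"cosine={math.sqrt(cosine[t - 1]):.3f}")
```

The cosine schedule keeps noticeably more of the signal in the middle steps, so the image is destroyed more evenly across the whole process instead of mostly in the early steps.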

Role of the Encoder

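In latent diffusion models such as Stable Diffusion, an encoder (part of a variational autoencoder) compresses full-size images into lower-dimensional latent representations, and the diffusion process runs in that latent space; a decoder then maps the result back to a full-size image. Working in the compressed space greatly reduces computation while preserving most of the visible detail. Pixel-space diffusion models, such as the original DDPM, do not use an encoder.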
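
A worked example shows how much the encoder shrinks the data. The sketch assumes Stable Diffusion v1-style settings (8x spatial downsampling to 4 latent channels); other latent diffusion models use different values, and `latent_shape` is an illustrative helper rather than a library API.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """(channels, height, width) of the latent, assuming SD v1-style 8x downsampling."""
    if height % downsample or width % downsample:
        raise ValueError("image size must be divisible by the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(height, width, channels=3, **kwargs):
    """How many input values (RGB pixels) map onto each latent value."""
    c, h, w = latent_shape(height, width, **kwargs)
    return (channels * height * width) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

A 512x512 RGB image holds 786,432 values, while its latent holds 16,384, so every denoising step operates on 48 times less data.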

## Advantages of Diffusion Models

High-Quality Image Generation

Because they build an image through many small denoising steps, diffusion models excel at generating high-quality images that can be realistic, creative, or both. This makes them valuable tools for artists and designers seeking new visual solutions.

Versatility in Applications

The versatility of diffusion models extends beyond generating still images from scratch: the same approach underpins text-to-video synthesis and image-to-image translation. This adaptability makes them useful across many creative fields.

# Applications of Diffusion Models

## Image Generation

Artistic Creations

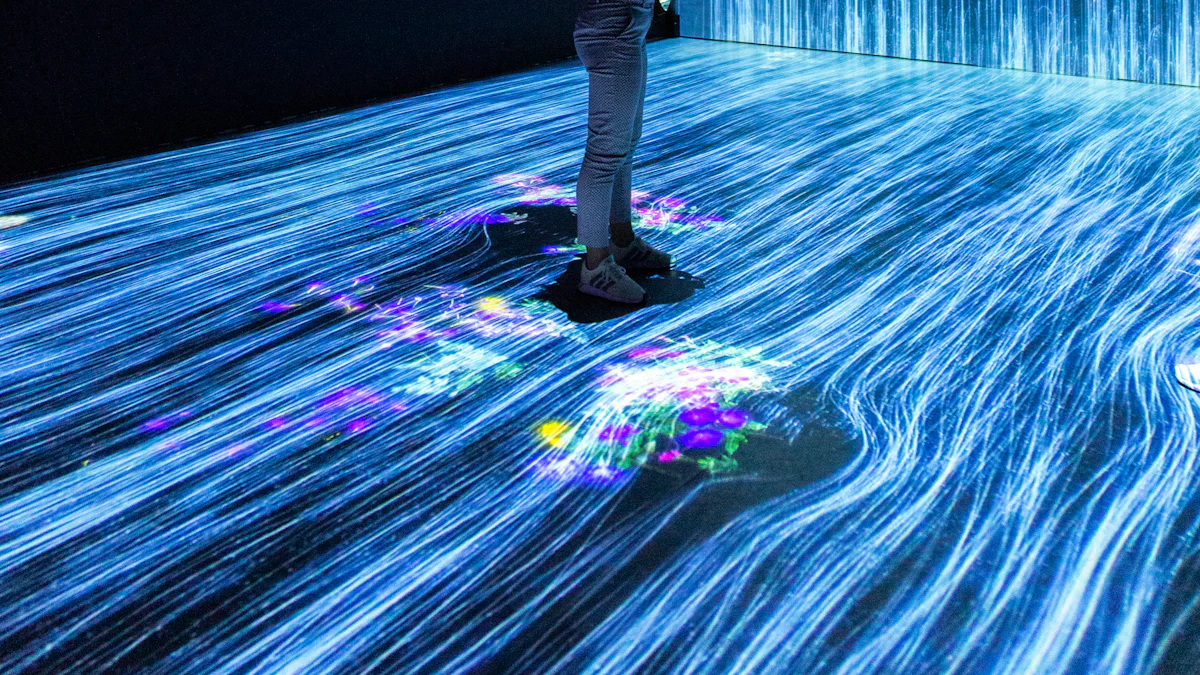

Diffusion models have changed how artworks are created, offering a new way to generate visually striking and diverse pieces. Artists and designers can use them to produce work that blends realism with imagination. Because generation proceeds through many small denoising steps that can be guided by text prompts or reference images, artists can shape intricate, expressive results that push the boundaries of traditional techniques.

Realistic Image Synthesis

In realistic image synthesis, diffusion models produce lifelike visual content that closely mirrors reality. By gradually transforming noise into a coherent image, they can generate photorealistic scenes that are often difficult to distinguish from real photographs. As a result, diffusion models have set a new standard for hyper-realistic imagery in industries from entertainment to advertising.

## Image Search

Content-Based Image Retrieval

Diffusion models have also been applied to content-based image retrieval, where users search with an image instead of text. Retrieval systems compare feature representations (embeddings) of the query image with those of the images in a large database and return the closest matches; features taken from a pretrained diffusion model's denoising network are one possible source of such representations. Because results are ranked by visual similarity rather than by keywords, users get relevant matches even when no good text description exists.
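
Whatever model produces the embeddings, the matching step itself usually comes down to a nearest-neighbor search. Below is a minimal sketch, assuming each image has already been turned into a feature vector; the file names and 4-dimensional vectors are made up for illustration, and `rank_by_similarity` is a hypothetical helper, not a library function.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, database, top_k=3):
    """Return the top_k (image_id, score) pairs, most similar first."""
    scored = [(image_id, cosine_similarity(query, emb)) for image_id, emb in database.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings; real feature vectors have hundreds or thousands of dimensions.
database = {
    "cat_01.png": [0.9, 0.1, 0.0, 0.2],
    "cat_02.png": [0.8, 0.2, 0.1, 0.3],
    "car_01.png": [0.0, 0.9, 0.8, 0.1],
}
query = [0.85, 0.15, 0.05, 0.25]
results = rank_by_similarity(query, database, top_k=2)
print(results)
```

This linear scan is fine for small collections; large systems typically swap it for an approximate nearest-neighbor index.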

Reverse Image Search

Reverse image search is a closely related application: given an input image, the system identifies it or finds similar images. Visual features learned by diffusion models can help analyze the patterns in an image so that related images can be found across platforms and sources. This matters for e-commerce, digital forensics, and creative industries that need efficient ways to locate relevant visual content.

## Other Applications

Text-to-Video Synthesis

Diffusion models have also been extended to text-to-video synthesis, generating short video clips from textual descriptions. Instead of a single image, the model produces a sequence of frames that should stay consistent over time. This lets storytellers, filmmakers, and content creators turn written ideas into animated sequences.

Image-to-Image Translation

In image-to-image translation, diffusion models transform an image from one visual domain to another, such as turning a sketch into a realistic image or changing an artwork's style. A common approach adds partial noise to the input image and then denoises it under new guidance, so the overall layout is preserved while details and style change. This gives artists, designers, and researchers a way to explore creative variations and streamline complex design processes.
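
The partial-noising idea can be sketched as follows. This is an SDEdit-style illustration under stated assumptions: a `strength` value (similar in spirit to the `strength` argument of Hugging Face Diffusers' image-to-image pipelines) picks how many of T = 1,000 noise steps to apply under a linear DDPM schedule. The helper names are hypothetical, and the denoising half, which needs a trained model, is omitted.

```python
import math
import random


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """alpha_bar for a linear DDPM schedule; index t - 1 holds step t."""
    step = (beta_end - beta_start) / (num_steps - 1)
    out, prod = [], 1.0
    for i in range(num_steps):
        prod *= 1.0 - (beta_start + i * step)
        out.append(prod)
    return out


def start_step(strength, num_steps):
    """Map strength in [0, 1] to the step where denoising begins (0 keeps the input as-is)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    return int(round(strength * num_steps))


def partial_noise(x0, strength, alpha_bars, rng):
    """Noise the input up to the chosen step using the closed-form forward process."""
    t = start_step(strength, len(alpha_bars))
    if t == 0:
        return 0, list(x0)
    a = alpha_bars[t - 1]
    return t, [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(42)
alpha_bars = linear_alpha_bars(1000)
sketch = [1.0, -1.0, 1.0, -1.0]  # toy "sketch" pixels in [-1, 1]

for strength in (0.0, 0.3, 0.8):
    t, noised = partial_noise(sketch, strength, alpha_bars, rng)
    # A trained model would now denoise `noised` from step t back to 0 under the new prompt or style.
    print(strength, t, [round(v, 2) for v in noised])
```

Low strength keeps most of the sketch's structure; high strength leaves little of it, giving the model more freedom to reinterpret the input.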

# How to Use Diffusion Models

## Getting Started

Required Tools and Software

To use diffusion models effectively, creators need suitable hardware and software. Training, and even generation, is computationally demanding, so a GPU with ample memory is strongly recommended, especially when working with large datasets. Deep learning frameworks such as TensorFlow or PyTorch provide the building blocks for implementing diffusion models. With these tools in place, creators can approach image generation with confidence and precision.
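
A back-of-the-envelope calculation shows why hardware matters. The sketch below estimates the memory needed for model weights alone, assuming a denoising network of about 860 million parameters (the figure commonly cited for the Stable Diffusion v1 UNet); the text encoder, autoencoder, activations, and (during training) gradients and optimizer state all add more on top.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


UNET_PARAMS = 860e6  # assumption: approximate size of the Stable Diffusion v1 UNet

for dtype, nbytes in (("float32", 4), ("float16", 2)):
    print(f"{dtype}: {weight_memory_gb(UNET_PARAMS, nbytes):.2f} GB")
```

Halving the precision halves the weight memory, which is one reason half-precision inference is common on consumer GPUs.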

Basic Steps

Using diffusion models for image generation starts with a few fundamental steps. First, get a clear understanding of the dataset you intend to work with, identifying the key patterns and features that will shape the model's output. Next, preprocess the data: remove corrupted or inconsistent images, resize everything to a common resolution, and scale pixel values to the range the model expects, so that nothing hinders the model's learning process. Careful preparation and planning lay a solid foundation for getting the most out of diffusion models.
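
Pixel scaling is one preprocessing step that is easy to get wrong. DDPM-style models commonly expect inputs in [-1, 1], matching the scale of the Gaussian noise, so a minimal sketch of the conversion and its inverse (for saving generated outputs) looks like this. The function names are illustrative, and resizing and cleaning are assumed to have happened beforehand.

```python
def to_model_range(pixels):
    """Scale 8-bit pixel values from [0, 255] to floats in [-1, 1]."""
    return [p / 127.5 - 1.0 for p in pixels]


def to_pixel_range(values):
    """Map model outputs back to 8-bit values, clipping anything outside [-1, 1] first."""
    return [int(round((min(max(v, -1.0), 1.0) + 1.0) * 127.5)) for v in values]


pixels = [0, 64, 128, 255]
scaled = to_model_range(pixels)
print(scaled)
print(to_pixel_range(scaled))       # [0, 64, 128, 255]
print(to_pixel_range([1.7, -3.0]))  # [255, 0]
```

Clipping on the way out matters because sampled outputs can drift slightly outside [-1, 1].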

## Practical Tips

Best Practices

Following best practices helps ensure good performance and output quality. Creators should experiment systematically and fine-tune key settings, such as the number of sampling steps and the strength of prompt guidance, to improve the diversity and realism of generated images. Updating the model with new data and adjusting parameters based on feedback refines its capabilities over time.
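
As an example of a parameter worth tuning, many text-to-image systems use classifier-free guidance, which blends the model's noise predictions made with and without the text prompt. The sketch below shows the combination rule with made-up numbers; in Hugging Face Diffusers' Stable Diffusion pipelines the weight is exposed as `guidance_scale` (default 7.5), where 1.0 means no extra guidance and larger values follow the prompt more closely at some cost to diversity.

```python
def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale):
    """Combine noise predictions: eps_uncond + w * (eps_cond - eps_uncond)."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Hypothetical noise predictions for three latent values at one denoising step.
eps_uncond = [0.10, -0.20, 0.05]  # prediction with an empty prompt
eps_cond = [0.30, -0.10, 0.00]    # prediction with the user's prompt

for w in (1.0, 7.5):
    guided = classifier_free_guidance(eps_uncond, eps_cond, w)
    print(w, [round(v, 3) for v in guided])
```

With w = 1.0 the result equals the prompt-conditioned prediction; larger w pushes further in the direction the prompt suggests.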

Common Pitfalls

While working with diffusion models, creators may run into common pitfalls. One is overfitting: the model performs well on its training data but generalizes poorly to unseen examples, and in diffusion models this can show up as near-copies of training images. To reduce this risk, creators should apply regularization and hold out a validation set to check that performance and generalization stay in balance. Managing computational resources carefully and monitoring whether training has converged also help avoid problems along the way.
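
One validation-based safeguard against overfitting is early stopping: track the loss on held-out images (for diffusion models, typically the same noise-prediction error used in training) and stop once it stops improving. A minimal, framework-agnostic sketch follows; the `EarlyStopping` class and the loss values are hypothetical.

```python
class EarlyStopping:
    """Signals a stop when validation loss fails to improve by more than min_delta for `patience` checks in a row."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_checks = 0

    def step(self, val_loss):
        """Record one validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_checks = 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience


# Hypothetical per-epoch validation losses: improving at first, then rising as the model overfits.
val_losses = [0.120, 0.095, 0.081, 0.083, 0.086, 0.090, 0.094]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}; best validation loss {stopper.best}")
# prints: stopped at epoch 5; best validation loss 0.081
```

In practice you would also save a checkpoint whenever `best` improves, so the model from the best epoch can be restored.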

AI scientists and researchers continue to see rapid progress in diffusion models, with new breakthroughs emerging regularly, and this progress keeps opening new opportunities for research, innovation, and discovery. Note that businesses also use a different kind of "diffusion model", such as the Bass diffusion model, to understand consumer preferences, product adoption trends, and market dynamics and to make better-informed investment decisions; these models of how innovations spread through a market are distinct from the generative diffusion models discussed in this blog.