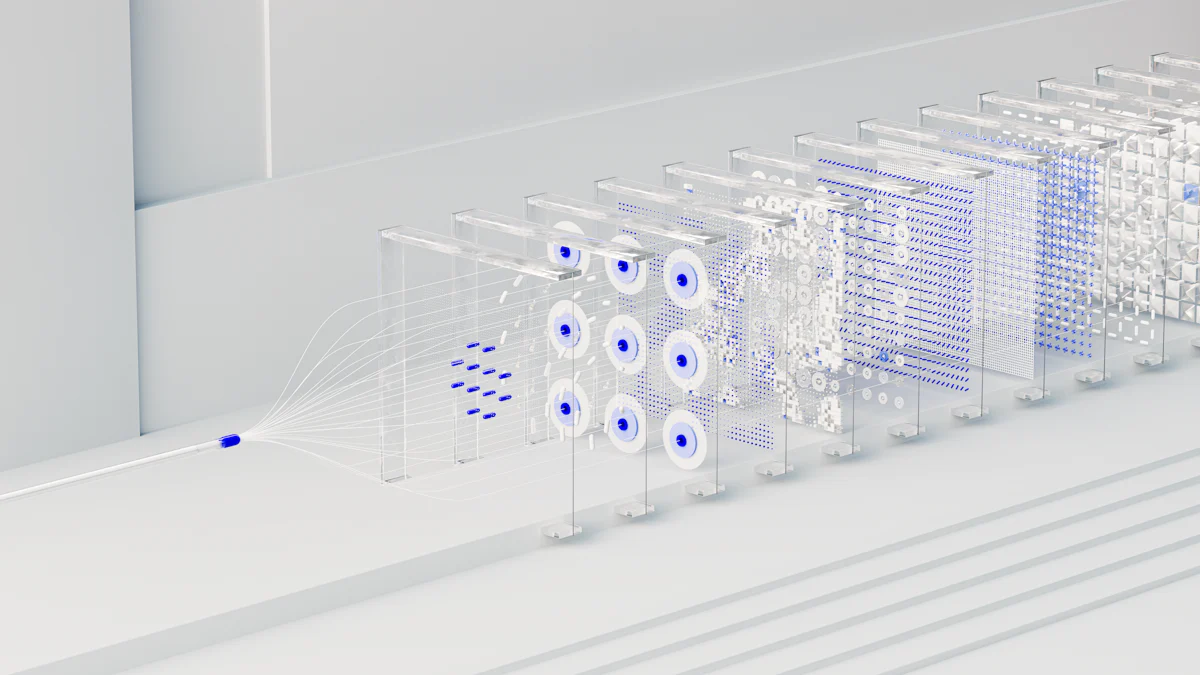

The k-nearest neighbors (KNN) algorithm is one of the foundational methods in machine learning, valued for its simplicity and effectiveness. It classifies a data point based on the labels of the points closest to it, which makes it a common starting point for beginners in the field. This post explains how KNN works, where it is used, and what its main strengths and limitations are.

# Understanding KNN Algorithm

Before applying KNN, it helps to understand its core concepts: what the algorithm does, how it measures closeness between data points, and how the number of neighbors K is chosen.

# What is KNN?

KNN is a supervised learning algorithm: it uses a labeled training set to make predictions for new data points based on how close they are to the labeled examples. The same idea works for both classification and regression, which is why KNN appears in many different applications.

# Definition

KNN's core idea is simple. To make a prediction for a new data point, the algorithm finds the K training points closest to it (its nearest neighbors). For classification, it assigns the class held by the majority of those neighbors; for regression, it predicts the average of their values.

# Basic principles

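KNN is non-parametric: it makes no assumptions about the underlying distribution of the data and only requires a distance metric that meaningfully measures how similar two instances are. It is also a "lazy" learner, meaning it simply stores the training data and defers all computation until a prediction is requested. This flexibility makes it suitable for diverse datasets and problem domains.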

# How KNN Works

In practice, KNN depends on two choices: the distance metric used to measure proximity, and the value of K, the number of neighbors consulted for each prediction.

# Distance metrics

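Choosing the right distance metric is crucial for measuring proximity accurately. Euclidean distance (the straight-line distance between two points) is the most common default, and Manhattan distance (the sum of absolute differences along each feature) is a frequent alternative. Because both are computed directly from feature values, features on larger numeric scales dominate the result unless the data is scaled first.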
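A minimal sketch of Euclidean distance, alongside Manhattan distance as a common alternative, in plain Python. The function names `euclidean_distance` and `manhattan_distance` are illustrative, not from any particular library, and both assume the two points have the same number of features.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance: square root of the summed squared differences."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of features")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan_distance(a, b):
    """City-block distance: sum of the absolute differences per feature."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of features")
    return sum(abs(x - y) for x, y in zip(a, b))


p, q = (1.0, 2.0), (4.0, 6.0)
print(euclidean_distance(p, q))  # 5.0
print(manhattan_distance(p, q))  # 7.0
```

Python 3.8 and later also provide `math.dist(p, q)`, which computes the same Euclidean distance.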

# Choosing the value of K

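Selecting K involves balancing bias and variance in the model. A small K (such as K = 1) makes predictions sensitive to noise and individual outliers, which can lead to overfitting; a large K smooths the decision boundary but can blur genuine distinctions between classes, leading to underfitting. In practice, K is usually chosen by evaluating several candidate values with cross-validation, and an odd K is often used in binary classification to avoid tied votes.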
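One way to compare candidate values of K is leave-one-out cross-validation: predict each point from all the others and count how often the prediction is right. This is a minimal sketch on hypothetical toy data (two clusters plus one deliberately mislabeled point); `knn_predict` and `leave_one_out_accuracy` are illustrative names, and Euclidean distance via `math.dist` is assumed.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Return the majority label among the k training points closest to `query`."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def leave_one_out_accuracy(data, k):
    """Predict each point from all the others; return the fraction predicted correctly."""
    correct = 0
    for i, (point, label) in enumerate(data):
        rest = data[:i] + data[i + 1:]
        if knn_predict(rest, point, k) == label:
            correct += 1
    return correct / len(data)


# Hypothetical toy data: two clusters, plus one mislabeled "B" point inside cluster A.
data = [
    ((1.0, 1.0), "A"), ((1.0, 2.0), "A"), ((2.0, 1.0), "A"), ((2.0, 2.0), "A"),
    ((1.5, 1.5), "B"),  # noise
    ((6.0, 6.0), "B"), ((6.0, 7.0), "B"), ((7.0, 6.0), "B"), ((7.0, 7.0), "B"),
]

for k in (1, 3, 5):
    print(f"K={k}: leave-one-out accuracy {leave_one_out_accuracy(data, k):.2f}")

best_k = max((1, 3, 5), key=lambda k: leave_one_out_accuracy(data, k))
print("best K:", best_k)
```

On this toy data, K=1 scores 4/9 because the single nearest neighbor of every cluster-A point is the mislabeled point, while K=3 and K=5 both outvote it and score 8/9.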

# KNN in Practice

Applying the KNN algorithm follows a simple step-by-step process, which a real-world scenario helps make concrete.

# Step-by-step process

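Given a labeled training set and a new point, KNN makes a prediction in three steps:

1. Compute the distance from the new point to every point in the training set.
2. Select the K training points with the smallest distances.
3. Aggregate their targets: take the majority class for classification, or the average value for regression.

Because there is no training phase beyond storing the data, KNN makes predictions without fitting a complex mathematical model.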
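The process of finding nearest neighbors and aggregating their labels can be sketched from scratch in plain Python, assuming numeric feature tuples and Euclidean distance (`math.dist`, Python 3.8+). `knn_classify` and the toy points are hypothetical, for illustration only; libraries such as scikit-learn provide production implementations (`KNeighborsClassifier`).

```python
import math
from collections import Counter


def knn_classify(training_points, training_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Step 1: compute the distance from the query to every training point.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(training_points, training_labels)
    ]
    # Step 2: keep the k closest (sort by distance only, so labels are never compared).
    distances.sort(key=lambda pair: pair[0])
    k_nearest = distances[:k]
    # Step 3: majority vote. On a tie, Counter.most_common keeps first-seen order,
    # which here favors the label of the closer neighbor.
    votes = Counter(label for _, label in k_nearest)
    return votes.most_common(1)[0][0]


points = [(1, 1), (2, 1), (1, 2), (8, 8), (9, 8), (8, 9)]
labels = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_classify(points, labels, (2, 2)))  # red
print(knn_classify(points, labels, (7, 7)))  # blue
```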

# Example scenario

Imagine using KNN for image recognition: each image is represented by its pixel values, and a new image receives the label of the labeled training images whose pixel values are closest to its own.
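To make the scenario concrete, here is a toy sketch that treats each image as a flattened list of pixel values and labels a new image after its nearest training image. The 3x3 "images", their labels, and `classify_image` are invented for illustration; a real system would use many labeled images rather than four.

```python
import math
from collections import Counter

# Hypothetical 3x3 black-and-white images, flattened row by row (1 = ink, 0 = blank).
training_images = [
    ([0, 1, 0,
      0, 1, 0,
      0, 1, 0], "vertical"),
    ([1, 0, 0,
      1, 0, 0,
      1, 0, 0], "vertical"),
    ([0, 0, 0,
      1, 1, 1,
      0, 0, 0], "horizontal"),
    ([1, 1, 1,
      0, 0, 0,
      0, 0, 0], "horizontal"),
]


def classify_image(pixels, k=1):
    """Label `pixels` by majority vote among the k most similar training images."""
    nearest = sorted(training_images, key=lambda item: math.dist(item[0], pixels))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# A vertical stroke with one stray pixel in the bottom-right corner.
noisy_vertical = [0, 1, 0,
                  0, 1, 0,
                  0, 1, 1]
print(classify_image(noisy_vertical))  # vertical
```

With only four training images, K=1 is used here; with so little data, a larger K would pull in images from the other class.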

# Applications of KNN in Machine Learning

# Classification Problems

Image recognition and text categorization are two prominent classification tasks where the KNN algorithm is applied.

# Image recognition

In image recognition, KNN classifies an image by comparing it with a labeled dataset of known images. By measuring the distance between pixel values, it finds the closest matches and assigns the most common label among them. For simple, well-aligned images, this can work directly on raw pixel values, without a separate feature extraction step.

# Text categorization

Text categorization, another domain where KNN is used, involves assigning documents to predefined categories. Each text is first converted into a numeric vector (for example, word counts), which places it in a high-dimensional space; KNN then assigns a new document the most common category among its closest neighbors, with closeness often measured by cosine similarity. This approach simplifies organizing and classifying large amounts of textual information.
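A minimal sketch of KNN text categorization, assuming a simple bag-of-words representation (lowercased, whitespace-split word counts) and cosine similarity as the closeness measure. The documents, labels, and function names are hypothetical; practical systems usually apply TF-IDF weighting and drop very common words such as "the".

```python
import math
from collections import Counter


def bag_of_words(text):
    """Word-count vector for a lowercased, whitespace-tokenized text."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors (1.0 = same direction)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical labeled training documents.
training_docs = [
    ("the team won the football match", "sports"),
    ("the striker scored a late goal", "sports"),
    ("stocks fell as the market closed lower", "finance"),
    ("the bank raised interest rates", "finance"),
]


def categorize(text, k=3):
    """Assign the majority category among the k most similar training documents."""
    query = bag_of_words(text)
    # Higher similarity means a closer neighbor, so sort in descending order.
    ranked = sorted(
        training_docs,
        key=lambda doc: cosine_similarity(query, bag_of_words(doc[0])),
        reverse=True,
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


print(categorize("a late goal won the match"))            # sports
print(categorize("interest rates and the stock market"))  # finance
```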

# Regression Problems

Beyond classification, KNN can also handle regression problems, where it predicts continuous values rather than class labels.

# Predicting continuous values

In regression, where the goal is to estimate a numerical outcome, KNN predicts a value by averaging the target values of the K nearest neighbors (sometimes weighting closer neighbors more heavily). This makes it applicable in fields such as finance, healthcare, and marketing.
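A minimal regression sketch, assuming numeric feature tuples, Euclidean distance via `math.dist`, and an unweighted average of the neighbors' targets. The floor-area data and `knn_regress` are hypothetical.

```python
import math


def knn_regress(train, query, k=3):
    """Predict a continuous value as the mean target of the k nearest training points."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return sum(target for _, target in nearest) / len(nearest)


# Hypothetical data: (floor area in square meters,) -> price in thousands.
train = [((50,), 150), ((60,), 180), ((70,), 210), ((80,), 240), ((120,), 400)]

print(knn_regress(train, (68,), k=3))  # 210.0, the mean of 210, 180 and 240
```

A distance-weighted variant would weight each neighbor's target by the inverse of its distance, so that closer points count for more.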

# Practical examples

To illustrate KNN in regression, consider forecasting future sales from historical sales data. By finding past periods whose sales patterns most closely resemble recent ones, KNN can estimate upcoming sales from what happened after those similar periods.
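One way to apply this, sketched under stated assumptions: treat each run of three consecutive months as a feature vector, find the past runs most similar to the latest three months, and average the sales that followed them. The sales figures, the window length of 3, and `forecast_next` are hypothetical choices for illustration, not a recommended forecasting setup.

```python
import math


def forecast_next(history, window=3, k=2):
    """Forecast the next value from the k past windows most similar to the latest one."""
    # Build (window, value that followed it) pairs from the history.
    examples = [
        (history[i:i + window], history[i + window])
        for i in range(len(history) - window)
    ]
    latest = history[-window:]
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], latest))[:k]
    return sum(next_value for _, next_value in nearest) / len(nearest)


# Hypothetical monthly sales figures (units sold).
sales = [100, 120, 140, 110, 100, 120, 140, 115, 100, 120, 140]
print(forecast_next(sales))  # 112.5, the mean of 110 and 115
```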

# Advantages and Limitations

# Advantages of KNN

Simplicity

The KNN algorithm is easy to understand and implement, which makes it accessible to beginners in machine learning.

Its methodology is intuitive: predictions are based directly on the closest neighbors to a data point, and there is no separate training step beyond storing the data.

Versatility

KNN adapts to a wide range of tasks, from image recognition to regression problems.

Because the same procedure handles both classification and regression, and the main decisions are the distance metric and the value of K, it can be applied to new problems with little model-specific adjustment.

# Limitations of KNN

Computational cost

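As the volume of data increases, KNN tends to become significantly slower. A brute-force implementation computes the distance from each new query to every stored training point, so prediction time grows linearly with the size of the training set (and with the number of features).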

In environments where rapid predictions are crucial, the computational demands of KNN may hinder real-time decision-making processes.
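The linear growth can be observed with a brute-force sketch that counts its distance computations and times one query at several dataset sizes. `brute_force_knn`, the dataset sizes, and the random points are arbitrary choices for illustration, and timings will vary by machine.

```python
import heapq
import math
import random
import time


def brute_force_knn(train, query, k):
    """Return the k nearest points and how many distances were computed to find them."""
    # One distance computation per stored training point: this is the linear cost.
    distances = [(math.dist(point, query), point) for point in train]
    # nsmallest avoids a full sort, but cannot avoid the distance pass above.
    nearest = heapq.nsmallest(k, distances, key=lambda pair: pair[0])
    return [point for _, point in nearest], len(distances)


random.seed(0)
query = (0.5, 0.5)
for n in (1_000, 10_000, 100_000):
    train = [(random.random(), random.random()) for _ in range(n)]
    start = time.perf_counter()
    _, computed = brute_force_knn(train, query, k=5)
    elapsed_ms = (time.perf_counter() - start) * 1000
    print(f"n={n:>7,}: {computed:>7,} distance computations, {elapsed_ms:.1f} ms")
```

Index structures such as KD-trees and ball trees can reduce the number of distance computations, mainly for low-dimensional data; scikit-learn's `KNeighborsClassifier`, for instance, selects between them through its `algorithm` parameter (`"kd_tree"`, `"ball_tree"`, `"brute"`, or `"auto"`).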

Sensitivity to irrelevant features

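KNN can be sensitive to irrelevant features that do not contribute meaningfully to the classification or prediction. Because every feature contributes to the distance calculation, irrelevant or poorly scaled features can outweigh the informative ones and change which points count as nearest neighbors.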

This sensitivity highlights the importance of feature selection and preprocessing steps to enhance the accuracy and efficiency of KNN models.
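A small illustration of why feature selection matters, using hypothetical data: the label depends only on a test score, while a second, irrelevant feature (an ID-like number) has a much larger numeric range. With that feature included, the nearest neighbor is chosen almost entirely by the irrelevant column; dropping it restores the expected prediction. `knn_predict` and the data are illustrative only.

```python
import math
from collections import Counter


def knn_predict(train, query, k=1):
    """Return the majority label among the k training points closest to `query`."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Hypothetical data: feature 0 (a test score) determines the label;
# feature 1 (an ID-like number) is irrelevant but spans a much larger range.
train = [
    ((90, 1000), "pass"),
    ((85, 5000), "pass"),
    ((40, 3000), "fail"),
    ((35, 8000), "fail"),
]
query = (88, 3100)

print(knn_predict(train, query))  # fail: the ID column dominates the distance

# Feature selection: keep only the informative score column.
relevant_only = [((features[0],), label) for features, label in train]
print(knn_predict(relevant_only, (query[0],)))  # pass
```

When all features are relevant but measured on different scales, rescaling them (for example, min-max scaling each feature to the range [0, 1]) addresses the same problem without discarding information.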

In summary, KNN predicts a class or value for a data point from its nearest neighbors. Its applications span diverse domains, from image recognition to regression tasks, reflecting its adaptability. Despite its computational cost and sensitivity to irrelevant features, KNN remains a valuable tool for predictive analysis and a natural starting point for further exploration of machine learning.