Feature scaling is a fundamental preprocessing step in machine learning that transforms numerical feature values to a comparable range. It plays a crucial role in algorithm performance: by putting the independent features on a common scale, it helps prevent numerical instability and keeps features with large magnitudes from biasing supervised learning models. In this post, we look at why feature scaling matters, how it affects the nearest neighbor algorithm, and which techniques can improve model accuracy.

# Importance of Feature Scaling

In data preprocessing, it is important that features contribute comparably to the model. Standardizing feature values gives each attribute a comparable role in the model's decision-making process, which prevents a feature from overshadowing the others simply because it is measured in larger units.

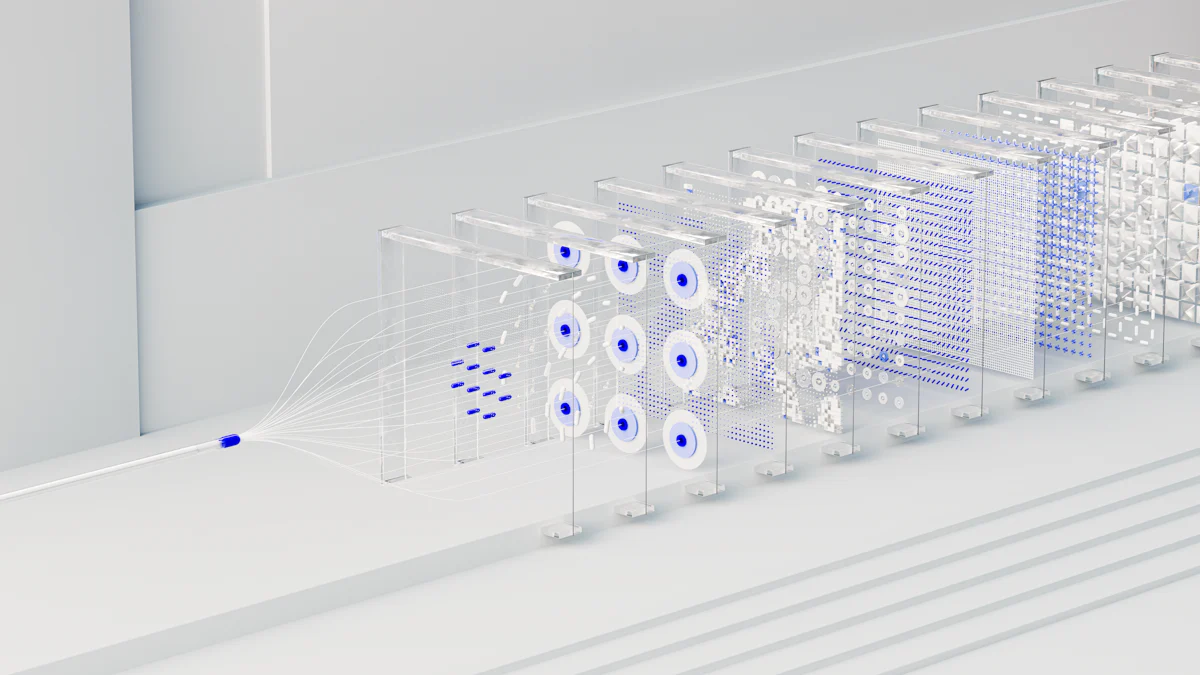

For the nearest neighbor algorithm, feature scaling directly affects distance calculations and therefore classification decisions. Because neighbors are chosen by distance, a feature with a large numeric range can dominate the result. Scaling lets every feature contribute, so the neighbors the algorithm finds are close in all dimensions rather than only in the largest one. This typically leads to more accurate classifications.

Scaling features not only improves accuracy but can also speed up convergence. Models trained with iterative optimization, such as gradient descent, tend to converge faster on scaled data than on unscaled data. Feature scaling also puts values from different attributes on a common footing, so no attribute is favored because of its units.

# Techniques for Feature Scaling

When choosing a feature scaling technique, it's essential to weigh the advantages and limitations of each method. Two common approaches are MinMaxScaler and StandardScaler, each suited to different use cases.

# MinMaxScaler vs StandardScaler

# Definition and Use Cases

- MinMaxScaler transforms features by scaling them to a specified range, typically between 0 and 1. This normalization technique is particularly useful when the distribution of features is not Gaussian or when a bounded range is required, but because it relies on the minimum and maximum, it is sensitive to outliers. StandardScaler, on the other hand, standardizes features by removing the mean and scaling to unit variance. It is more appropriate for approximately normally distributed data without significant outliers.
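To make the two definitions concrete, here is a minimal sketch using scikit-learn. The five input values are hypothetical, and the hand-written formulas are included only to show what each scaler computes with its default settings.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical single-feature column, shaped (n_samples, n_features).
X = np.array([[10.0], [20.0], [30.0], [40.0], [50.0]])

# Min-max scaling: x' = (x - min) / (max - min), giving values in [0, 1].
X_minmax = MinMaxScaler().fit_transform(X)

# Standardization: z = (x - mean) / std, giving zero mean and unit variance.
X_standard = StandardScaler().fit_transform(X)

# The same results computed by hand, to make the formulas explicit.
manual_minmax = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))
manual_standard = (X - X.mean(axis=0)) / X.std(axis=0)

print(X_minmax.ravel())
print(X_standard.ravel())
```

Both scalers learn their statistics column by column, so each feature is rescaled independently of the others.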

# Pros and Cons

- MinMaxScaler ensures that all features are within a uniform range, making it ideal for algorithms sensitive to varying scales. However, a single extreme value can compress the remaining values into a narrow band, hurting performance. In contrast, StandardScaler maintains the shape of the original distribution and does not confine values to a fixed range. It works best when the data is roughly Gaussian, which may not always hold true in practice, and its mean and variance are themselves affected by outliers.

Another critical comparison lies between StandardScaler and RobustScaler, emphasizing their distinctive characteristics in different scenarios.

# StandardScaler vs RobustScaler

# Definition and Use Cases

- While StandardScaler is sensitive to outliers due to its reliance on mean and variance calculations, RobustScaler employs robust statistics to scale features based on median and interquartile range. This makes it more resilient against outliers, ensuring stable performance even with skewed data distributions.

# Pros and Cons

- The advantage of using StandardScaler lies in its ability to preserve information about the shape of the distribution while normalizing feature values. However, its susceptibility to outliers can affect model accuracy. Conversely, RobustScaler offers a robust solution for outlier handling, but it does not bound values to a fixed range, and the outliers themselves remain in the data as extreme values after scaling.
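The difference in outlier sensitivity can be illustrated with a small sketch. The data below is hypothetical: five ordinary values plus one extreme value, scaled with each scaler's default settings.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler, StandardScaler

# Hypothetical feature with one extreme outlier (1000.0).
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [1000.0]])

# StandardScaler: (x - mean) / std. The outlier inflates both statistics.
X_standard = StandardScaler().fit_transform(X)

# RobustScaler: (x - median) / IQR. Median and IQR barely move with one outlier.
X_robust = RobustScaler().fit_transform(X)

# Spread of the five "ordinary" points after each transformation.
inlier_spread_standard = X_standard[:5].max() - X_standard[:5].min()
inlier_spread_robust = X_robust[:5].max() - X_robust[:5].min()

print(inlier_spread_standard, inlier_spread_robust)
```

With StandardScaler the ordinary points end up squeezed close together, while RobustScaler keeps them well separated. In both cases the outlier itself is still present after scaling.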

When selecting an appropriate technique for feature scaling, understanding dataset characteristics and model requirements is paramount to achieving optimal results.

# Choosing the Right Technique

# Dataset Characteristics

- Consider the nature of your dataset, including its distribution shape, presence of outliers, and scale variations among features. Tailoring your scaling technique to these characteristics can enhance model performance significantly.

# Model Requirements

- Evaluate the specific requirements of your machine learning model concerning feature scales and potential impacts of outliers on algorithm behavior. Choosing a technique that aligns with these requirements ensures robustness and accuracy in model predictions.

# Impact on KNN

# Baseline Accuracy

When training the KNN algorithm on unscaled data, the model may struggle to accurately identify nearest neighbors due to varying feature scales. This can lead to suboptimal performance and reduced accuracy in classification tasks. However, by scaling features appropriately, the algorithm can effectively mitigate these challenges and improve its predictive capabilities.

# Model Comparisons

Analyzing accuracy metrics and conducting ROC curve analysis provides valuable insights into the performance of the KNN algorithm with and without feature scaling. By comparing results between training on unscaled data and training using scaled features, practitioners can observe the significant impact of feature scaling on model accuracy and efficiency. These comparisons highlight the necessity of incorporating proper feature scaling techniques to elevate the KNN algorithm's effectiveness in handling diverse datasets.
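A comparison along these lines might look like the following sketch. It assumes scikit-learn's built-in wine dataset as a stand-in for a dataset with mixed feature scales, and it reports accuracy only. The split parameters and `n_neighbors=5` are illustrative choices, not values from this post.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Assumption: the wine dataset stands in for "a dataset with mixed feature scales".
X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# Baseline: KNN on raw, unscaled features.
knn_raw = KNeighborsClassifier(n_neighbors=5)
knn_raw.fit(X_train, y_train)
acc_raw = knn_raw.score(X_test, y_test)

# Same model, but with standardization fitted on the training split only.
knn_scaled = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))
knn_scaled.fit(X_train, y_train)
acc_scaled = knn_scaled.score(X_test, y_test)

print(f"unscaled accuracy: {acc_raw:.3f}")
print(f"scaled accuracy:   {acc_scaled:.3f}")
```

Wrapping the scaler and classifier in a pipeline keeps the test split out of the scaler's statistics, which avoids leaking information from the evaluation data into preprocessing.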

# Practical Implementation

# Coded Data Examples

To demonstrate the significance of feature scaling in a practical setting, consider a scenario where a dataset contains two features: age and income. The age feature ranges from 0 to 100, while income varies between 0 and 1,000,000. Without scaling the data, the distance calculations in the KNN algorithm would be heavily influenced by the income feature due to its larger scale. This dominance can lead to inaccurate classifications as the algorithm prioritizes magnitude over relevance.
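The effect can be worked through by hand. The sketch below uses three hypothetical people and plain Euclidean distance, with the feature ranges from the scenario above used for min-max scaling.

```python
import math

# Hypothetical people described as (age in years, income in currency units).
query = (30, 50_000)
similar_age = (32, 58_000)      # close in age, income differs by 8,000
similar_income = (75, 51_000)   # 45 years older, income differs by 1,000

def euclidean(a, b):
    """Plain Euclidean distance between two equal-length tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def min_max(point, mins, maxs):
    """Rescale each coordinate to [0, 1] using the given feature ranges."""
    return tuple((v - lo) / (hi - lo) for v, lo, hi in zip(point, mins, maxs))

# Feature ranges taken from the text: age 0-100, income 0-1,000,000.
mins, maxs = (0, 0), (100, 1_000_000)

# Unscaled: income differences swamp age differences.
raw_nearest = min([similar_age, similar_income], key=lambda p: euclidean(query, p))

# Scaled: both features contribute on the same 0-1 scale.
q = min_max(query, mins, maxs)
scaled_nearest = min(
    [similar_age, similar_income],
    key=lambda p: euclidean(q, min_max(p, mins, maxs)),
)

print(raw_nearest)     # (75, 51000)
print(scaled_nearest)  # (32, 58000)
```

On the raw values, the 45-year age gap barely registers next to a 1,000-unit income gap, so the much older person is chosen as the nearest neighbor. After scaling, the person of similar age is nearest.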

# DataFrame for Scaled Features

By implementing MinMaxScaler or StandardScaler, you can transform these features into a comparable range. For instance, applying MinMaxScaler would normalize both age and income values between 0 and 1. On the other hand, StandardScaler would standardize these features based on mean and variance, ensuring fair contributions during model training.

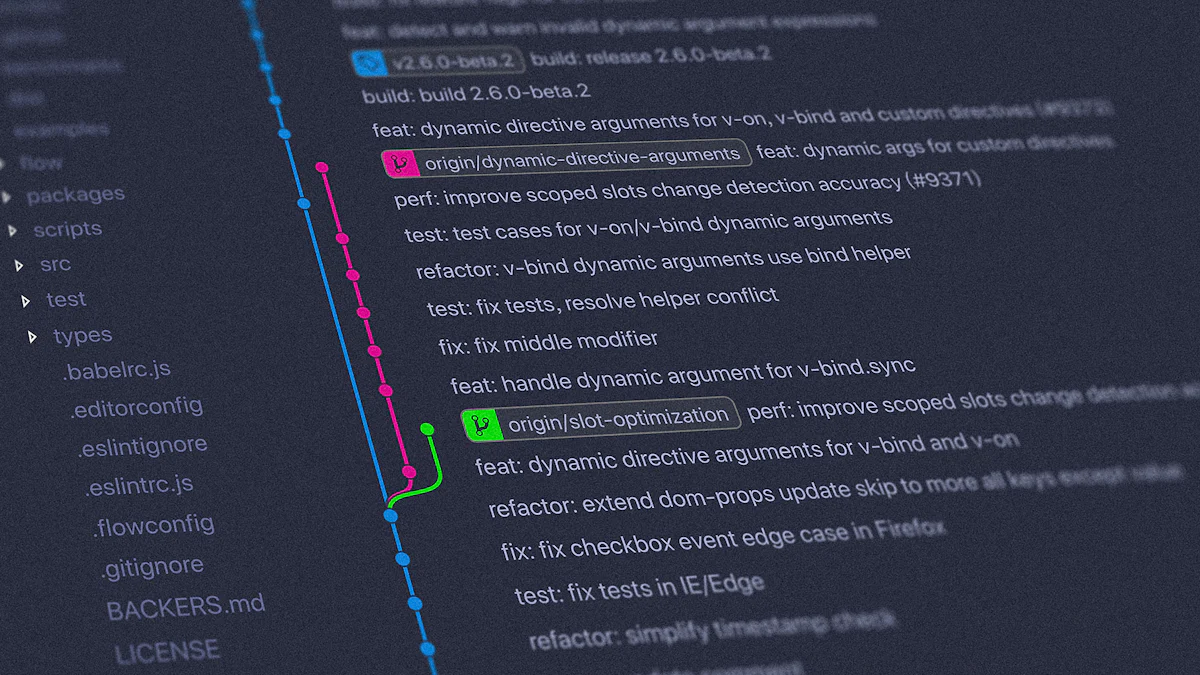
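One way to build such a DataFrame is sketched below. The sample rows are hypothetical, and pandas is assumed to be available alongside scikit-learn.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical sample values within the ranges described above.
df = pd.DataFrame({
    "age": [18, 25, 40, 60, 85],
    "income": [12_000, 35_000, 80_000, 250_000, 900_000],
})

# fit_transform returns a NumPy array, so wrap it back into a DataFrame.
# Note: MinMaxScaler uses the observed min and max of each column,
# not the nominal 0-100 and 0-1,000,000 ranges.
df_minmax = pd.DataFrame(
    MinMaxScaler().fit_transform(df), columns=df.columns, index=df.index
)
df_standard = pd.DataFrame(
    StandardScaler().fit_transform(df), columns=df.columns, index=df.index
)

print(df_minmax.round(3))
print(df_standard.round(3))
```

Keeping the original column names and index makes it easy to compare the scaled values against the raw data row by row.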

# Saving Scaling Transformation

After scaling your features for optimal KNN performance, it's essential to save this transformation for future use. By saving the fitted scaling parameters, you can apply exactly the same transformation to new data without refitting the scaler.

# Saving

A scaler fitted with scikit-learn can be serialized to a file, for example with joblib or Python's pickle module. This keeps feature scaling consistent across projects and makes it easy to plug the same transformation into your machine learning pipelines.

# Reusing in Future Models

When working on subsequent models or datasets with similar feature characteristics, reloading your saved scaling transformation can streamline preprocessing tasks. This approach not only enhances reproducibility but also maintains consistency in model inputs for improved accuracy.
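Saving and reloading might look like the following sketch. It assumes joblib for serialization. The file name, temporary directory, and sample rows are all hypothetical.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical training data: columns are age and income.
X_train = np.array([[25, 40_000], [40, 85_000], [60, 120_000]], dtype=float)

# Fit on the training data only, then persist the fitted scaler.
scaler = StandardScaler().fit(X_train)
scaler_path = os.path.join(tempfile.mkdtemp(), "scaler.joblib")  # hypothetical file name
joblib.dump(scaler, scaler_path)

# Later (for example in another script): reload and apply to new rows.
loaded_scaler = joblib.load(scaler_path)
X_new = np.array([[33, 62_000]], dtype=float)
X_new_scaled = loaded_scaler.transform(X_new)

print(X_new_scaled)
```

Note that the reloaded scaler calls `transform`, not `fit_transform`, so new data is scaled with the statistics learned from the training set. Serialized scalers are generally best reloaded with the same scikit-learn version that produced them.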

In conclusion, feature scaling is a pivotal step in machine learning that keeps features on a comparable footing and supports stable algorithm performance. By standardizing feature values, practitioners can prevent scale-induced bias and improve the accuracy of nearest neighbor algorithms. Techniques like MinMaxScaler, StandardScaler, and RobustScaler each offer distinct advantages depending on the data's distribution and outliers, and the choice can affect both convergence speed and accuracy. Matching the scaling technique to the dataset and the model remains one of the simplest ways to get more out of complex data.