Machine learning has become a driving force behind innovation in industries such as healthcare and finance. Libraries like JAX and PyTorch have made building advanced neural networks far more accessible, especially as deep learning has grown. They handle the heavy lifting, such as automatic differentiation and hardware acceleration, so developers and researchers can focus on improving models rather than on low-level numerical details. As a result, deep learning has become more approachable, and AI applications can be developed more quickly.

In this blog post, we'll dive into what makes JAX and PyTorch stand out, how they perform, and when you might want to use one over the other. By understanding the strengths of each, you can make smarter choices for your machine learning projects, whether you're a researcher experimenting with new algorithms or a developer building real-world AI solutions. This comparison will guide you in selecting the right framework to match your project's needs.

# Why Libraries Matter in Deep Learning

Specialized libraries play a central role in deep learning by streamlining the development and training of complex models. By abstracting away the underlying mathematics, they let developers concentrate on model architecture and experimentation. They also improve performance through features such as GPU acceleration, which makes it practical to train on large datasets. Finally, the ecosystems and communities around these libraries encourage collaboration and quick experimentation, which in turn drives progress in AI applications.

To illustrate these advantages, let's explore two well-known libraries, JAX and PyTorch, each of which offers distinct features suited to different needs in deep learning.

# Exploring PyTorch: Features and Benefits

PyTorch, originally developed by Facebook's AI Research lab (now part of Meta AI), has rapidly become a leading open-source platform for deep learning projects. It is known for its user-friendly interface and powerful capabilities, which suit both researchers and developers. Because its computation graph is dynamic, users can build and modify neural networks on the fly, which makes experimentation fast and debugging straightforward. This flexibility lets practitioners try new ideas without being constrained by a rigid, predefined graph, ultimately speeding up the development of complex models. PyTorch is also widely adopted across both industry and research, whereas JAX is used mainly by research groups and is less common in production environments.

The library achieves high performance by using GPU acceleration for demanding computational tasks. PyTorch also ships with companion libraries and tools that extend its capabilities, making it a versatile choice for areas such as computer vision and natural language processing. Its open-source community encourages collaboration and the exchange of ideas, which benefits individual projects and the field of deep learning as a whole.
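As a rough illustration of GPU acceleration in practice, the sketch below picks a device at runtime and places both the model and the data on it. The layer sizes and variable names are arbitrary choices for this example, and the code falls back to the CPU when no CUDA device is visible.

```python
import torch
import torch.nn as nn

# Use a CUDA GPU when PyTorch can see one, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Parameters and inputs must live on the same device.
model = nn.Linear(10, 1).to(device)
batch = torch.randn(5, 10, device=device)

output = model(batch)
print(output.shape, output.device)
```

The same script runs unchanged on a laptop and on a GPU server, which is one reason this pattern is so common in PyTorch code.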

# Enhanced Model Development

PyTorch builds its computation graph dynamically as the code runs, so a network's architecture can change from one forward pass to the next using ordinary Python control flow. This is highly beneficial for research, because it allows rapid iteration on model design, particularly in natural language processing and computer vision tasks.
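A small sketch can make the idea of a dynamic graph concrete. The `DynamicDepthNet` class and its `num_repeats` argument are hypothetical names invented for this illustration; the point is only that a plain Python loop decides how many times a layer is applied on each call.

```python
import torch
import torch.nn as nn

class DynamicDepthNet(nn.Module):
    """Hypothetical model whose effective depth is chosen per call."""

    def __init__(self):
        super().__init__()
        self.layer = nn.Linear(10, 10)
        self.head = nn.Linear(10, 1)

    def forward(self, x, num_repeats):
        # Ordinary Python loop: the graph is rebuilt on every call,
        # so the number of layer applications can differ between calls.
        for _ in range(num_repeats):
            x = torch.relu(self.layer(x))
        return self.head(x)

model = DynamicDepthNet()
x = torch.randn(5, 10)

shallow = model(x, num_repeats=1)  # one hidden application
deep = model(x, num_repeats=3)     # three hidden applications

# Autograd follows whichever path was actually executed.
deep.sum().backward()
print(shallow.shape, deep.shape)
```

Because the graph is recorded during execution, standard Python tools such as `print` statements and debuggers work inside `forward` as well.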

# Customization for Diverse Use Cases

Developers have access to a wide range of customization options in PyTorch. Whether they are adjusting hyperparameters or trying new training methods, this adaptability lets them tailor models to project needs in fields such as healthcare and finance.
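One example of this kind of customization is giving different parts of a model different hyperparameters. The sketch below, with made-up layer sizes and learning rates, uses optimizer parameter groups so the first layer trains with a smaller learning rate than the last.

```python
import torch.nn as nn
import torch.optim as optim

# A small two-layer model; the sizes are arbitrary.
model = nn.Sequential(nn.Linear(10, 16), nn.ReLU(), nn.Linear(16, 1))

optimizer = optim.SGD(
    [
        # First layer: override the learning rate for this group only.
        {"params": model[0].parameters(), "lr": 1e-3},
        # Last layer: no override, so it uses the default below.
        {"params": model[2].parameters()},
    ],
    lr=1e-2,
    momentum=0.9,
)

learning_rates = [group["lr"] for group in optimizer.param_groups]
print(learning_rates)
```

The same mechanism applies to other per-group options, such as momentum or weight decay.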

# Ideal Use Cases

- Research and Development: Quick creation of cutting-edge algorithms through rapid prototyping.

- Computer Vision: Sophisticated image processing applications enabled by libraries such as torchvision.

- Natural Language Processing: Effective handling of sequential data for tasks such as sentiment analysis.

# Coding Example

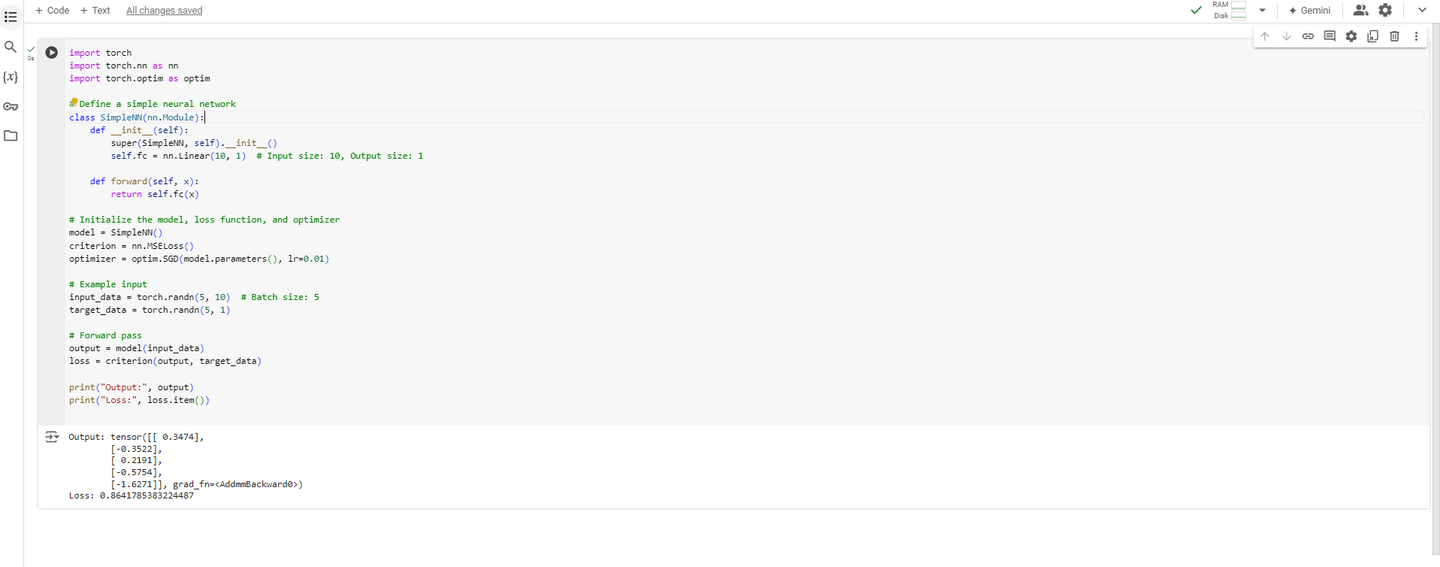

Here is a simple example of defining and using a neural network in PyTorch:

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Define a simple neural network
    class SimpleNN(nn.Module):
        def __init__(self):
            super(SimpleNN, self).__init__()
            self.fc = nn.Linear(10, 1)  # Input size: 10, Output size: 1

        def forward(self, x):
            return self.fc(x)

    # Initialize the model, loss function, and optimizer
    model = SimpleNN()
    criterion = nn.MSELoss()
    optimizer = optim.SGD(model.parameters(), lr=0.01)

    # Example input
    input_data = torch.randn(5, 10)  # Batch size: 5
    target_data = torch.randn(5, 1)

    # Forward pass
    output = model(input_data)
    loss = criterion(output, target_data)

    print("Output:", output)
    print("Loss:", loss.item())

This example creates a simple neural network in PyTorch. The model consists of a single fully connected layer that maps an input of size 10 to an output of size 1. After the model, loss function, and optimizer are set up, a forward pass runs on random input data and computes the mean squared error against random targets. The optimizer is created but never stepped, so no training takes place here.
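To go from a single forward pass to actual training, the loss has to be backpropagated and the optimizer stepped. Here is a minimal sketch of that loop, assuming the same shapes as above and using `nn.Linear` directly in place of `SimpleNN` for brevity. The seed and the number of steps are arbitrary choices for this illustration.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)  # arbitrary seed, only for reproducibility

model = nn.Linear(10, 1)
criterion = nn.MSELoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

input_data = torch.randn(5, 10)  # Batch size: 5
target_data = torch.randn(5, 1)

losses = []
for step in range(20):
    optimizer.zero_grad()                             # clear old gradients
    loss = criterion(model(input_data), target_data)  # forward pass
    loss.backward()                                   # compute gradients
    optimizer.step()                                  # update parameters
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

On this tiny random dataset the loss should fall steadily, since the model is simply fitting five points with a small learning rate.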

# Strong Community Support

The vibrant PyTorch community provides abundant resources, including documentation, tutorials, and forums, so developers can find the support they need to stay productive.

# Discovering JAX: Features and Benefits

JAX, developed by Google, is an open-source library designed for high-performance numerical computing and machine learning. It is known for its focus on automatic differentiation and composability, which lets researchers and developers build intricate models efficiently. Its NumPy-compatible API and support for hardware acceleration on GPUs and TPUs make it an attractive choice for those aiming to maximize computational performance. With JAX, users can write concise, expressive code that can run substantially faster during model training and inference.
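To give a feel for the NumPy integration, the sketch below runs the same small computation with `numpy` and with `jax.numpy`. The values are arbitrary. One difference worth knowing is that JAX arrays are immutable, so updates go through the `.at[...]` syntax and return a new array.

```python
import numpy as np
import jax.numpy as jnp

# The same computation written against both APIs.
x_np = np.linspace(0.0, 1.0, 5)
x_jax = jnp.linspace(0.0, 1.0, 5)

y_np = np.sum(np.sin(x_np) ** 2)
y_jax = jnp.sum(jnp.sin(x_jax) ** 2)

# JAX arrays are immutable: this returns a new array and leaves x_jax as is.
updated = x_jax.at[0].set(10.0)

print(y_np, y_jax)
```

JAX uses 32-bit floats by default, so the two results agree only up to floating-point precision.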

JAX also encourages a functional programming style, which promotes code reuse and improves readability and maintainability. This focus on composability allows developers to build sophisticated algorithms from small, pure functions, making JAX a valuable tool for both academic research and industry applications. JAX relies on external libraries such as Flax and Haiku for building neural networks, and these ecosystems are still less mature than PyTorch's widely adopted offerings. Despite its advantages, JAX is less widely used than PyTorch and remains more common in research groups than in mainstream production environments.
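In practice, the functional style means that state such as model parameters and random keys is passed explicitly instead of being stored on objects. The sketch below illustrates this with two hypothetical helpers, `init_linear` and `predict`, which are names made up for this example and not part of JAX.

```python
import jax
import jax.numpy as jnp

def init_linear(key, in_dim, out_dim):
    """Hypothetical helper: build parameters from an explicit PRNG key."""
    return {
        "w": jax.random.normal(key, (in_dim, out_dim)),
        "b": jnp.zeros((out_dim,)),
    }

def predict(params, x):
    """Pure function: the output depends only on the arguments."""
    return x @ params["w"] + params["b"]

# Randomness is explicit: split one key into independent sub-keys.
key = jax.random.PRNGKey(0)
param_key, data_key = jax.random.split(key)

params = init_linear(param_key, 10, 1)
same_params = init_linear(param_key, 10, 1)  # same key, same values

x = jax.random.normal(data_key, (5, 10))
out = predict(params, x)
print(out.shape)
```

Because nothing is hidden in global state, the same inputs always give the same outputs, which is what lets JAX transform and compile such functions safely.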

# Optimized Model Development

JAX stands out for combining automatic differentiation with XLA (Accelerated Linear Algebra) compilation. XLA compiles numerical code into kernels optimized for the target hardware, including GPUs and TPUs, which can speed up both training and inference. Researchers can implement cutting-edge algorithms and see results more quickly, making JAX a valuable tool in the deep learning landscape.
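The two features combine naturally: `jax.grad` turns a loss function into a gradient function, and `jax.jit` compiles the result with XLA. Below is a minimal sketch with a hand-picked input, where `loss_fn` and the array values are assumptions chosen so the gradient is easy to check by hand.

```python
import jax
import jax.numpy as jnp

def loss_fn(w, x, y):
    # Mean squared error of a linear model.
    return jnp.mean((x @ w - y) ** 2)

# Differentiate with respect to the first argument (w), then compile.
grad_fn = jax.jit(jax.grad(loss_fn))

x = jnp.ones((5, 10))
y = jnp.zeros((5, 1))
w = jnp.ones((10, 1))

g = grad_fn(w, x, y)
print(g.shape)
```

With these inputs every prediction is 10 and every target is 0, so each gradient entry works out to (2/5) * 5 * 10 = 20.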

# Customization and Flexibility

JAX provides extensive customization options through composable function transformations such as `grad`, `jit`, and `vmap`, which can be combined freely. This flexibility is particularly beneficial in research environments where new approaches are tried constantly. JAX's functional programming paradigm also supports reusable components, which makes experimentation and iteration faster.
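As an illustration of composing transformations, the sketch below stacks `jax.grad`, `jax.vmap`, and `jax.jit` to compute one gradient per example in a batch. The function `example_loss` and the inputs are invented for this example.

```python
import jax
import jax.numpy as jnp

def example_loss(w, x, y):
    # Squared error for a single example: x has shape (10,), y is a scalar.
    return (jnp.dot(x, w) - y) ** 2

# grad: differentiate w.r.t. w; vmap: map over the batch axis of x and y
# while sharing w; jit: compile the composed function with XLA.
per_example_grads = jax.jit(
    jax.vmap(jax.grad(example_loss), in_axes=(None, 0, 0))
)

w = jnp.ones(10)
xs = jnp.ones((5, 10))
ys = jnp.zeros(5)

grads = per_example_grads(w, xs, ys)
print(grads.shape)
```

The per-example function is written once for a single input, and the batching and compilation are layered on afterwards without touching it.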

# Ideal Use Cases

- Scientific Research: Accelerating simulations and model development in fields like physics and biology.

- Machine Learning: Implementing state-of-the-art algorithms with efficient automatic differentiation.

- High-Performance Computing: Leveraging JAX for complex computations requiring optimized performance.

# Coding Example

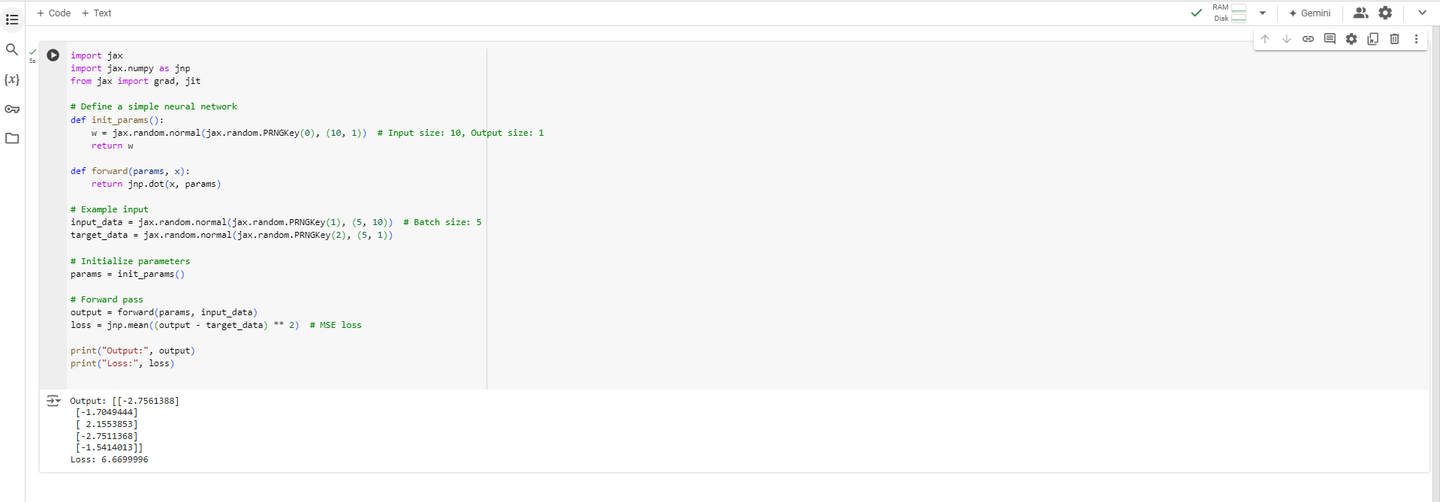

Here is a simple example of defining and using a neural network in JAX:

    import jax
    import jax.numpy as jnp
    from jax import grad, jit

    # Define a simple neural network
    def init_params():
        w = jax.random.normal(jax.random.PRNGKey(0), (10, 1))  # Input size: 10, Output size: 1
        return w

    def forward(params, x):
        return jnp.dot(x, params)

    # Example input
    input_data = jax.random.normal(jax.random.PRNGKey(1), (5, 10))  # Batch size: 5
    target_data = jax.random.normal(jax.random.PRNGKey(2), (5, 1))

    # Initialize parameters
    params = init_params()

    # Forward pass
    output = forward(params, input_data)
    loss = jnp.mean((output - target_data) ** 2)  # MSE loss

    print("Output:", output)
    print("Loss:", loss)

This example demonstrates a basic neural network implementation in JAX using a functional programming approach. The parameters live in a plain array that is passed explicitly to `forward`, which computes the output as a dot product with the input. Random input and target data are generated from explicit PRNG keys, and the mean squared error is computed from the forward pass. Although `grad` and `jit` are imported, the example stops at the forward pass and does not use them.
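To turn the forward pass into training, the loss can be differentiated and the parameters updated by gradient descent. Here is a minimal sketch under the same shapes as above. The `mse_loss` and `update` functions, the `LEARNING_RATE` constant, and the step count are assumptions made for this illustration.

```python
import jax
import jax.numpy as jnp

LEARNING_RATE = 0.01  # arbitrary choice for this sketch

def forward(params, x):
    return jnp.dot(x, params)

def mse_loss(params, x, y):
    return jnp.mean((forward(params, x) - y) ** 2)

@jax.jit
def update(params, x, y):
    # value_and_grad returns the loss and its gradient w.r.t. params.
    loss, grads = jax.value_and_grad(mse_loss)(params, x, y)
    # Parameters are immutable, so return a new array.
    return params - LEARNING_RATE * grads, loss

# One key split three ways: parameters, inputs, targets.
keys = jax.random.split(jax.random.PRNGKey(0), 3)
params = jax.random.normal(keys[0], (10, 1))
input_data = jax.random.normal(keys[1], (5, 10))  # Batch size: 5
target_data = jax.random.normal(keys[2], (5, 1))

losses = []
for _ in range(20):
    params, loss = update(params, input_data, target_data)
    losses.append(float(loss))

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

Unlike the PyTorch loop, there is no optimizer object holding state here: the updated parameters are returned from `update` and fed back in on the next step.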

# Engaged Community and Resources

The JAX community is growing, with a wealth of resources available, including documentation, tutorials, and active forums. This support network helps developers maximize their productivity and encourages collaboration across projects.

# PyTorch vs. JAX: A Comparative Overview

When choosing between PyTorch and JAX for deep learning applications, it's essential to consider their distinct features, advantages, and ideal use cases. Below is a comparison table that highlights the key differences and similarities between these two powerful libraries.

| Feature | PyTorch | JAX |
|---|---|---|
| Developer | Facebook AI Research | Google |
| Primary Use | Deep learning, computer vision, NLP | High-performance numerical computing, ML |
| Computation Graph | Dynamic computation graph built at runtime | Pure functions traced and compiled with XLA; functional and composable |
| Automatic Differentiation | Yes, with an easy-to-use interface | Yes, highly efficient with XLA compilation |
| Hardware Acceleration | Optimized for GPUs and CPUs | Optimized for GPUs and TPUs |
| Ecosystem | Rich ecosystem with many libraries (e.g., torchvision, torchaudio, torchtext) supporting a wide range of deep learning tasks. | Integrates well with NumPy and other scientific computing tools but has a more limited ecosystem for neural networks. |
| User Interface | Intuitive, suitable for beginners | More suited for users familiar with functional programming |
| Community Support | Large and active community | Growing community with expanding resources |
| Customization | Extensive options for model customization | High flexibility for composing functions |
| Ideal Use Cases | Research, prototyping, production deployment | Scientific computing, high-performance ML |

This table provides a quick overview of the strengths and considerations for both PyTorch and JAX, helping you decide which tool best suits your project's needs.

# Conclusion

In conclusion, both JAX and PyTorch offer distinctive advantages for deep learning projects, making them valuable tools for researchers and developers alike. JAX is well suited to high-performance numerical computing and automatic differentiation, enabling users to develop complex algorithms efficiently. PyTorch, on the other hand, excels at rapid prototyping and has a user-friendly interface, so intricate models can be built without a steep learning curve.

In the end, the decision between these two frameworks should be based on your project requirements and personal preferences, in particular whether you value raw speed or user-friendliness more. PyTorch's widespread adoption and extensive ecosystem make it the more common choice, while JAX remains used mainly within research groups. Both libraries enable developers to create flexible and scalable models, driving innovation in a variety of AI applications.