Machine learning is a fascinating field that empowers computers to learn and make decisions without explicit programming. Among the many algorithms available, the k-nearest neighbor (KNN) algorithm stands out for its simplicity and effectiveness. It is worth understanding well because it is applied in diverse domains such as disease risk prediction, recommender systems, and sentiment analysis. In this blog, we walk through the seven steps to master KNN and unlock its potential.

# Step 1: Understanding KNN

## What is KNN?

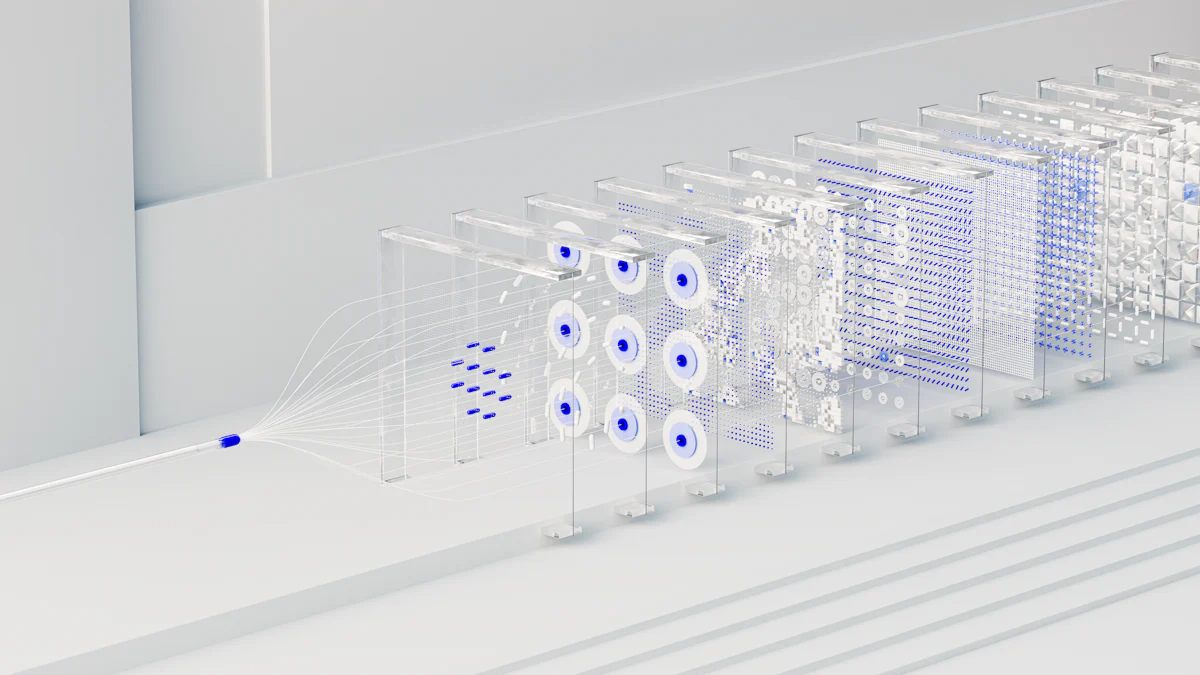

The k-nearest neighbor algorithm (KNN) is a fundamental machine learning technique used for both classification and regression tasks. Its simplicity and flexibility make it a popular choice in many real-world applications. KNN measures the similarity between data points by their distance in feature space: to make a prediction for a new point, it finds the 'k' closest points in the training data and derives the classification or regression output from them. It is a non-parametric, "lazy" learner: training amounts to storing the labeled examples, and the distance comparisons happen at prediction time.

### Definition and purpose

KNN classifies a new data point by comparing it to existing labeled data: it finds the k labeled points nearest to the new point and assigns the most common class among them. No explicit model is fitted, so the labeled data itself acts as the classifier.
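In symbols, writing $N_k(x)$ for the set of indices of the $k$ training points nearest to a query $x$ and $\mathbb{1}[\cdot]$ for the indicator function (notation introduced here for illustration), the majority vote and its regression counterpart (the plain average of the neighbors' targets) can be sketched as:

$$
\hat{y}_{\text{class}}(x) = \arg\max_{c} \sum_{i \in N_k(x)} \mathbb{1}[y_i = c],
\qquad
\hat{y}_{\text{reg}}(x) = \frac{1}{k} \sum_{i \in N_k(x)} y_i
$$

When two classes receive the same number of votes, a tie-breaking rule is needed, for example preferring the class of the single nearest neighbor.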

### How KNN works

KNN computes the distance between the new point and the training points using a metric such as Euclidean or Manhattan distance. It then selects the k closest neighbors and predicts the outcome from their classes (for classification) or values (for regression). In the basic form, each of those k neighbors has an equal say in the prediction; a common variant weights neighbors by distance so that closer points count more.
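The two distance metrics named above fit in a few lines of Python. This is a minimal sketch assuming each data point is a tuple of numeric features of equal length; the function names `euclidean` and `manhattan` are illustrative, not from a library.

```python
import math

def euclidean(a, b):
    """Straight-line distance: square root of the summed squared feature differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """City-block distance: sum of the absolute feature differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

p, q = (1.0, 2.0), (4.0, 6.0)
print(euclidean(p, q))  # 5.0
print(manhattan(p, q))  # 7.0
```

Python 3.8 and later also provide `math.dist`, which computes the Euclidean distance between two points directly.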

## Applications of KNN

KNN finds extensive use in various domains due to its simplicity and adaptability.

### Classification

In classification tasks, KNN assigns a new data point the class label held by the majority of its nearest neighbors. Because it makes no assumption about the shape of the decision boundary, this approach suits problems where the boundaries between classes are non-linear or complex.
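A from-scratch sketch of the majority-vote classifier follows, assuming numeric feature tuples, Euclidean distance, and a small hypothetical toy dataset. `knn_classify` is an illustrative name, not a library function, and `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter

def knn_classify(train_X, train_y, query, k=3):
    """Predict a label for `query` by majority vote among its k nearest training points."""
    # Pair every training point's Euclidean distance to the query with its label
    distances = [(math.dist(x, query), label) for x, label in zip(train_X, train_y)]
    # Keep the k closest pairs (sorted() is stable, so equal distances keep input order)
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Count the labels; most_common lists equal counts in first-seen order,
    # so among tied labels the one seen first (nearest) wins
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two well-separated clusters in two features
train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_y = ["red", "red", "red", "blue", "blue", "blue"]

print(knn_classify(train_X, train_y, (2.0, 2.0), k=3))  # red
print(knn_classify(train_X, train_y, (6.0, 5.5), k=3))  # blue
```

Sorting every training point for each query is fine for illustration; on large datasets, libraries use faster neighbor-search structures.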

### Regression

For regression problems, KNN predicts a continuous value by averaging the target values of the k nearest neighbors, optionally weighting each neighbor by the inverse of its distance. This is useful when a numerical outcome can be estimated from similar instances in the training set.
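The same idea applied to regression, as a minimal sketch: the hypothetical helper `knn_regress` averages the targets of the k nearest points, and with `weighted=True` it uses inverse-distance weights instead. Returning an exact match's target directly when a neighbor sits at distance zero is one design choice among several for avoiding division by zero.

```python
import math

def knn_regress(train_X, train_y, query, k=3, weighted=False):
    """Predict a numeric target from the k training points nearest to `query`."""
    # Sort (features, target) pairs by Euclidean distance to the query and keep k
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    if not weighted:
        # Plain mean of the neighbors' targets
        return sum(y for _, y in nearest) / len(nearest)
    # Inverse-distance weighting: each neighbor's weight is 1 / distance
    weighted_pairs = []
    for x, y in nearest:
        d = math.dist(x, query)
        if d == 0:
            return y  # exact match: return its target rather than divide by zero
        weighted_pairs.append((1 / d, y))
    total_weight = sum(w for w, _ in weighted_pairs)
    return sum(w * y for w, y in weighted_pairs) / total_weight

# Hypothetical one-feature data: (x,) -> target
train_X = [(1.0,), (2.0,), (3.0,), (10.0,)]
train_y = [10.0, 20.0, 30.0, 100.0]

print(knn_regress(train_X, train_y, (2.5,), k=3))                           # 20.0
print(round(knn_regress(train_X, train_y, (2.5,), k=3, weighted=True), 2))  # 22.86
```

The weighted prediction is pulled toward 20 and 30 because those neighbors are three times closer to the query than the neighbor at 1.0.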

# Step 2: Preparing Data

## Data Collection

### Importance of quality data

Quality data is the cornerstone of any successful machine learning project. KNN is especially sensitive to it because it predicts directly from the stored training points: a mislabeled or erroneous example can become the nearest neighbor of a new point and sway its prediction. Making sure your dataset is clean, consistent, and free from errors sets a strong foundation for a reliable KNN model.

### Sources of data

Data can be obtained from various sources, each offering unique insights and challenges. Common sources include online repositories, surveys, sensor readings, and databases. Drawing on several sources can give a more complete view of the problem and help the training data cover the range of cases the model will meet, which matters for KNN because it can only predict from examples it has already seen.

## Data Preprocessing

### Normalization

Normalization is a crucial step in preparing data for KNN. It rescales the features to a common range so that no single feature dominates the distance calculations. For example, if one feature is annual income in dollars and another is age in years, unscaled Euclidean distances are driven almost entirely by income. After normalization (for example, min-max scaling to [0, 1] or standardization to zero mean and unit variance), every feature contributes on a comparable scale to the similarity measurement.
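Min-max scaling can be sketched in plain Python as follows. The `min_max_scale` helper and the (age, income) rows are hypothetical; before scaling, the distance between the first two rows is about 20,000, almost all of it from income.

```python
def min_max_scale(rows):
    """Rescale every feature (column) to [0, 1] using that column's min and max."""
    columns = list(zip(*rows))
    mins = [min(col) for col in columns]
    maxs = [max(col) for col in columns]
    scaled = []
    for row in rows:
        scaled.append(tuple(
            # A constant column has no spread; map it to 0.0 instead of dividing by zero
            (v - lo) / (hi - lo) if hi > lo else 0.0
            for v, lo, hi in zip(row, mins, maxs)
        ))
    return scaled

# Hypothetical rows of (age in years, annual income in dollars)
people = [(25, 40_000), (35, 60_000), (45, 80_000)]
print(min_max_scale(people))  # [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]
```

In a real pipeline, compute the minimum and maximum on the training set only and reuse them to scale test points, so no information leaks from the test data.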

### Handling missing values

Missing values are a common challenge in real-world datasets, and KNN cannot compute a distance to a point with gaps in its features. Common strategies are imputation (filling each gap with an estimate such as the column mean) and removing incomplete records. Handling missing values carefully preserves the integrity of the dataset and the reliability of the model's predictions.
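A minimal sketch of mean imputation, assuming missing entries are stored as `None` and every column has at least one observed value; `impute_mean` is a hypothetical helper.

```python
def impute_mean(rows):
    """Replace None entries with the mean of the observed values in the same column."""
    columns = list(zip(*rows))
    means = []
    for col in columns:
        observed = [v for v in col if v is not None]
        # Assumes each column has at least one observed value
        means.append(sum(observed) / len(observed))
    return [tuple(m if v is None else v for v, m in zip(row, means)) for row in rows]

data = [(1.0, 10.0), (None, 20.0), (3.0, None)]
print(impute_mean(data))  # [(1.0, 10.0), (2.0, 20.0), (3.0, 15.0)]
```

For array data, scikit-learn's `sklearn.impute` module offers `SimpleImputer` and `KNNImputer` for the same job.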

# Step 3: Implementing KNN

## Choosing the value of k

When implementing KNN, selecting an appropriate value for 'k' is crucial because it directly affects how well the model predicts. A very small 'k' tends to overfit, since a single noisy neighbor can decide the prediction; a very large 'k' tends to underfit, because the vote is spread over distant points that may belong to other classes.

### Impact of k on performance

The value of 'k' determines how the algorithm generalizes patterns from the training data to new instances. Larger values smooth out the influence of noise in the data, while smaller values preserve fine local structure. For binary classification, choosing an odd 'k' also avoids tied votes. Understanding this trade-off lets you tune the model for better results.
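To make the trade-off concrete, here is a hypothetical toy dataset run through a compact majority-vote classifier (`knn_classify`, an illustrative helper that uses `math.dist`, Python 3.8+). One point inside the "A" cluster is deliberately mislabeled "B" to play the role of noise.

```python
import math
from collections import Counter

def knn_classify(train_X, train_y, query, k):
    """Majority vote among the k training points nearest to `query` (Euclidean distance)."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical data: an "A" cluster whose last point is mislabeled "B", plus a small "B" cluster
train_X = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2), (0.15, 0.15),
           (5.0, 5.0), (5.2, 5.1)]
train_y = ["A", "A", "A", "A", "B", "B", "B"]

near_noise = (0.16, 0.16)
near_b = (5.1, 5.0)
print(knn_classify(train_X, train_y, near_noise, k=1))  # B  (copies the noisy point: overfitting)
print(knn_classify(train_X, train_y, near_noise, k=3))  # A  (the noise is outvoted)
print(knn_classify(train_X, train_y, near_b, k=3))      # B
print(knn_classify(train_X, train_y, near_b, k=7))      # A  (k = every point: global majority, underfitting)
```

With k equal to the whole training set, every query receives the overall majority class, no matter where it lies.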

### Methods to select k

The usual way to choose 'k' is to treat it as a hyperparameter: try a grid of candidate values, estimate each one's accuracy with cross-validation on the training data, and keep the best-scoring value. Comparing candidates this way shows how 'k' affects prediction quality without touching the test set.
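Leave-one-out cross-validation is one way to run that search without a library: each training point is predicted from all the others, and the candidate 'k' with the highest accuracy wins. The helpers (`knn_classify`, `loocv_accuracy`, `select_k`) and the toy data below are hypothetical, chosen to mirror a cluster with one noisy label.

```python
import math
from collections import Counter

def knn_classify(train_X, train_y, query, k):
    """Majority vote among the k training points nearest to `query` (Euclidean distance)."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def loocv_accuracy(X, y, k):
    """Leave-one-out CV: predict each point from all the others; return the fraction correct."""
    correct = 0
    for i in range(len(X)):
        rest_X = X[:i] + X[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        if knn_classify(rest_X, rest_y, X[i], k) == y[i]:
            correct += 1
    return correct / len(X)

def select_k(X, y, candidates):
    """Grid search: the candidate with the best LOOCV accuracy (smallest k on ties)."""
    return max(candidates, key=lambda k: (loocv_accuracy(X, y, k), -k))

# Hypothetical data: an "A" cluster with one mislabeled "B" point, plus a "B" cluster
X = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2), (0.15, 0.15),
     (5.0, 5.0), (5.2, 5.1), (5.1, 5.3)]
y = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(loocv_accuracy(X, y, 1))    # 0.5
print(loocv_accuracy(X, y, 3))    # 0.875
print(select_k(X, y, [1, 3, 5]))  # 3
```

Here k=1 suffers because the noisy point is the nearest neighbor of several "A" points; k=3 lets their true cluster outvote it.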

## Running the Algorithm

Once you have chosen a value for 'k', you can run KNN on your dataset. Libraries such as scikit-learn in Python make implementation straightforward: you feed in the preprocessed data, and KNN classifies or predicts outcomes based on feature similarity.

### Using libraries and tools

Libraries such as scikit-learn provide efficient implementations of KNN behind a user-friendly interface, including tree-based neighbor searches (KD-tree and ball tree) that can reduce the number of distance computations. These tools let you focus on analyzing results rather than on low-level coding details, and they make it quick to experiment with different parameters.
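With scikit-learn, scaling, a grid search over 'k', and the classifier can be combined in one pipeline. This sketch assumes scikit-learn is installed and uses its bundled Iris dataset; the candidate 'k' values and the 75/25 split are arbitrary choices for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Iris ships with scikit-learn, so no download is needed
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Standardize features, then classify by majority vote among the k nearest neighbors
pipeline = make_pipeline(StandardScaler(), KNeighborsClassifier())

# 5-fold cross-validated grid search over candidate k values
search = GridSearchCV(
    pipeline,
    param_grid={"kneighborsclassifier__n_neighbors": [1, 3, 5, 7, 9, 11]},
    cv=5,
)
search.fit(X_train, y_train)

print("best k:", search.best_params_["kneighborsclassifier__n_neighbors"])
print(f"test accuracy: {search.score(X_test, y_test):.3f}")
```

Keeping `StandardScaler` inside the pipeline means it is refit on each cross-validation fold, so the scaling never sees the held-out data. After the search, `GridSearchCV` refits the best pipeline on the full training set, which is what `search.score` evaluates.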

### Interpreting results

Interpreting KNN results involves analyzing how well the algorithm performs on unseen data. By evaluating metrics like accuracy, precision, and recall, you gain insights into its predictive capabilities. Understanding how KNN makes decisions based on neighbor proximity enhances your grasp of its functioning and guides further improvements in model accuracy.
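The metrics mentioned above reduce to simple counts. This sketch computes them for one "positive" class from hypothetical true and predicted labels; `classification_metrics` is an illustrative helper, while scikit-learn's `accuracy_score`, `precision_score`, and `recall_score` in `sklearn.metrics` do the same job in real projects.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy over all classes, plus precision and recall for the class `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)  # true positives
    fp = sum(1 for t, p in pairs if p == positive and t != positive)  # false positives
    fn = sum(1 for t, p in pairs if p != positive and t == positive)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of positive predictions that are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that were found
    return accuracy, precision, recall

y_true = ["spam", "spam", "spam", "ham", "ham", "ham", "ham", "ham"]
y_pred = ["spam", "spam", "ham", "spam", "spam", "ham", "ham", "ham"]
print(classification_metrics(y_true, y_pred, positive="spam"))  # (0.625, 0.5, 0.6666666666666666)
```

Accuracy alone can hide the fact that half of the "spam" predictions here are wrong, which is why precision and recall are worth reporting alongside it.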

Recapping, the k-nearest neighbor algorithm is versatile: it has been applied to predicting patient outcomes, disease risk factors, and personalized treatments in healthcare, as well as to image recognition and finance. The seven steps outlined provide a structured approach to mastering KNN, from understanding its fundamentals to implementing it effectively. Looking ahead, KNN continues to find applications such as cancer diagnosis, self-driving cars, and credit risk analysis, the last of which can inform better portfolio management strategies. Embrace the power of KNN for accurate predictions and informed decision-making.