The k-nearest neighbor algorithm, commonly known as KNN, is a fundamental machine learning technique. Its simplicity and effectiveness make it a popular choice for classification tasks, and understanding its nuances is valuable for aspiring data scientists. MATLAB, a widely used environment for scientific computing, provides built-in functions for implementing KNN models.

# Setting Up MATLAB for KNN

# Installing MATLAB

# System Requirements

To install MATLAB successfully, ensure your computer meets the minimum system requirements. Refer to the Quick Start Guide for detailed instructions on installing MATLAB with an Individual license.

# Installation Steps

1. Download the MATLAB installer from the official website.
2. Run the installer and choose the installation folder.
3. Follow the on-screen instructions to complete the installation process.

# Preparing the Data

# Data Format

When preparing data for KNN in MATLAB, structure it as an N-by-M matrix: N rows, one per data point, and M columns, one per feature.

# Importing Data into MATLAB

Use built-in functions like `readtable` or `csvread` to import data. Verify that the imported data aligns with your dataset structure before proceeding with KNN implementation.
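The import and verification step might look like the sketch below. The file name, column names (`f1`, `f2`, `label`), and values are made up for illustration; the script writes its own small CSV so that it runs as-is. Note that MathWorks documents `csvread` as not recommended in recent releases and suggests `readmatrix` instead, so `readtable` is used here.

```matlab
% Write a tiny, made-up CSV so the example is self-contained.
csvFile = fullfile(tempdir, 'knn_demo.csv');   % hypothetical file name
T0 = table([5.1; 4.9; 6.3; 5.8], [3.5; 3.0; 3.3; 2.7], ...
           {'a'; 'a'; 'b'; 'b'}, ...
           'VariableNames', {'f1', 'f2', 'label'});
writetable(T0, csvFile);

% Import the file and split it into features and labels.
T = readtable(csvFile);
X = T{:, {'f1', 'f2'}};      % N-by-M numeric feature matrix
Y = categorical(T.label);    % N-by-1 class labels

% Check the structure before training anything.
[N, M] = size(X);
fprintf('%d observations, %d features\n', N, M);
assert(numel(Y) == N, 'Need exactly one label per row of X.');
assert(~any(isnan(X(:))), 'X contains missing values.');
```

Keeping the labels in a separate vector `Y` matches the input that MATLAB's classifier-fitting functions generally expect: one feature matrix plus one label per row.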

# Implementing the KNN Algorithm

# Understanding the KNN Algorithm

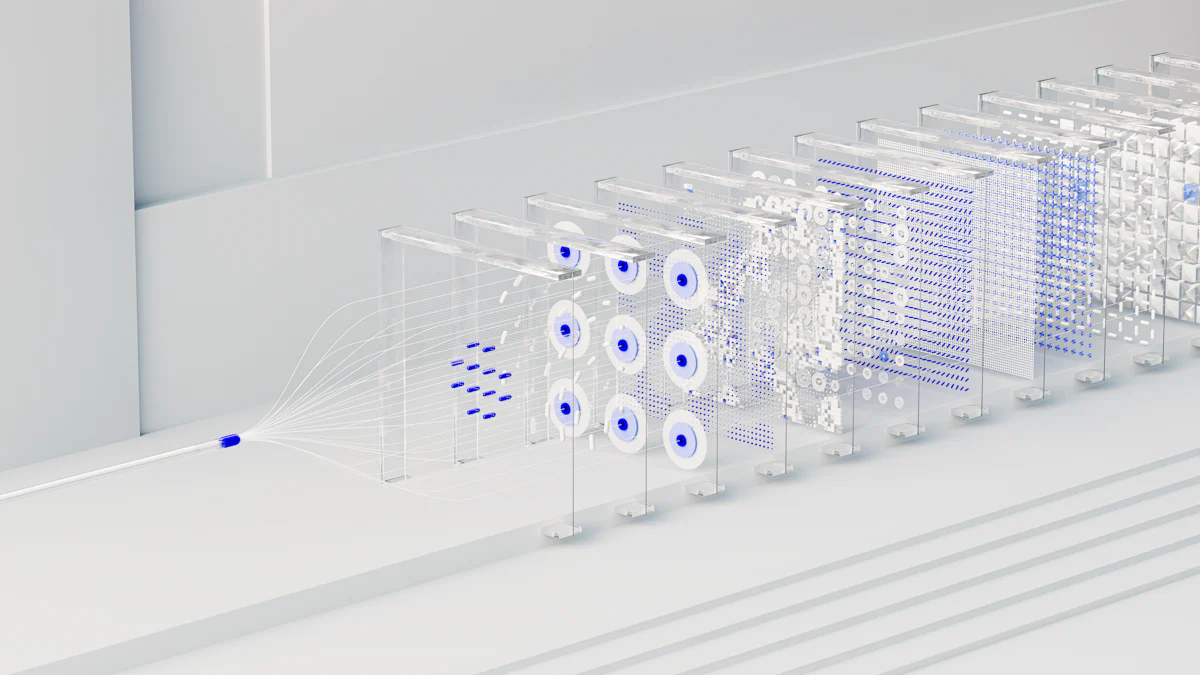

KNN rests on a simple principle: a new data point is classified by finding the K training instances most similar to it and assigning the class that is most common among them. Because it makes no assumption about the shape of the decision boundary, the technique is particularly useful where boundaries are not linear, which makes it a versatile tool for classification tasks. Understanding these concepts is essential before moving on to practical applications.
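To make the principle concrete, here is a minimal from-scratch sketch in plain MATLAB with no toolbox required. The training points, labels, query point, and k = 3 are all made-up values, and Euclidean distance is assumed as the similarity measure.

```matlab
% Toy training set: two features, two classes (made-up values).
Xtrain = [1 1; 1 2; 2 1; 6 6; 7 6; 6 7];
Ytrain = [1; 1; 1; 2; 2; 2];
xNew   = [2 2];    % point to classify
k      = 3;        % number of neighbors that vote

% Euclidean distance from xNew to every training row
% (relies on implicit expansion, available since R2016b).
d = sqrt(sum((Xtrain - xNew).^2, 2));

% Indices of the k closest training points.
[~, order] = sort(d);
nearest = order(1:k);

% Majority vote among the neighbors' labels.
predicted = mode(Ytrain(nearest));
fprintf('Predicted class: %d\n', predicted);   % prints "Predicted class: 1"
```

The three nearest points all belong to class 1, so the vote is unanimous. This brute-force version computes every distance for each query, which is fine for small data but is what library implementations try to speed up.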

Use cases of the KNN algorithm span across multiple domains. From image recognition to recommendation systems, KNN proves its efficacy in diverse fields. For instance, in medical diagnosis, KNN can analyze patient data and predict potential illnesses based on similarities with existing cases. Similarly, in e-commerce, it can recommend products to customers by identifying patterns in their preferences and behaviors.

# Coding the KNN Algorithm in MATLAB

Implementing the KNN algorithm in MATLAB centers on the `fitcknn` function, part of the Statistics and Machine Learning Toolbox. It trains a classifier from labeled data, and the resulting model can then make predictions on new observations. Relying on this built-in functionality lets you focus on interpreting results rather than on low-level coding details.

The workflow is short: pass the feature matrix and the label vector to `fitcknn`, then call `predict` on the trained model with the new observations.
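As one possible illustration, the sketch below trains and evaluates a classifier on the `fisheriris` sample dataset that ships with the Statistics and Machine Learning Toolbox. The 70/30 split, the seed, and k = 5 are arbitrary choices made for this example, not recommendations.

```matlab
% Requires the Statistics and Machine Learning Toolbox.
load fisheriris     % meas: 150-by-4 features, species: 150-by-1 labels

rng(1);             % fix the seed so the split is repeatable
cv = cvpartition(species, 'HoldOut', 0.3);   % stratified 70/30 split

Xtrain = meas(training(cv), :);
Ytrain = species(training(cv));
Xtest  = meas(test(cv), :);
Ytest  = species(test(cv));

% Train a 5-nearest-neighbor classifier on standardized features.
mdl = fitcknn(Xtrain, Ytrain, 'NumNeighbors', 5, 'Standardize', true);

% Predict labels for the held-out rows and measure accuracy.
Ypred = predict(mdl, Xtest);
accuracy = mean(strcmp(Ypred, Ytest));
fprintf('Hold-out accuracy: %.3f\n', accuracy);
```

Standardizing is a design choice worth noting: KNN compares raw distances, so a feature measured on a larger scale would otherwise dominate the neighbor search.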

# Optimizing KNN Parameters

# Choosing the Right K

Impact of K on performance:

A crucial step in optimizing a K-nearest neighbor (KNN) model is selecting an appropriate value for K, because this choice significantly influences the model's performance. A small K can lead to overfitting: the decision boundary follows individual training points and captures noise in the data. A large K may result in underfitting: the vote is averaged over so many neighbors that the model oversimplifies and overlooks local patterns.

Methods to select K:

When determining the optimal K for a KNN algorithm, various methods can be employed. One common approach is cross-validation, where different values of K are tested on subsets of the training data to evaluate their impact on performance. Additionally, techniques like grid search can systematically explore a range of K values to identify the one that yields the best results based on predefined metrics.
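A simple grid search over K with cross-validation could be sketched as follows. The `fisheriris` dataset, the candidate range `1:2:15`, and the 5-fold setup are assumptions chosen for illustration.

```matlab
% Requires the Statistics and Machine Learning Toolbox.
load fisheriris
rng(1);                                   % repeatable fold assignment

kValues = 1:2:15;                         % candidate K values (arbitrary range)
cvLoss  = zeros(size(kValues));
cvp     = cvpartition(species, 'KFold', 5);   % same folds for every K

for i = 1:numel(kValues)
    % Passing 'CVPartition' makes fitcknn return a cross-validated model.
    cvMdl = fitcknn(meas, species, ...
                    'NumNeighbors', kValues(i), ...
                    'Standardize', true, ...
                    'CVPartition', cvp);
    cvLoss(i) = kfoldLoss(cvMdl);         % misclassification rate across folds
end

% Pick the K with the lowest cross-validated loss.
[bestLoss, idx] = min(cvLoss);
bestK = kValues(idx);
fprintf('Best K = %d (CV loss %.3f)\n', bestK, bestLoss);
```

Reusing one partition for every K keeps the comparison fair, since each candidate is scored on identical folds. `fitcknn` also accepts an `'OptimizeHyperparameters'` option that automates a similar search, which may be worth exploring once the manual loop is understood.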

# Distance Metrics

Different distance metrics:

In K-nearest neighbor (KNN) algorithms, choosing an appropriate distance metric is essential for accurate classification. Common distance metrics include Euclidean distance, Manhattan distance, and Minkowski distance. Each metric measures distance differently, which affects which training points are identified as nearest neighbors. Minkowski distance generalizes the other two: an exponent of 1 gives Manhattan distance and an exponent of 2 gives Euclidean distance.
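The three metrics named above can be computed by hand in a few lines of plain MATLAB. The vectors `a` and `b` and the exponent `p = 3` are made-up values for illustration.

```matlab
a = [1 2 3];
b = [4 6 3];
absDiff = abs(a - b);                      % per-feature differences: [3 4 0]

dEuclidean = sqrt(sum(absDiff.^2));        % sqrt(9 + 16 + 0) = 5
dManhattan = sum(absDiff);                 % 3 + 4 + 0 = 7

p = 3;                                     % Minkowski exponent (arbitrary)
dMinkowski = sum(absDiff.^p)^(1/p);        % (27 + 64)^(1/3), about 4.50

% Minkowski with p = 1 and p = 2 reproduces the other two metrics.
minkowski = @(x, y, q) sum(abs(x - y).^q)^(1/q);
sameAsManhattan = abs(minkowski(a, b, 1) - dManhattan) < 1e-12;
sameAsEuclidean = abs(minkowski(a, b, 2) - dEuclidean) < 1e-12;
```

The same pair of points is 5, 7, or about 4.5 units apart depending on the metric, which is why the choice can change which neighbors are selected.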

Choosing the best metric for your data:

Selecting the most suitable distance metric depends on the nature of your dataset and its features. For instance, Euclidean distance works well for continuous numerical data, while Hamming distance is more suitable for categorical variables. Understanding the properties of each metric and their compatibility with your data attributes is crucial in optimizing the performance of your KNN model.
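One way to compare metrics empirically is to cross-validate the same model under each of them, as in the sketch below. It assumes the `fisheriris` dataset, k = 5, and 5 folds, all arbitrary choices. In `fitcknn`, Manhattan distance is named `'cityblock'`, and the Chebyshev metric is spelled `'chebychev'`.

```matlab
% Requires the Statistics and Machine Learning Toolbox.
load fisheriris
rng(1);
cvp = cvpartition(species, 'KFold', 5);    % shared folds for a fair comparison

metrics = {'euclidean', 'cityblock', 'chebychev'};
losses  = zeros(size(metrics));

for i = 1:numel(metrics)
    cvMdl = fitcknn(meas, species, ...
                    'NumNeighbors', 5, ...
                    'Distance', metrics{i}, ...
                    'CVPartition', cvp);
    losses(i) = kfoldLoss(cvMdl);          % cross-validated error rate
    fprintf('%-10s  CV loss = %.3f\n', metrics{i}, losses(i));
end
```

All four iris features are continuous measurements, so the metrics tried here are ones suited to numeric data. A dataset with categorical features would call for a metric such as `'hamming'` instead.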

In summary, implementing the K-nearest neighbor (KNN) algorithm in MATLAB comes down to preparing the data as a feature matrix with matching labels, training a classifier with `fitcknn`, and tuning K and the distance metric.

Hands-on practice and experimentation are the most effective way to master KNN implementation.

For further study, explore advanced resources such as academic papers on optimizing KNN parameters, and take on practical projects to strengthen your skills.