In machine learning, understanding KNN classification is crucial for data scientists and ML engineers. The algorithm is known for its simplicity and makes no assumptions about the underlying data distribution, which lets it perform well on problems where the assumptions of other classifiers do not hold. Mastering KNN in Python offers a gateway to solving real-world problems efficiently. This blog covers the fundamentals of KNN, its implementation in Python, and practical tips for using it well.

# Understanding KNN Classification

To work with KNN classification, it helps to first grasp the core concepts behind the algorithm. KNN stands for k-nearest neighbors, a method that classifies data points based on their similarity to neighboring points. This approach does not make any assumptions about the underlying data distribution, making it particularly useful in scenarios where the decision boundary is highly irregular.

# What is KNN Classification?

# Definition and Basic Concepts

At its essence, KNN classification assigns a class label to an unseen data point based on the majority class of its nearest neighbors. This process involves calculating the distance between the new point and existing data points to determine its classification. By considering the labels of nearby instances, KNN can effectively predict the category of the input data.
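The distance calculation mentioned above can be sketched in a few lines. This is a minimal illustration, assuming numeric feature vectors of equal length; the function names and the sample points are made up for this example, and Euclidean and Manhattan distance are only two of several metrics that could be used.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan_distance(a, b):
    """Sum of absolute coordinate differences between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))

# Hypothetical points, chosen only to make the arithmetic easy to follow.
new_point = (3.0, 4.0)
existing_point = (0.0, 0.0)

print(euclidean_distance(new_point, existing_point))  # 5.0
print(manhattan_distance(new_point, existing_point))  # 7.0
```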

# How KNN Works

The functioning of KNN revolves around proximity-based reasoning. When presented with a new observation, the algorithm identifies its k-nearest neighbors in the training set. These neighbors then collectively decide the class membership of the new point through a voting mechanism. The simplicity and intuitiveness of this process contribute to KNN's popularity in various machine learning applications.
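To make the neighbor search and the vote concrete, here is a small from-scratch sketch using only the standard library. The function name `knn_predict` and the toy points are hypothetical, and the code assumes Euclidean distance and a simple unweighted majority vote; it is meant to illustrate the idea, not to replace a library implementation.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Distance from the query to every training point, paired with that point's label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbors.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Unweighted majority vote over the neighbors' labels.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy training set, made up for illustration: two well-separated clusters.
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (6.5, 7.0), (7.0, 6.0)]
train_labels = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(train_points, train_labels, (1.2, 1.4), k=3))  # A
print(knn_predict(train_points, train_labels, (6.4, 6.2), k=3))  # B
```

With two classes, an odd k avoids tied votes; this sketch does not handle ties in any special way.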

# Advantages and Disadvantages

# Benefits of Using KNN

Flexibility: KNN adapts well to different types of datasets without imposing strong assumptions.

Interpretability: The decision-making process of KNN is transparent and easy to understand.

Versatility: It can handle both classification and regression tasks with relative ease.
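As an illustration of the regression case, the sketch below uses scikit-learn's `KNeighborsRegressor`, which by default predicts the mean target value of the nearest neighbors. The data is a toy example made up for this purpose.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor

# Toy data (made up for illustration): y is exactly 2 * x.
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
y = np.array([2.0, 4.0, 6.0, 8.0, 10.0])

regressor = KNeighborsRegressor(n_neighbors=2)
regressor.fit(X, y)

# The two nearest neighbors of x = 2.4 are x = 2 and x = 3,
# so the prediction is the mean of their targets: (4 + 6) / 2.
prediction = regressor.predict([[2.4]])
print(prediction[0])  # 5.0
```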

# Limitations of KNN

Computational Complexity: Because every training point is retained and compared against each new query at prediction time, KNN becomes computationally intensive as the dataset grows.

Sensitivity to Outliers: Extreme values in the dataset can significantly impact KNN's performance.

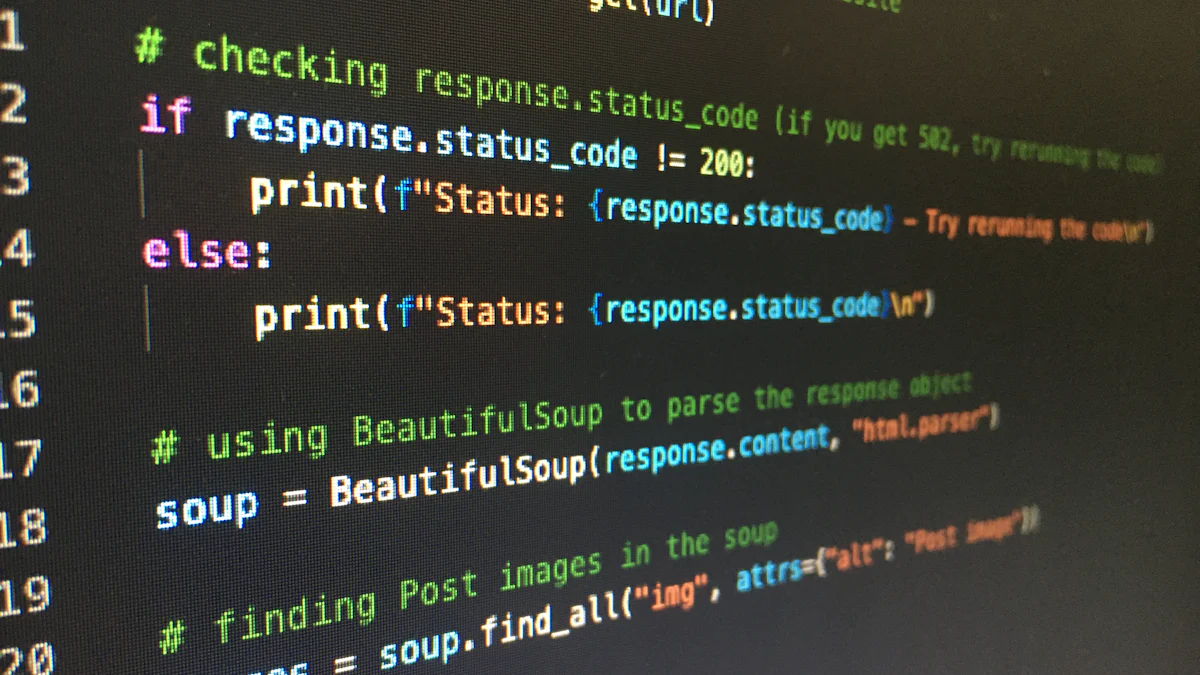

# Implementing KNN in Python

To implement KNN in Python, one must first set up the environment by ensuring the necessary libraries are installed. The scikit-learn library provides a user-friendly interface for various machine learning algorithms, including KNN. Installing this library is essential for seamless implementation.

# Setting Up the Environment

# Required Libraries

Import the required libraries:

- numpy: For efficient numerical operations.
- pandas: For data manipulation and analysis.
- matplotlib: For data visualization.
- scikit-learn: For machine learning implementations.

# Installation Steps

- Install the scikit-learn library using pip:

pip install scikit-learn

- Verify the installation by importing scikit-learn in your Python environment.
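One simple way to verify the installation is to import the package and print its version. Note that the package is installed as `scikit-learn` but imported as `sklearn`.

```python
import sklearn

# If this import succeeds, scikit-learn is available in the current environment.
print(sklearn.__version__)
```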

# Step-by-Step Implementation

Once the environment is set up, proceed with implementing KNN in Python following these steps:

# Data Preparation

Load your dataset into a pandas DataFrame.

Preprocess the data by handling missing values and encoding categorical variables if needed.

Split the dataset into training and testing sets to evaluate model performance accurately.
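The preparation steps above might look like the following sketch. The DataFrame, its column names, and the choice of median imputation and one-hot encoding are all assumptions made for illustration; a real dataset may call for different handling.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy dataset, made up for illustration.
df = pd.DataFrame({
    "age": [25, 32, None, 51, 46, 38, 29, 60],
    "income": [40_000, 52_000, 61_000, None, 88_000, 73_000, 45_000, 95_000],
    "city": ["Paris", "Lyon", "Paris", "Nice", "Lyon", "Nice", "Paris", "Lyon"],
    "purchased": [0, 0, 1, 1, 1, 1, 0, 1],
})

# Handle missing values: fill numeric gaps with the column median.
numeric_cols = ["age", "income"]
df[numeric_cols] = df[numeric_cols].fillna(df[numeric_cols].median())

# Encode the categorical column as one-hot indicator columns.
df = pd.get_dummies(df, columns=["city"])

# Separate the features from the target label.
X = df.drop(columns="purchased")
y = df["purchased"]

# Hold out 25% of the rows for testing; random_state makes the split repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)
print(X_train.shape, X_test.shape)
```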

# Building the KNN Model

Create an instance of the KNeighborsClassifier from scikit-learn.

Fit the model on the training data. KNN is a lazy learner, so fitting mainly stores the training set (and may build a search index) rather than learning explicit parameters.

Adjust hyperparameters like k (number of neighbors) based on model performance.
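A minimal sketch of these steps, assuming the Iris dataset bundled with scikit-learn stands in for your own data and that k = 5 is just a starting value to be tuned later:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Iris is used here only as a convenient stand-in dataset.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# n_neighbors is the k hyperparameter; 5 is an arbitrary starting point.
model = KNeighborsClassifier(n_neighbors=5)
model.fit(X_train, y_train)

# score() returns the mean accuracy on the given data.
test_accuracy = model.score(X_test, y_test)
print(test_accuracy)
```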

# Evaluating the Model

Make predictions on the test set using the trained KNN model.

Assess model performance using evaluation metrics such as accuracy, precision, recall, or F1-score.

Fine-tune parameters or consider feature engineering to enhance model performance further.
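The evaluation steps can be sketched as follows, again assuming Iris as a stand-in dataset. `classification_report` prints precision, recall, and F1-score per class alongside the overall accuracy.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, classification_report
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Stand-in dataset and an arbitrary k, for illustration only.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)
model = KNeighborsClassifier(n_neighbors=5).fit(X_train, y_train)

# Predict labels for the held-out test set.
y_pred = model.predict(X_test)

# Overall accuracy, then per-class precision, recall, and F1-score.
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.3f}")
print(classification_report(y_test, y_pred))
```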

By following these steps diligently, one can successfully implement and leverage KNN classification in Python for various machine learning tasks.

# Tips for Mastery

# Best Practices

When aiming to master KNN classification, selecting the right value for k is paramount. The choice of k significantly influences the model's performance and generalization capabilities. To determine the optimal k, data scientists often resort to techniques like cross-validation, where different values are tested to identify the most suitable one for the dataset at hand.
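One way to run such a search is with scikit-learn's `cross_val_score`. The sketch below assumes the Iris dataset, 5-fold cross-validation, and odd values of k from 1 to 21; these choices are illustrative rather than recommended defaults.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Candidate values of k: odd numbers from 1 to 21.
candidate_ks = range(1, 22, 2)

# Mean 5-fold cross-validated accuracy for each candidate k.
mean_scores = {}
for k in candidate_ks:
    scores = cross_val_score(KNeighborsClassifier(n_neighbors=k), X, y, cv=5)
    mean_scores[k] = scores.mean()

# Pick the k with the highest mean accuracy.
best_k = max(mean_scores, key=mean_scores.get)
print(f"Best k: {best_k} (mean accuracy {mean_scores[best_k]:.3f})")
```

`GridSearchCV` from the same module wraps this loop and is a reasonable alternative when more than one hyperparameter is being tuned.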

Handling large datasets efficiently is another crucial aspect of excelling in KNN classification. With substantial amounts of data, computational resources can be strained, leading to longer processing times. Employing dimensionality reduction techniques or utilizing parallel computing frameworks can streamline operations and enhance the scalability of the model.
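As a rough sketch of both ideas, the pipeline below reduces the feature space with PCA before the neighbor search and sets `n_jobs=-1` so scikit-learn uses all available CPU cores for that search. The digits dataset and the choice of 16 components are assumptions for illustration, and `n_jobs` only parallelizes on a single machine; it is not a distributed computing framework.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

# The digits dataset has 64 features per sample.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

model = make_pipeline(
    PCA(n_components=16),                            # 64 features reduced to 16
    KNeighborsClassifier(n_neighbors=5, n_jobs=-1),  # neighbor search on all cores
)
model.fit(X_train, y_train)

reduced_accuracy = model.score(X_test, y_test)
print(reduced_accuracy)
```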

# Common Pitfalls

Overfitting is a common challenge when working with KNN classification models. It occurs when the algorithm captures noise in the training data as if it were a pattern, resulting in poor performance on unseen data. In KNN this typically happens when k is too small, so that a single noisy neighbor can decide the prediction. To mitigate this issue, practitioners often increase k or apply feature selection so that irrelevant features do not distort the distance calculation.

Conversely, underfitting is another pitfall to be wary of. It occurs when the model is too simplistic to capture the underlying patterns in the data. In KNN this typically happens when k is too large, so that predictions drift toward the overall majority class. To combat underfitting, reducing k or incorporating more relevant features can enhance the model's predictive capabilities.
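One way to spot both pitfalls is to compare training and test accuracy as k varies. The sketch below assumes the Iris dataset and three arbitrary values of k. A large gap between training and test accuracy suggests overfitting, while low accuracy on both suggests underfitting.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Record (training accuracy, test accuracy) for a small, medium, and large k.
results = {}
for k in (1, 15, 100):
    model = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
    results[k] = (model.score(X_train, y_train), model.score(X_test, y_test))

for k, (train_acc, test_acc) in results.items():
    print(f"k={k:>3}  train={train_acc:.3f}  test={test_acc:.3f}")
```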

Revisiting the essence of KNN classification unveils its power in making accurate predictions based on data proximity. To excel in this domain, consistent practice and a thirst for knowledge are indispensable. Embrace real-world challenges to apply your newfound expertise effectively. Remember, mastery is a journey that rewards dedication and application. Keep honing your skills, and watch as your understanding of KNN in Python transforms into impactful solutions for diverse projects.