KNN modeling is one of the oldest and simplest algorithms for pattern classification and text recognition, and it often performs competitively despite that simplicity. Because it fits no explicit model and simply stores labeled examples, it is easy to set up and easy to update as new labeled data arrives. It has been applied in the healthcare industry, for example to help predict risks such as heart attacks and prostate cancer from gene expression data. KNN is also a basic tool in Computer Vision and Content Recommendation, where it is used for tasks such as image recognition and video analysis.

# Understanding KNN

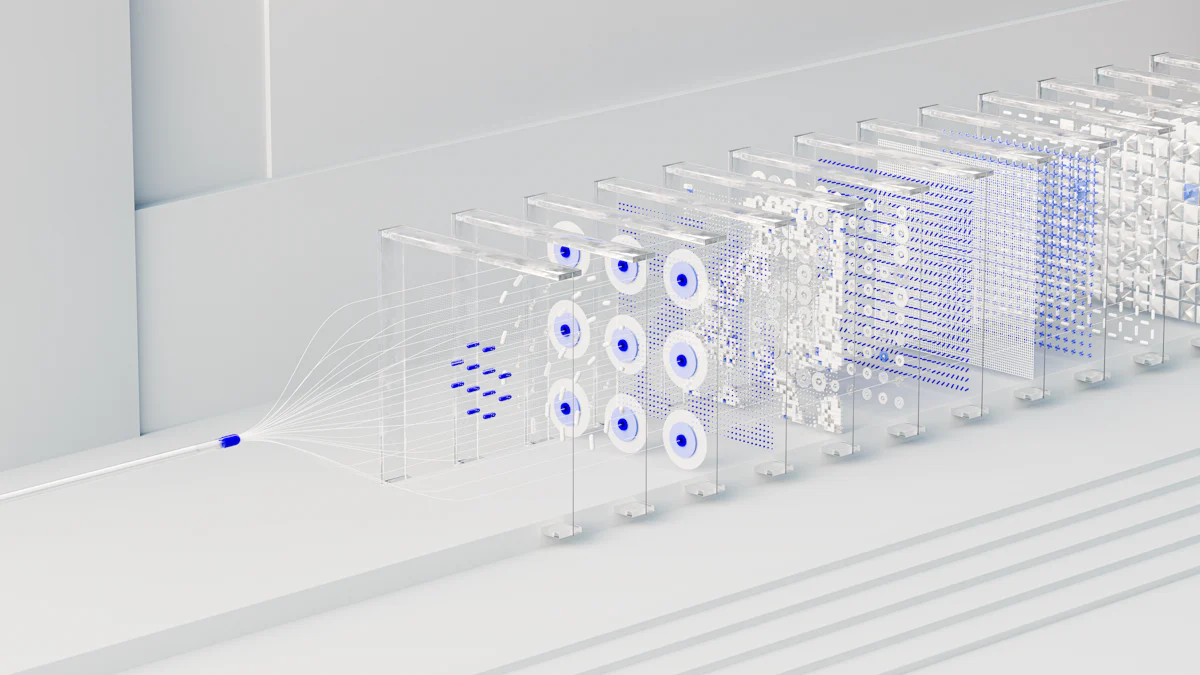

KNN, or K-Nearest Neighbors, is a straightforward yet powerful machine learning algorithm. It classifies a new data point by the majority class among its k nearest neighbors in the training data. Its key characteristics are simplicity, efficiency on small datasets, and sensitivity to irrelevant features, since every feature contributes to the distance calculation.
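The majority-vote rule can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation; the function names `euclidean` and `knn_classify` and the toy data are hypothetical.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_classify(train_X, train_y, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    # Sort training indices by distance to the query and keep the k closest.
    nearest = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], query))[:k]
    votes = Counter(train_y[i] for i in nearest)
    # Ties are broken in favor of the label seen first in distance order.
    return votes.most_common(1)[0][0]

# Toy data: two loose clusters labelled "a" and "b".
X = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [6.0, 6.0], [7.0, 7.5], [6.5, 5.5]]
y = ["a", "a", "a", "b", "b", "b"]
print(knn_classify(X, y, [1.2, 1.4], k=3))  # a
print(knn_classify(X, y, [6.8, 6.2], k=3))  # b
```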

For measuring distance, KNN most commonly uses Euclidean distance to determine how close two data points are. KNN handles both classification and regression: for classification it takes a majority vote among the neighbors' labels, and for regression it averages the neighbors' target values. In text categorization, for instance, it assigns a document to a category based on its similarity to documents that are already labeled.
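A short sketch of the two ideas in that paragraph: Euclidean distance (with Manhattan distance shown as one common alternative) and KNN regression, where the prediction is the mean target of the k nearest points. The helper names and the toy size/price data are hypothetical.

```python
import math

def euclidean(a, b):
    """Square root of the summed squared coordinate differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """Sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def knn_regress(train_X, train_y, query, k=3, distance=euclidean):
    """Predict a numeric target as the mean target of the k nearest training points."""
    nearest = sorted(range(len(train_X)), key=lambda i: distance(train_X[i], query))[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)

# Hypothetical toy data: house size (one feature) -> price.
X = [[1.0], [1.2], [1.5], [3.0], [3.2], [3.5]]
y = [100.0, 110.0, 130.0, 300.0, 310.0, 330.0]
print(euclidean([0, 0], [3, 4]))      # 5.0
print(manhattan([0, 0], [3, 4]))      # 7
print(knn_regress(X, y, [1.1], k=2))  # 105.0
```

The `distance` parameter makes the metric swappable, which is useful because the best choice depends on the data.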

KNN has a wide range of applications. On e-commerce platforms and streaming services, for example, it supports personalized recommendations by suggesting items that similar users have engaged with, based on user behavior patterns.
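One way to apply nearest neighbors to recommendations is user-based: find the k users whose rating vectors are most similar to the target user, then suggest items those neighbors rated that the target has not. This is a minimal sketch under stated assumptions: similarity is cosine similarity, `0` means "not rated" in a toy matrix, and the `recommend` helper is hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity of two rating vectors (0.0 if either vector is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def recommend(ratings, user, k=2):
    """Rank the user's unrated items by the summed ratings of the k most similar users."""
    target = ratings[user]
    others = [u for u in ratings if u != user]
    neighbors = sorted(others, key=lambda u: cosine(target, ratings[u]), reverse=True)[:k]
    scores = {}
    for item, r in enumerate(target):
        if r == 0:  # 0 means "not rated" in this toy matrix
            s = sum(ratings[u][item] for u in neighbors)
            if s > 0:
                scores[item] = s
    return sorted(scores, key=scores.get, reverse=True)

ratings = {
    "ann": [5, 4, 0, 0],
    "bob": [5, 5, 4, 0],
    "cat": [4, 5, 5, 1],
    "dan": [0, 0, 1, 5],
}
print(recommend(ratings, "ann", k=2))  # [2, 3]
```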

# Implementing KNN

The first step in implementing KNN is preparing the data. Data cleaning removes inconsistencies and errors, such as missing values and duplicate records, that could degrade the model's performance. Feature selection then keeps the most relevant attributes; this matters especially for KNN, because irrelevant features still contribute to every distance calculation.
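A minimal data-preparation sketch, assuming rows are lists of numbers with `None` marking a missing value. Cleaning drops incomplete and duplicate rows; as one simple feature-selection heuristic (an assumption, not the only option), columns with no variance are removed because they add nothing to distances. Both helpers are hypothetical.

```python
def clean_rows(rows):
    """Drop rows with missing values (None) and exact duplicates, keeping first occurrences."""
    seen = set()
    cleaned = []
    for row in rows:
        key = tuple(row)
        if any(v is None for v in row) or key in seen:
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned

def variance(values):
    """Population variance of a list of numbers."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def drop_low_variance(rows, threshold=0.0):
    """Keep columns whose variance exceeds `threshold`; return (kept indices, reduced rows)."""
    n_cols = len(rows[0])
    keep = [j for j in range(n_cols) if variance([r[j] for r in rows]) > threshold]
    return keep, [[r[j] for j in keep] for r in rows]

data = [
    [1.0, 5.0, 2.0],
    [2.0, 5.0, None],  # missing value -> dropped
    [1.0, 5.0, 2.0],   # duplicate -> dropped
    [3.0, 5.0, 4.0],
]
rows = clean_rows(data)
print(rows)           # [[1.0, 5.0, 2.0], [3.0, 5.0, 4.0]]
keep, reduced = drop_low_variance(rows)
print(keep, reduced)  # [0, 2] [[1.0, 2.0], [3.0, 4.0]]
```

Column 1 is constant, so it cannot help distinguish neighbors and is dropped. Selecting features by their relationship to the labels is a stronger approach when labels are available.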

Building the model starts with choosing k. The value of k directly controls the model's behavior: a small k makes predictions sensitive to noise, while a large k smooths the decision boundary but can blur real class distinctions. For binary classification, an odd k avoids tied votes. Training a KNN model is unusually simple: the algorithm just stores the labeled examples, and the actual work of comparing a query against existing data points happens at prediction time.
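The "lazy" nature of KNN training is easiest to see in a small class where `fit` only stores data and `predict` does all the distance work. `SimpleKNN` is a hypothetical illustration (it uses `math.dist`, available since Python 3.8), not the API of any particular library.

```python
import math
from collections import Counter

class SimpleKNN:
    """Minimal KNN classifier: fit() only stores data; predict() does the distance work."""

    def __init__(self, k=3):
        if k < 1:
            raise ValueError("k must be at least 1")
        self.k = k

    def fit(self, X, y):
        # "Training" is just memorizing the labeled examples.
        self.X = [list(map(float, row)) for row in X]
        self.y = list(y)
        return self

    def _predict_one(self, q):
        order = sorted(range(len(self.X)), key=lambda i: math.dist(self.X[i], q))
        votes = Counter(self.y[i] for i in order[: self.k])
        return votes.most_common(1)[0][0]

    def predict(self, queries):
        return [self._predict_one(q) for q in queries]

X = [[0, 0], [0, 1], [1, 0], [1, 1], [5, 5], [5, 6], [6, 5]]
y = ["a", "a", "a", "a", "b", "b", "b"]
for k in (1, 3, 7):
    print(k, SimpleKNN(k=k).fit(X, y).predict([[3.2, 3.2]]))
# 1 ['b']
# 3 ['b']
# 7 ['a']
```

With k = 7 every training point votes, so the prediction collapses to the overall majority class; this is one concrete way an overly large k blurs class distinctions.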

Once the model is built, it must be evaluated. Metrics such as accuracy, combined with cross-validation techniques, show how well the model generalizes to unseen data and guide adjustments such as changing k or the distance measure.
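Cross-validation can be used to pick k by comparing mean held-out accuracy across candidates. This sketch assumes interleaved folds (index `i` goes to fold `i % n_folds`); shuffled or stratified folds are common alternatives. The helper names and data are hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, q, k):
    """Majority vote among the k nearest training points."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], q))
    return Counter(train_y[i] for i in order[:k]).most_common(1)[0][0]

def cross_val_accuracy(X, y, k, n_folds=3):
    """Mean accuracy over folds; fold f holds out every index i with i % n_folds == f."""
    scores = []
    for f in range(n_folds):
        test_idx = [i for i in range(len(X)) if i % n_folds == f]
        train_idx = [i for i in range(len(X)) if i % n_folds != f]
        tr_X = [X[i] for i in train_idx]
        tr_y = [y[i] for i in train_idx]
        correct = sum(knn_predict(tr_X, tr_y, X[i], k) == y[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / n_folds

def choose_k(X, y, candidates, n_folds=3):
    """Candidate k with the highest cross-validated accuracy (smallest k wins ties)."""
    return max(candidates, key=lambda k: (cross_val_accuracy(X, y, k, n_folds), -k))

# Two well-separated clusters with alternating labels, so every fold is balanced.
X = [[0, 0], [10, 10], [0, 1], [10, 11], [1, 0], [11, 10],
     [1, 1], [11, 11], [0.5, 0.5], [10.5, 10.5], [0, 2], [10, 12]]
y = ["a", "b"] * 6
for k in (1, 3, 5):
    print(k, cross_val_accuracy(X, y, k))
print("chosen k:", choose_k(X, y, [1, 3, 5]))
```

On data this cleanly separated every candidate scores perfectly, so the tie-break decides; on real data the scores usually differ and reveal where small k overfits or large k oversmooths. Breaking ties toward the smallest k is an arbitrary choice here.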

In practice, experimenting with different sampling methods and parameter settings builds intuition for how KNN behaves and surfaces the challenges that may arise during implementation, helping practitioners make informed choices.

# Tips for Effective KNN

# Optimizing Performance

Scaling the data is crucial for KNN's accuracy. Normalizing the features puts them on comparable ranges, so each feature contributes fairly to the distance calculations and attributes with larger magnitudes do not dominate.
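The effect of scaling is easy to demonstrate: with raw age (years) and income (currency units), income swamps the distance, and the nearest neighbor changes after min-max scaling. The scaler is fitted on the training rows and then applied to the query. The data and helper names are hypothetical; standardization (z-scores) is a common alternative to min-max scaling.

```python
import math

def fit_min_max(X):
    """Per-column minimum and maximum, learned from the training data."""
    cols = list(zip(*X))
    return [min(c) for c in cols], [max(c) for c in cols]

def apply_min_max(X, lo, hi):
    """Rescale each column to [0, 1] using fitted bounds; constant columns map to 0."""
    return [[(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
            for row in X]

def nearest_index(X, q):
    """Index of the training row closest to q (Euclidean distance)."""
    return min(range(len(X)), key=lambda i: math.dist(X[i], q))

# Columns: age, income (hypothetical values).
X = [[25, 50000], [60, 51000], [40, 80000]]
q = [26, 52000]
print(nearest_index(X, q))  # 1: income difference dominates, despite a 34-year age gap

lo, hi = fit_min_max(X)
X_scaled = apply_min_max(X, lo, hi)
q_scaled = apply_min_max([q], lo, hi)[0]
print(nearest_index(X_scaled, q_scaled))  # 0: both features now count
```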

Handling imbalanced data is essential for accurate predictions. When one class heavily outnumbers the others, it tends to dominate every neighborhood. Oversampling the minority class or undersampling the majority class rebalances the class distribution, so that KNN is less biased toward the majority class.
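Random oversampling, one of the techniques mentioned above, duplicates randomly chosen minority-class rows until every class matches the largest class. This is a minimal sketch with a hypothetical `random_oversample` helper and a fixed seed for reproducibility.

```python
import random
from collections import Counter

def random_oversample(X, y, seed=0):
    """Duplicate random rows of smaller classes until all classes match the largest count."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, n in counts.items():
        members = [row for row, lab in zip(X, y) if lab == label]
        for _ in range(target - n):
            X_out.append(rng.choice(members))
            y_out.append(label)
    return X_out, y_out

X = [[0.0], [0.1], [0.2], [0.3], [0.4], [5.0]]
y = ["common"] * 5 + ["rare"]
Xb, yb = random_oversample(X, y)
print(dict(Counter(yb)))  # {'common': 5, 'rare': 5}
```

For KNN, duplicated rows are identical points, so they simply raise the chance that the rare class appears among a query's neighbors. Resampling should be applied to the training split only, so that copies of a row never leak into the evaluation data.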

# Avoiding Common Pitfalls

Overfitting can hurt the performance of KNN models. In KNN it typically occurs when k is too small: with k = 1, every prediction follows the single closest training point, including noisy or mislabeled ones. Increasing k and removing irrelevant features through feature selection help prevent overfitting and improve generalization.
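A small experiment shows overfitting at k = 1: a single mislabeled point inside the "a" cluster captures nearby queries, and training accuracy is a perfect but misleading 1.0 because every training point is its own nearest neighbor. The data and helpers are hypothetical.

```python
import math
from collections import Counter

def knn_predict(X, y, q, k):
    """Majority vote among the k nearest points."""
    order = sorted(range(len(X)), key=lambda i: math.dist(X[i], q))
    return Counter(y[i] for i in order[:k]).most_common(1)[0][0]

def training_accuracy(X, y, k):
    """Accuracy on the training points themselves (each point counts as its own neighbor)."""
    return sum(knn_predict(X, y, x, k) == label for x, label in zip(X, y)) / len(X)

X = [[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5], [5, 5], [5, 6], [6, 5]]
y = ["a", "a", "a", "a", "b", "b", "b", "b"]  # [0.5, 0.5] is a mislabeled noise point

query = [0.6, 0.6]
print(knn_predict(X, y, query, k=1))  # b: follows the noise point
print(knn_predict(X, y, query, k=5))  # a: the surrounding cluster outvotes it
print(training_accuracy(X, y, 1))     # 1.0 (memorization)
print(training_accuracy(X, y, 5))     # 0.875
```

The lower training accuracy at k = 5 comes from refusing to memorize the noise point, which is why k should be chosen on held-out data rather than on training accuracy.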

High dimensionality poses a challenge for KNN because of the curse of dimensionality: as the number of features grows, the distances from a point to its nearest and farthest neighbors become increasingly similar, so "nearest" carries less information. Dimensionality reduction methods such as Principal Component Analysis (PCA) or feature extraction reduce computational cost and make distances more informative.
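The curse of dimensionality can be observed directly by measuring the relative contrast `(farthest - nearest) / nearest` between a random query and random points in the unit hypercube. This sketch only illustrates the problem; it does not implement PCA (in practice a library implementation such as scikit-learn's `PCA` would typically be used). The function name and parameters are hypothetical.

```python
import math
import random

def relative_contrast(dim, n_points=200, seed=0):
    """(farthest - nearest) / nearest distance from a random query to random points in [0, 1]^dim."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    query = [rng.random() for _ in range(dim)]
    dists = [math.dist(p, query) for p in points]
    return (max(dists) - min(dists)) / min(dists)

for d in (2, 10, 100, 1000):
    print(d, round(relative_contrast(d), 3))
```

The printed contrast shrinks sharply as `d` grows: in high dimensions the nearest point is barely closer than the farthest, which weakens the premise KNN relies on.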

# Advanced Techniques

Weighted KNN assigns each neighbor a weight based on its distance from the query point, commonly the inverse of that distance. Closer neighbors therefore have a larger influence on the prediction, which can improve accuracy when some neighbors are more relevant than others.
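A sketch of inverse-distance weighting, assuming weights of `1 / distance` and returning the label of an exact match directly to avoid division by zero. The helper name and data are hypothetical.

```python
import math
from collections import defaultdict

def weighted_knn_classify(X, y, q, k=3, eps=1e-12):
    """Vote among the k nearest neighbors, each weighted by 1 / distance."""
    nearest = sorted(range(len(X)), key=lambda i: math.dist(X[i], q))[:k]
    scores = defaultdict(float)
    for i in nearest:
        d = math.dist(X[i], q)
        if d < eps:  # exact match: its label wins outright
            return y[i]
        scores[y[i]] += 1.0 / d
    return max(scores, key=scores.get)

# One close "a" neighbor versus two farther "b" neighbors.
X = [[1.0, 0.0], [3.0, 0.0], [3.2, 0.0]]
y = ["a", "b", "b"]
print(weighted_knn_classify(X, y, [0.0, 0.0], k=3))  # a
```

A plain majority vote with k = 3 would return "b" (two votes to one), but the weighted scores are 1.0 for "a" versus about 0.65 for "b", so the single close neighbor prevails.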

KNN can also be combined with other algorithms, such as Decision Trees or Random Forests, to leverage the strengths of each method. Ensemble techniques that combine KNN with other algorithms can improve predictive performance by drawing on diverse modeling approaches.
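A minimal hard-voting ensemble sketch: KNN, a decision stump (a depth-1 decision tree, standing in for the tree-based models mentioned above), and a nearest-centroid classifier each predict, and the majority label wins. All helpers and data are hypothetical; libraries such as scikit-learn provide `VotingClassifier` for real use.

```python
import math
from collections import Counter

def knn_model(X, y, k=3):
    """Return a predict function for majority-vote KNN."""
    def predict(q):
        order = sorted(range(len(X)), key=lambda i: math.dist(X[i], q))[:k]
        return Counter(y[i] for i in order).most_common(1)[0][0]
    return predict

def nearest_centroid_model(X, y):
    """Predict the label whose class mean is closest to the query."""
    centroids = {}
    for label in sorted(set(y)):
        rows = [x for x, lab in zip(X, y) if lab == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return lambda q: min(centroids, key=lambda lab: math.dist(centroids[lab], q))

def stump_model(X, y):
    """Depth-1 decision tree: the single feature/threshold split with the best training accuracy."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({x[j] for x in X}):
            left = [lab for x, lab in zip(X, y) if x[j] <= t]
            right = [lab for x, lab in zip(X, y) if x[j] > t]
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0] if right else l_lab
            acc = sum(lab == l_lab for lab in left) + sum(lab == r_lab for lab in right)
            if best is None or acc > best[0]:
                best = (acc, j, t, l_lab, r_lab)
    _, j, t, l_lab, r_lab = best
    return lambda q: l_lab if q[j] <= t else r_lab

def voting_ensemble(models):
    """Hard voting: the most common prediction among the models."""
    return lambda q: Counter(m(q) for m in models).most_common(1)[0][0]

X = [[0, 0], [0, 1], [1, 0], [1, 1], [5, 5], [5, 6], [6, 5], [6, 6]]
y = ["a"] * 4 + ["b"] * 4
ensemble = voting_ensemble([knn_model(X, y), stump_model(X, y), nearest_centroid_model(X, y)])
print(ensemble([0.8, 0.9]))  # a
print(ensemble([5.5, 5.2]))  # b
```

Using three models with two classes guarantees that hard voting never ties.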

To summarize, mastering KNN involves understanding its core principles and implementing best practices for optimal results.

Practice is key in honing your skills with KNN modeling, as hands-on experience enhances proficiency and problem-solving abilities.

For further learning, exploring advanced techniques like weighted KNN and combining KNN with other algorithms can broaden your understanding of machine learning methodologies.