Falcon is a family of open large language models from the Technology Innovation Institute (TII), suited to tasks such as text generation and question answering. Python, with its extensive AI/ML ecosystem, is the natural way to deploy it. This guide walks through setting up an environment, loading the model, running inference, and fine-tuning Falcon with Python.

# Setting Up the Environment

Before deploying the Falcon model, make sure the necessary libraries are installed and the environment is configured correctly.

# Installing Required Libraries

Python and Pip: Start by installing Python and Pip. Python is the language used to load and run Falcon, and Pip is the package installer used to add the libraries listed here.

Hugging Face Transformers: The second library to install is Hugging Face Transformers (`pip install transformers`). It provides access to a wide range of pre-trained models, including Falcon, and simplifies working with state-of-the-art natural language processing models. Transformers also needs a deep learning backend such as PyTorch.
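A quick way to confirm the installation is to check which packages can be imported. The sketch below uses only the standard library; the package list (`transformers`, `torch`, `accelerate`) is an assumption about what this walkthrough needs, not a requirement stated by the Falcon authors.

```python
from importlib import metadata, util

# Assumed package set for this walkthrough: model code, backend, device placement.
REQUIRED = ["transformers", "torch", "accelerate"]


def missing_packages(names):
    """Return the names from `names` that cannot be imported here."""
    return [name for name in names if util.find_spec(name) is None]


def installed_version(dist_name):
    """Return the installed version of a distribution, or None if absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None
```

Calling `missing_packages(REQUIRED)` returns an empty list when everything is in place; anything it does return can be installed with Pip.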

# Configuring the Environment

After installing the required libraries, the next step is to configure the environment effectively to deploy the Falcon model successfully.

# Setting Up Virtual Environment

Creating a virtual environment isolates your project dependencies and ensures that different projects can have their own sets of libraries without conflicts. It's a best practice in Python development to maintain a clean and organized environment.
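Virtual environments are usually created from the command line, but the same thing can be done from Python with the standard `venv` module. This is a minimal sketch; the directory name `falcon-env` in the usage note is only an example.

```python
import venv
from pathlib import Path


def create_env(path, with_pip=True):
    """Create a virtual environment at `path` and return its config file path."""
    builder = venv.EnvBuilder(with_pip=with_pip)
    builder.create(path)
    # Every environment created by venv contains a pyvenv.cfg file at its root.
    return Path(path) / "pyvenv.cfg"
```

For example, `create_env("falcon-env")` creates the environment in the current directory. Activate it from your shell before installing the libraries so they land inside the environment.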

# Downloading Falcon Model

Once the virtual environment is set up, download the Falcon model. The weights are hosted on the Hugging Face model hub, where several versions are available for different use cases, such as the 7B and 40B parameter sizes in base and instruction-tuned variants.

With these steps complete, the environment is ready for deploying Falcon.
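Downloading ahead of time can be sketched with the `huggingface_hub` library, which Transformers installs as a dependency. The repository ids follow the `tiiuae/falcon-<size>` naming used on the hub; the helper names are hypothetical and exist only for this example.

```python
def falcon_repo_id(size="7b", instruct=False):
    """Build a hub repository id such as 'tiiuae/falcon-7b-instruct'."""
    if size not in {"7b", "40b"}:
        raise ValueError(f"unsupported size: {size!r}")
    suffix = "-instruct" if instruct else ""
    return f"tiiuae/falcon-{size}{suffix}"


def download_falcon(size="7b", instruct=False, cache_dir=None):
    """Download the model files and return the local snapshot directory."""
    # Imported lazily so falcon_repo_id works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=falcon_repo_id(size, instruct),
        cache_dir=cache_dir,
    )
```

An explicit download is optional: loading the model by its repository id fetches and caches the files on first use.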

# Deploying the Falcon Model

# Loading the Model

To load the Falcon model, you can write a Python script against the model classes directly or use Hugging Face Pipelines. Both approaches initialize the model inside a Python process; they differ in how much control they expose.

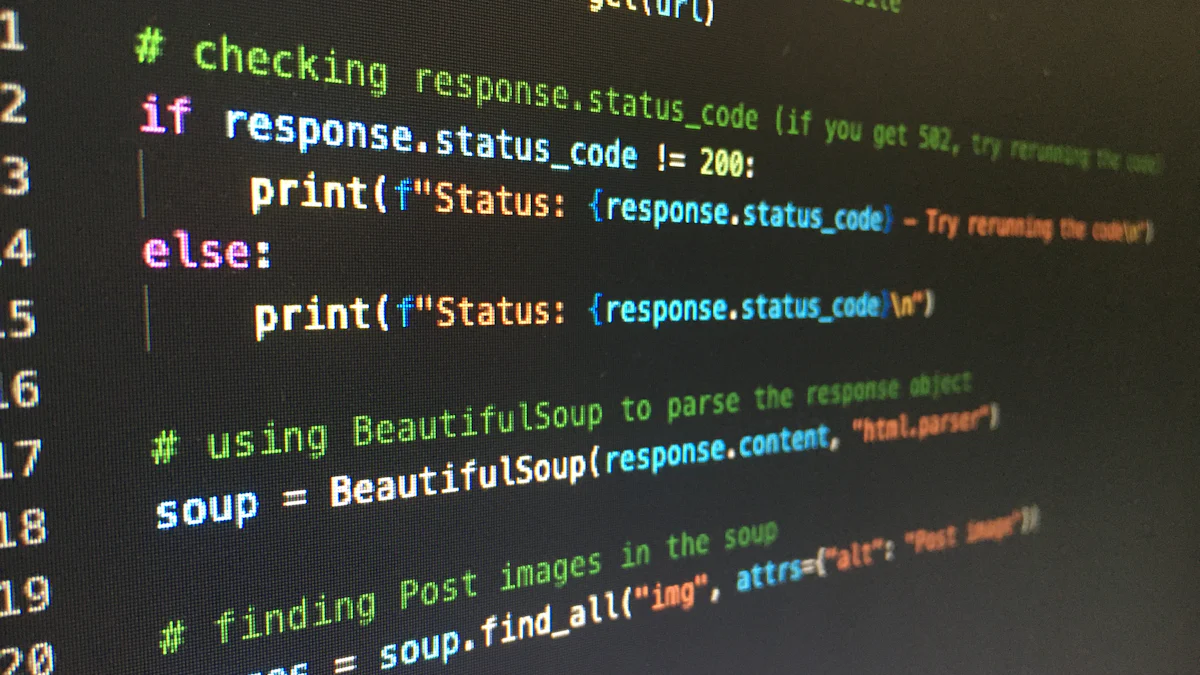

# Using Python Scripts

A Python script can download the Falcon model from the hub and instantiate it locally. Working with the tokenizer and model objects directly gives the most control over loading options, such as precision and device placement, and over generation settings.
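A script-based load might look like the following sketch. It assumes `transformers`, `torch`, and `accelerate` are installed and uses `tiiuae/falcon-7b-instruct` as an example checkpoint; the function names are illustrative, and the keyword arguments follow the Transformers releases that shipped Falcon support, so newer versions may prefer different names.

```python
def load_falcon(model_id="tiiuae/falcon-7b-instruct"):
    """Return (tokenizer, model) for a Falcon checkpoint."""
    # Heavy imports are kept inside the function so the module imports cheaply.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,  # 2 bytes per weight instead of 4
        device_map="auto",           # needs accelerate; spreads layers over devices
    )
    return tokenizer, model


def generate(tokenizer, model, prompt, max_new_tokens=50):
    """Tokenize `prompt`, generate a continuation, and decode it to text."""
    inputs = tokenizer(
        prompt,
        return_tensors="pt",
        return_token_type_ids=False,  # Falcon's forward pass does not use them
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A typical call is `tokenizer, model = load_falcon()` followed by `generate(tokenizer, model, "Write a haiku about falcons.")`.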

# Using Hugging Face Pipelines

Alternatively, Hugging Face Pipelines offer a more streamlined approach. A pipeline bundles tokenization, generation, and decoding behind a single call, so you can use Falcon for natural language processing tasks without managing those steps yourself.
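The pipeline route can be sketched as below. The settings are kept in a plain dictionary so they are easy to inspect or adjust; `pipeline_settings` and `build_generator` are names made up for this example, and the checkpoint is again an assumed default.

```python
def pipeline_settings(model_id="tiiuae/falcon-7b-instruct"):
    """Keyword arguments passed to transformers.pipeline for text generation."""
    return {
        "task": "text-generation",
        "model": model_id,
        "torch_dtype": "auto",  # use the precision stored in the checkpoint config
        "device_map": "auto",   # needs accelerate; places the model on available devices
    }


def build_generator(model_id="tiiuae/falcon-7b-instruct"):
    """Create a text-generation pipeline for the given checkpoint."""
    from transformers import pipeline  # lazy import: only needed when loading

    return pipeline(**pipeline_settings(model_id))
```

The returned object is callable: `build_generator()("Hello")` returns a list of dictionaries, each with a `generated_text` entry.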

# Running Inference

Once the Falcon model is loaded, you can run inference for text generation and question answering, two tasks that show the range of NLP work it can handle.

# Text Generation

Text generation produces a continuation of a prompt. Falcon can write creative narratives or informative passages, and generation settings such as sampling temperature and output length control how varied and how long the result is.
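As a rough illustration, the helper below wraps a text-generation pipeline and exposes the most common sampling settings. It takes the pipeline as an argument, so it can be used with any callable that returns the same output shape; the default values are arbitrary starting points, not tuned recommendations.

```python
def generate_text(generator, prompt, max_new_tokens=100, temperature=0.7, top_k=10):
    """Generate one sampled continuation of `prompt` and return it as a string."""
    outputs = generator(
        prompt,
        max_new_tokens=max_new_tokens,  # upper bound on generated length
        do_sample=True,                 # sample instead of greedy decoding
        temperature=temperature,        # lower values give more predictable text
        top_k=top_k,                    # sample only from the k most likely tokens
        num_return_sequences=1,
    )
    # A text-generation pipeline returns a list of {"generated_text": ...} dicts.
    return outputs[0]["generated_text"]
```

By default the returned text includes the prompt followed by the generated continuation.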

# Question Answering

For question answering, Falcon generates an answer conditioned on a prompt that contains the question and, optionally, a supporting context passage. Because it is a generative model trained on large text corpora, the answer is produced as free text rather than extracted as a span from the context.

With the model loaded and inference working, Falcon can be applied to a broad range of natural language processing applications.
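One simple way to do this is to place the context and question in a prompt template and let the model complete the answer. The template wording here is an assumption for illustration, not a format prescribed for Falcon, and the helper names are hypothetical.

```python
QA_TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Context: {context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_qa_prompt(context, question):
    """Fill the template with a context passage and a question."""
    return QA_TEMPLATE.format(context=context.strip(), question=question.strip())


def answer_question(generator, context, question, max_new_tokens=64):
    """Ask a text-generation pipeline to answer `question` from `context`."""
    outputs = generator(
        build_qa_prompt(context, question),
        max_new_tokens=max_new_tokens,
        do_sample=False,         # greedy decoding for a repeatable answer
        return_full_text=False,  # return only the new text, not the prompt
    )
    return outputs[0]["generated_text"].strip()
```

Greedy decoding is used because a factual answer usually benefits from being deterministic; sampling can be re-enabled for more varied phrasing.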

# Fine-Tuning and Optimization

# Fine-Tuning with LoRA

Falcon can be adapted to specific tasks through fine-tuning with LoRA (Low-Rank Adaptation). LoRA keeps the pre-trained weights frozen and trains small low-rank matrices added to selected layers, which tailors the model to a task at a fraction of the cost of full fine-tuning.
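To see why LoRA is cheap, consider one weight matrix of shape `d_out × d_in`. LoRA leaves it untouched and learns two smaller matrices, `B` (`d_out × r`) and `A` (`r × d_in`), whose product is added to the original. The sketch below just counts parameters; the layer size in the usage note is an example, not Falcon's actual dimension.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Parameters in one LoRA adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * d_in + d_out * rank


def full_params(d_in, d_out):
    """Parameters in the frozen weight matrix the adapter is attached to."""
    return d_in * d_out


def trainable_fraction(d_in, d_out, rank):
    """Share of the layer's parameters that LoRA actually trains."""
    return lora_trainable_params(d_in, d_out, rank) / full_params(d_in, d_out)
```

For a 4096 × 4096 layer at rank 8, the adapter has 65,536 trainable parameters against 16,777,216 in the full matrix, roughly 0.4%.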

# Benefits of Fine-Tuning

Increased Precision: Fine-tuning Falcon with LoRA on task-specific data can improve accuracy on that task compared with the base model.

Task Customization: Fine-tuning lets you optimize the model for a specific application, such as a particular domain, writing style, or output format.

Enhanced Performance: Because only the small adapter matrices are trained, LoRA delivers these gains with far less GPU memory and storage than updating every parameter.

# Steps to Fine-Tune

Data Preparation: Curate a dataset that matches the target task, and hold out a portion of it for validation.

Model Configuration: Choose the LoRA settings (rank, scaling factor, target layers) and the training hyperparameters to suit the task.

Training Execution: Run fine-tuning, iterating over the dataset so that the LoRA adapters are updated while the base weights stay frozen.

Validation and Testing: Evaluate the fine-tuned model on the held-out data to measure its accuracy on the target task.
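The preparation and configuration steps above can be sketched with the `peft` library. This is an outline under assumptions rather than a complete recipe: the rank, alpha, and dropout values are common starting points, and `query_key_value` is assumed to be the name of Falcon's fused attention projection in Transformers.

```python
import random


def split_dataset(examples, val_fraction=0.1, seed=0):
    """Shuffle examples and split them into (train, validation) lists."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # seeded so the split is repeatable
    n_val = max(1, int(len(items) * val_fraction))
    return items[n_val:], items[:n_val]


def attach_lora(model, rank=16, alpha=32, dropout=0.05):
    """Wrap a loaded causal LM so that only LoRA adapters are trainable."""
    from peft import LoraConfig, get_peft_model  # lazy import: needs peft installed

    config = LoraConfig(
        r=rank,
        lora_alpha=alpha,
        lora_dropout=dropout,
        target_modules=["query_key_value"],  # assumption: Falcon attention projection
        bias="none",
        task_type="CAUSAL_LM",
    )
    return get_peft_model(model, config)
```

The wrapped model can then be trained with a standard training loop or the Transformers `Trainer`, and evaluated on the validation split returned by `split_dataset`. Calling `print_trainable_parameters()` on the wrapped model reports how many parameters are being trained.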

# Optimizing Performance

Two factors largely determine how efficiently Falcon runs: the hardware it runs on and the memory the model needs.

# Using CPU vs GPU

CPU Utilization: Running on CPU avoids the cost of GPU hardware, but inference is much slower, so it suits experimentation and low-volume workloads rather than latency-sensitive ones.

GPU Acceleration: Running on GPU speeds up inference significantly, because the matrix multiplications that dominate the computation run in parallel.
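A script can choose between the two at runtime. The sketch below picks a device and a matching precision; the pairing of `bfloat16` with GPU and `float32` with CPU is a common default assumed here, and older GPUs without bfloat16 support may need `float16` instead.

```python
def load_settings(cuda_available=None):
    """Pick a device and weight precision for loading the model.

    Pass `cuda_available` explicitly to override detection; with None,
    PyTorch is queried if it is installed.
    """
    if cuda_available is None:
        try:
            import torch
            cuda_available = torch.cuda.is_available()
        except ImportError:
            cuda_available = False  # no PyTorch means no GPU path
    if cuda_available:
        return {"device": "cuda", "dtype": "bfloat16"}
    return {"device": "cpu", "dtype": "float32"}
```

The `dtype` string can be turned into a PyTorch dtype with `getattr(torch, settings["dtype"])` when loading the model.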

# Performance Comparison with ChatGPT

When comparing Falcon with ChatGPT, a key practical difference is that Falcon runs on your own hardware, whereas ChatGPT is accessed as a hosted service. GPU memory therefore becomes the main constraint when choosing a Falcon model.

Falcon-40B: Requires approximately 90GB of GPU memory, which exceeds the capacity of a single 80GB accelerator.

Falcon-7B: Needs far less memory (~15GB), making it a lighter option for moderate-scale tasks.
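These figures are close to what a back-of-the-envelope estimate gives: parameter count times bytes per parameter, plus some headroom for activations and caches. The sketch below computes only the weight portion and uses the nominal 7 and 40 billion parameter counts, which is an approximation.

```python
# Storage cost of one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(n_params, dtype="bfloat16"):
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal sizes in bfloat16: about 14 GB for 7B and 80 GB for 40B,
# before any runtime overhead is added.
FALCON_7B_GB = weight_memory_gb(7e9)
FALCON_40B_GB = weight_memory_gb(40e9)
```

The same function shows why quantization helps: `weight_memory_gb(7e9, "int4")` gives 3.5 GB for the weights of the 7B model.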

Fine-tuning with methods like LoRA and matching the model size to the available hardware let you get the most out of Falcon across different AI applications.

Falcon is a large language model with a wide range of applications for commercial and industry use. This post has walked through deploying Falcon models in Python environments and highlighted fine-tuning and optimization as the main levers for better performance. Falcon has ranked highly on public leaderboards, and the Hugging Face ecosystem is a practical place to explore its capabilities further.