In machine learning, model size matters: it drives memory use, storage cost, and inference latency. Quantization compresses a model by reducing the number of bits used to represent its weights and activations. This post looks at how quantization affects model size and how it can improve efficiency while keeping accuracy loss small.

# Understanding Quantization

Quantization offers a way to compress machine learning models with little loss of accuracy. This section covers what it is and why it matters.

# Definition and Basics

# What is Quantization?

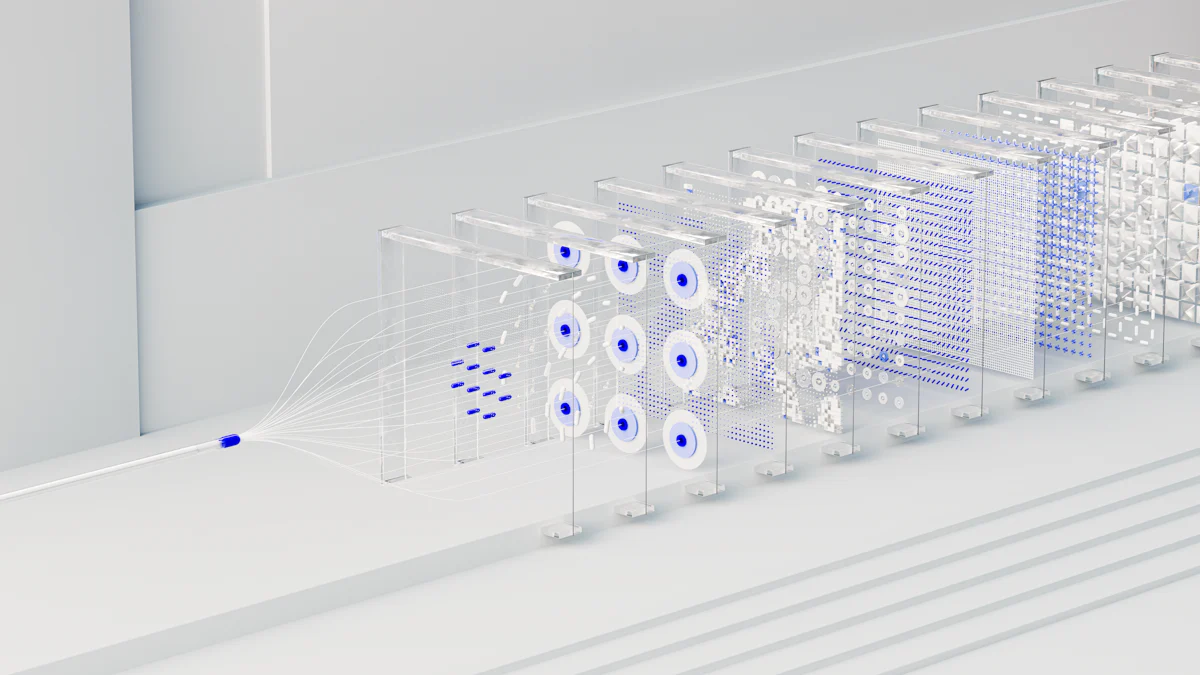

Quantization reduces the precision of the weights and activations in a neural network. Representing these values with fewer bits (for example, 8-bit integers instead of 32-bit floating point) shrinks the model while aiming to keep its accuracy close to the original.
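
To make the idea concrete, here is a minimal sketch of affine (scale and zero-point) quantization in plain Python. The function names and the sample weights are hypothetical, chosen only for illustration; real frameworks apply the same mapping to whole tensors.

```python
def quantize_affine(values, num_bits=8):
    """Map floats to unsigned integers in [0, 2**num_bits - 1]."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Include 0.0 in the range so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # Fall back to 1.0 when every value is zero, to avoid dividing by zero.
    scale = (hi - lo) / (qmax - qmin) or 1.0
    zero_point = round(qmin - lo / scale)
    q = [min(qmax, max(qmin, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_affine(q, scale, zero_point):
    """Recover approximate floats from the stored integers."""
    return [scale * (x - zero_point) for x in q]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # hypothetical sample weights
q, scale, zero_point = quantize_affine(weights)
restored = dequantize_affine(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, zero_point, max_error)
```

Each value is stored in one byte instead of four, and the round-trip error stays on the order of one quantization step (`scale`).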

# Importance in Machine Learning

Quantization is a key technique for efficient deployment. Representing numerical values with fewer bits saves memory and computation, usually at the cost of a small, measurable drop in accuracy.

# Types of Quantization

# Post-Training Quantization

Post-training quantization applies quantization to a model that has already been trained, with no further training required. The precision level can be chosen to suit the deployment target, though accuracy should be re-checked after conversion.

# Quantization Aware Training

Quantization-aware training simulates inference-time quantization during model training. Because the model sees the rounding error while its weights are still being updated, it can learn to compensate and operate effectively at reduced precision.
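
The simulation step is often called "fake quantization": values are rounded to the nearest representable level and immediately converted back to floats, so the forward pass sees the quantization error. The sketch below shows only that forward-pass step in plain Python. The names `fake_quantize` and `forward` are hypothetical, and the gradient handling a real training framework would add is omitted.

```python
def fake_quantize(x, scale, num_bits=8):
    """Round x to the nearest representable level, then return it as a float."""
    qmax = 2 ** (num_bits - 1) - 1   # 127 for 8 bits
    qmin = -qmax - 1                 # -128 for 8 bits
    q = min(qmax, max(qmin, round(x / scale)))
    return q * scale


def forward(inputs, weights, scale):
    """Dot product computed with weights as they would look after quantization."""
    return sum(i * fake_quantize(w, scale) for i, w in zip(inputs, weights))


weights = [0.12, -0.53, 0.98]   # hypothetical weights
inputs = [1.0, 2.0, 3.0]        # hypothetical inputs
scale = max(abs(w) for w in weights) / 127

exact = sum(i * w for i, w in zip(inputs, weights))
simulated = forward(inputs, weights, scale)
gap = abs(exact - simulated)
print(exact, simulated, gap)
```

The gap between `exact` and `simulated` is the error the training loss gets to see, which is what lets the model adapt to it.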

# Benefits of Quantization

# Memory and Computational Savings

Quantization significantly reduces memory usage and computational demands by representing model parameters with fewer bits. This streamlined approach enhances operational efficiency across various hardware platforms.
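
The memory saving follows directly from the bit width. As a back-of-the-envelope sketch (the parameter count is a hypothetical figure, and the small overhead of storing scales and zero points is ignored):

```python
def model_size_mb(num_params, bits_per_param):
    """Storage for the parameters alone, in megabytes (10**6 bytes)."""
    return num_params * bits_per_param / 8 / 1e6


num_params = 25_000_000  # hypothetical model, chosen only for illustration

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {model_size_mb(num_params, bits):7.1f} MB")
```

Going from 32-bit to 8-bit parameters cuts parameter storage by a factor of four, whatever the model.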

# Efficiency in Deployment

Efficient deployment is at the core of quantization benefits. By minimizing model size through reduced bit precision, neural network models become more accessible for deployment on resource-constrained devices, paving the way for widespread application integration.

# Techniques and Applications

# Quantization Techniques

# Static vs Dynamic Quantization

The distinction between static and dynamic quantization is essential. Static quantization quantizes the model's weights and activations before deployment, with activation ranges typically fixed in advance from calibration data. Dynamic quantization, on the other hand, determines activation ranges at run time, which lets it adapt to each input at the cost of some extra computation.
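
One way to see the difference is to look at when the activation scale is chosen. The sketch below is a simplified illustration in plain Python, not any framework's API. The helper names and the sample data are hypothetical.

```python
def scale_for(values, num_bits=8):
    """Symmetric scale that maps the largest magnitude to the top integer level."""
    qmax = 2 ** (num_bits - 1) - 1
    return (max(abs(v) for v in values) or 1.0) / qmax


def quantize(values, scale, num_bits=8):
    """Round to integers and clamp to the signed range for num_bits."""
    qmax = 2 ** (num_bits - 1) - 1
    return [min(qmax, max(-qmax - 1, round(v / scale))) for v in values]


# Static: the activation scale is fixed ahead of time from calibration samples.
calibration_batches = [[0.1, -0.4, 0.9], [0.3, 0.7, -1.2]]  # hypothetical data
static_scale = scale_for([v for batch in calibration_batches for v in batch])


# Dynamic: the activation scale is recomputed from each input at run time.
def quantize_per_input(activations):
    scale = scale_for(activations)
    return quantize(activations, scale), scale


runtime_input = [0.05, -2.4, 1.1]  # larger than anything seen during calibration
static_q = quantize(runtime_input, static_scale)
dynamic_q, dynamic_scale = quantize_per_input(runtime_input)
print(static_q, dynamic_q)
```

With the fixed scale, the out-of-range value `-2.4` is clipped to the lowest integer level. The per-input scale covers it, but the range has to be computed for every input.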

# Quantization of Weights and Activations

Quantizing both weights and activations has a large effect on a model's size and efficiency. Reducing the bit precision of these components gives a more compact representation, which eases deployment on resource-constrained devices. Accuracy typically stays close to the original but should be verified after conversion.

# Applications in Deep Learning

# Image Processing

The application of quantization in deep learning extends to various domains, with image processing standing out as a prominent area of implementation. By leveraging reduced precision representations, deep learning models can efficiently process images with minimal computational overhead. This optimization enables faster inference times and improved performance in image-related tasks.

# Mobile and Embedded Devices

For deploying deep learning models on mobile and embedded devices, efficient models are crucial. Through model quantization, complex models can be transformed into lightweight versions suitable for execution on diverse hardware platforms. The reduced memory footprint and computational requirements make these models ideal for real-time applications on mobile devices.

# Case Studies

# Optimum Intel with AIMET

The collaboration between Optimum Intel and AIMET showcases the practical benefits of model quantization in optimizing neural network models for efficient deployment. By applying advanced techniques such as clustering, AIMET facilitates the creation of compressed models tailored for specific hardware configurations. This comprehensive approach ensures that deep learning models achieve peak performance while conserving valuable resources.

# Optimum Intel with PyTorch

In partnership with PyTorch, Optimum Intel applies quantization techniques tailored to PyTorch models. By following a guide to model quantization, practitioners can reduce model size while keeping accuracy and computational efficiency close to the original. This highlights the value of established tooling for streamlining the deployment of deep learning solutions.

# Real-World Impact

# Size Comparison after Quantization

When quantization is applied to neural network models, the reduction in model size is noticeable. The Optimum Intel and AIMET case study above illustrates this through its analysis of model compression after quantization.

In practical terms, the gains after quantization can be substantial. In one reported scenario, the original model took 21.7 seconds to run predictions over an entire test set, while the quantized model completed the same task in 4.4 seconds, roughly a 4.9x speedup. Note that this is a latency measurement rather than a size measurement. The speedup is consistent with a smaller model and cheaper low-precision arithmetic, and it shows the tangible benefits of quantization in real-world applications.

# Analysis of Optimum Intel

Optimum Intel's analysis examines how quantization affects model size and computational efficiency across quantization techniques and neural network architectures. Its empirical, data-driven approach offers practical guidance on optimizing models for deployment while keeping accuracy loss small.

# Code Examples

Code examples help show how a neural network can be converted into a lightweight version suitable for diverse hardware platforms. Snippets that walk through the quantization process and its effect on model size give practitioners a concrete starting point for applying these techniques in their own projects.
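
As a minimal, framework-free sketch, the snippet below quantizes a list of weights to signed 8-bit integers with a single per-tensor scale and compares the packed size with the 32-bit float original. The weights are generated values for illustration only, and `quantize_symmetric` is a hypothetical helper rather than a library function.

```python
from array import array


def quantize_symmetric(weights):
    """Per-tensor symmetric int8 quantization: one float scale for the whole list."""
    scale = (max(abs(w) for w in weights) or 1.0) / 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


# Hypothetical weights, generated deterministically for illustration.
weights = [((i * 37) % 200 - 100) / 100 for i in range(1000)]

q, scale = quantize_symmetric(weights)

fp32_bytes = len(array("f", weights).tobytes())  # 4 bytes per weight
int8_bytes = len(array("b", q).tobytes()) + 4    # 1 byte per weight plus one fp32 scale

restored = [x * scale for x in q]
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"float32: {fp32_bytes} bytes, int8: {int8_bytes} bytes")
print(f"max round-trip error: {max_error:.5f}")
```

The packed int8 representation is close to a quarter of the float32 size, and each restored weight differs from the original by at most half a quantization step.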

# Performance Metrics

Quantizing neural network models not only reduces their size but also influences various performance metrics crucial for real-world deployment scenarios.

# Accuracy vs Model Size

One key metric affected by quantization is accuracy. CNN models used to detect tumors in medical images show distinct inference accuracies: VGG16's original accuracy is reported as 87%, while GoogLeNet and ResNet are reported at 88.5% and 77%, respectively. Accuracy after quantization should be compared against each model's own unquantized baseline to see what a given technique costs.
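
The trade-off can be previewed without a full model by measuring how much rounding error each bit width introduces. The sketch below uses generated weights and a hypothetical helper, `round_trip_error`. Weight error is only a rough proxy, so task accuracy still has to be measured on real data.

```python
def round_trip_error(values, num_bits):
    """Mean absolute error after symmetric quantization to num_bits."""
    qmax = 2 ** (num_bits - 1) - 1
    scale = (max(abs(v) for v in values) or 1.0) / qmax
    restored = [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]
    return sum(abs(a - b) for a, b in zip(values, restored)) / len(values)


# Hypothetical weights, generated deterministically for illustration.
weights = [((i * 61) % 997) / 997 - 0.5 for i in range(500)]

errors = {bits: round_trip_error(weights, bits) for bits in (2, 4, 8)}
for bits, err in errors.items():
    print(f"{bits}-bit: mean abs error {err:.5f}")
```

Fewer bits give a smaller model but a larger error, which is why the bit width is usually chosen by checking accuracy at each candidate setting.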

# Real-world Deployment Scenarios

In real-world settings, deploying efficiently compressed models is paramount for seamless integration across diverse hardware platforms. The reduced memory footprint and computational demands of quantized models enable faster inference times and improved performance on mobile devices or embedded systems where resource constraints are prevalent.

Quantization is a powerful technique for reducing model size and improving operational efficiency with little loss of accuracy. It opens the door to deployment on resource-constrained devices and to faster inference. As the industry continues to evolve, quantized models are likely to remain central to optimizing performance while conserving resources.