K Nearest Neighbor (KNN) is a fundamental machine learning algorithm that remains widely used across academia and industry. It is a non-parametric, supervised learning method used for both classification and regression tasks. Its simplicity and interpretability make it a common choice for applications such as face recognition, spam detection, and image recognition. Understanding KNN is worthwhile because the ideas behind it underpin many other machine learning techniques.

# What is K Nearest Neighbor

To understand K Nearest Neighbor (KNN), start with its core idea: a data point is classified according to the labels of the data points most similar to it. The algorithm has a long history. It is usually traced to work by Evelyn Fix and Joseph Hodges in 1951 and was developed further by Thomas Cover and Peter Hart in 1967. KNN has been extended since then, but its fundamental principle is unchanged.

The non-parametric nature of KNN sets it apart from many other algorithms. It makes no assumptions about the underlying data distribution, which makes it applicable across many domains. As a supervised learning method, KNN also requires labeled training data in order to make predictions.

# Key Features

Non-parametric nature: Does not assume specific properties about the data distribution.

Supervised learning: Relies on labeled training examples for prediction accuracy.

# How the Algorithm Works

# Steps in KNN

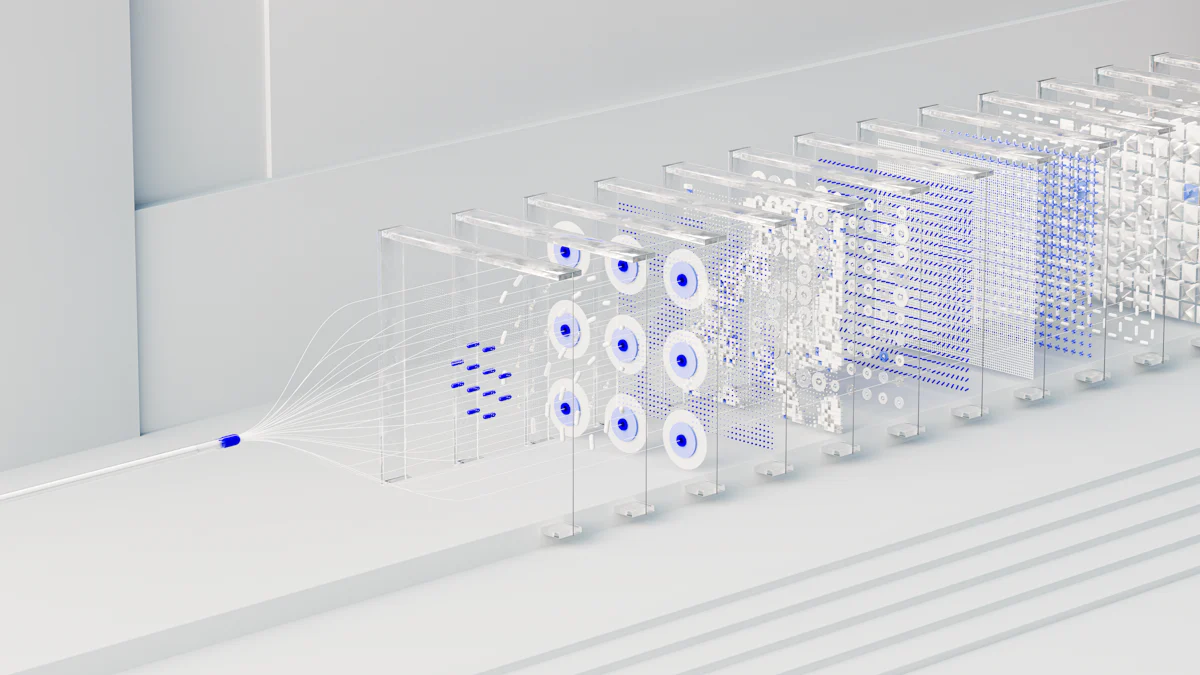

KNN classifies a new data point in three sequential steps: it stores the training data, calculates distances from the new point to the stored points, and lets the nearest neighbors vote.

# Data storage

The first step in KNN is to store all available training data: the feature values of each point together with its class label. KNN has no separate training phase that builds a model. The stored examples are what the algorithm consults each time it classifies a new data point.
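Because this step only memorizes examples, it can be sketched as little more than a container. The class name `StoredDataset` and its fields are hypothetical, chosen purely for illustration, and the two example points are made up.

```python
from dataclasses import dataclass, field


@dataclass
class StoredDataset:
    """Hypothetical container: the KNN storage step only memorizes examples."""
    features: list = field(default_factory=list)  # one feature vector per example
    labels: list = field(default_factory=list)    # class label for each vector

    def add(self, feature_vector, label):
        # Keep features and labels aligned by index.
        self.features.append(tuple(feature_vector))
        self.labels.append(label)

    def __len__(self):
        return len(self.features)


data = StoredDataset()
data.add([1.0, 2.0], "A")
data.add([6.0, 5.0], "B")
print(len(data), data.labels)
```

Nothing is computed at this stage. All of the work is deferred until a new point needs a prediction.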

# Distance calculation

After the data is stored, KNN calculates the distance between the new data point and every stored data point. Common distance metrics include Euclidean distance and Manhattan distance. These distances determine which stored points are closest to the new point.
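The two metrics named above can be sketched in a few lines of plain Python. The function names and the sample points are assumptions made for this illustration.

```python
import math


def euclidean_distance(a, b):
    # Straight-line distance: square root of the summed squared differences.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan_distance(a, b):
    # City-block distance: sum of the absolute coordinate differences.
    return sum(abs(x - y) for x, y in zip(a, b))


new_point = (0.0, 0.0)
stored_points = [(3.0, 4.0), (1.0, 1.0), (-2.0, 0.0)]  # made-up examples

for p in stored_points:
    print(p, euclidean_distance(new_point, p), manhattan_distance(new_point, p))
```

For the point `(3.0, 4.0)` the Euclidean distance is 5.0 and the Manhattan distance is 7.0, so the two metrics can rank neighbors differently.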

# Voting mechanism

Once the distances are calculated, a voting mechanism determines the class of the new data point. Each of the K nearest neighbors "votes" for its own class label, and the majority class among these neighbors becomes the predicted class for the new data point.
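Putting the three steps together, a minimal sketch of a KNN classifier might look like the following. It assumes Euclidean distance (via `math.dist`, available in Python 3.8+), a hypothetical function name `knn_classify`, and made-up toy data. Tie handling is simply left to `Counter.most_common`, which is an arbitrary choice for this sketch.

```python
import math
from collections import Counter


def knn_classify(train_points, train_labels, query, k=3):
    # Distance from the query to every stored point, paired with that point's label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbors.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Each neighbor casts one vote for its own label.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up toy data: two well-separated clusters.
train_points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_labels = ["red", "red", "red", "blue", "blue", "blue"]

print(knn_classify(train_points, train_labels, (2, 2)))  # red
print(knn_classify(train_points, train_labels, (6, 5)))  # blue
```

For two-class problems, an odd K is a common way to avoid tied votes.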

# Choosing the Value of K

Selecting an appropriate value for K significantly impacts the accuracy and performance of a KNN model.

# Impact on accuracy

The choice of K directly influences model accuracy. Small values of K make predictions sensitive to noise in individual training points, which can lead to overfitting, while large values smooth over local structure and risk underfitting.
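The effect can be seen on a small example. The sketch below uses made-up data containing one noisy label and a hypothetical helper `knn_classify`, then compares predictions for K = 1, 3, and 9 (the full dataset).

```python
import math
from collections import Counter


def knn_classify(points, labels, query, k):
    # Sort stored examples by distance to the query and keep the k closest.
    nearest = sorted(zip(points, labels), key=lambda pl: math.dist(pl[0], query))[:k]
    # Majority vote among the k neighbors.
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Made-up toy data: a cluster of "A" points containing one noisy "B" label,
# plus a small separate cluster of "B" points.
points = [(1, 1), (1, 2), (2, 1), (2, 2), (3, 2), (1.5, 1.5), (8, 8), (8, 9), (9, 8)]
labels = ["A", "A", "A", "A", "A", "B", "B", "B", "B"]

near_noise = (1.6, 1.6)    # inside the "A" cluster, next to the noisy point
in_b_cluster = (8.5, 8.5)  # inside the "B" cluster

for k in (1, 3, 9):
    print(k, knn_classify(points, labels, near_noise, k),
          knn_classify(points, labels, in_b_cluster, k))
```

With K = 1 the single noisy neighbor decides the first query ("B"). With K = 3 both queries get the label of their surrounding cluster. With K = 9 every query receives the overall majority class "A", regardless of where it lies.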

# Methods to determine K

Determining a good value for K involves balancing bias and variance in model predictions. In practice this means experimenting with different values, taking into account factors such as dataset size and complexity, and evaluating each candidate on examples held out from the stored data (for instance with cross-validation) to find a value that maximizes predictive performance while keeping computational cost acceptable.
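One way to run such an experiment is leave-one-out cross-validation: each stored point is predicted from all the others, and the K with the highest accuracy is kept. The sketch below is an illustration under that assumption, not the only selection method. The function names and the toy data are hypothetical.

```python
import math
from collections import Counter


def knn_classify(points, labels, query, k):
    nearest = sorted(zip(points, labels), key=lambda pl: math.dist(pl[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def leave_one_out_accuracy(points, labels, k):
    correct = 0
    for i in range(len(points)):
        # Hold out example i and predict it from the remaining examples.
        rest_points = points[:i] + points[i + 1:]
        rest_labels = labels[:i] + labels[i + 1:]
        if knn_classify(rest_points, rest_labels, points[i], k) == labels[i]:
            correct += 1
    return correct / len(points)


def choose_k(points, labels, candidates):
    # Score every candidate K and return the best one along with all scores.
    scores = {k: leave_one_out_accuracy(points, labels, k) for k in candidates}
    best_k = max(scores, key=scores.get)
    return best_k, scores


# Made-up toy data with one noisy label at (1.5, 1.5).
points = [(1, 1), (1, 2), (2, 1), (2, 2), (3, 2), (1.5, 1.5), (8, 8), (8, 9), (9, 8)]
labels = ["A", "A", "A", "A", "A", "B", "B", "B", "B"]

best_k, scores = choose_k(points, labels, candidates=[1, 3, 5, 7])
print(best_k, scores)
```

On this toy data the intermediate value K = 3 scores highest: K = 1 is misled by the noisy point, and larger values start to outvote the small "B" cluster.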

# Applications and Future Directions

# Practical Applications

# Classification tasks

In classification tasks, KNN plays a role in various real-world scenarios. In healthcare, for instance, it can assist in diagnosing diseases by comparing a new patient's symptoms with those of existing cases, which helps medical professionals make informed decisions about potential illnesses. On e-commerce platforms, KNN is used in recommendation systems to suggest products based on customers' preferences and past interactions, with the aim of improving user experience and customer satisfaction.

# Regression tasks

KNN is also used for regression. Here the prediction is typically the average of the target values of the K nearest neighbors rather than a majority vote. One practical example is predicting housing prices from features such as location, size, and amenities: by examining similar properties and their values, real estate agents or property evaluators can estimate the market worth of a house. In the financial sector, KNN has also been applied to forecasting stock prices by analyzing historical data and looking for patterns associated with market fluctuations, which can inform investors' decisions about buying or selling stocks.
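A minimal sketch of KNN regression, assuming the common choice of averaging the target values of the K nearest neighbors. The function name `knn_regress` and the housing figures are made up for illustration.

```python
import math


def knn_regress(train_features, train_targets, query, k=3):
    # Rank stored examples by distance to the query.
    ranked = sorted(
        zip(train_features, train_targets),
        key=lambda pair: math.dist(pair[0], query),
    )
    # Predict the mean target of the k closest examples.
    nearest_targets = [target for _, target in ranked[:k]]
    return sum(nearest_targets) / len(nearest_targets)


# Made-up toy data: (size in square meters, number of rooms) -> price in thousands.
houses = [(50, 2), (60, 3), (80, 3), (100, 4), (120, 5)]
prices = [150.0, 180.0, 240.0, 300.0, 360.0]

print(knn_regress(houses, prices, (70, 3), k=2))  # 210.0
```

In this sketch the features are used unscaled, so the size column dominates the distance. Real data would usually be scaled so that every feature contributes comparably.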

# Advantages and Disadvantages

# Strengths of KNN

The strengths of KNN lie in its simplicity and interpretability. Unlike complex models that require extensive tuning, KNN is easy to implement and understand. Its non-parametric nature allows it to adapt to different data distributions without strong assumptions. KNN also handles multi-class problems naturally, because majority voting works the same way for any number of classes.

# Limitations of KNN

Despite its advantages, KNN has limitations. It is computationally expensive on large datasets, because each prediction requires calculating the distance from the new point to every stored point. Moreover, as an instance-based learning algorithm, KNN must keep all training instances in memory, which can be impractical for massive, high-dimensional datasets.

# Future Directions

# Potential improvements

Future work on KNN focuses on improving its scalability and efficiency for big data applications. Researchers are exploring techniques that optimize distance calculations and reduce computational complexity without compromising accuracy. Parallel processing can also shorten execution time, since the distances to different stored points can be computed independently.
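As a rough illustration of both ideas, the sketch below splits the stored data into chunks, finds the K nearest candidates in each chunk with `heapq.nsmallest` (which avoids fully sorting the data), and merges the partial results. The function names and chunking scheme are assumptions for this example. Note that in standard CPython, threads typically do not speed up pure-Python arithmetic, so this shows the partition-and-merge structure rather than a measured speedup.

```python
import heapq
import math
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def nearest_in_chunk(chunk, query, k):
    # Partial result: the k closest (distance, label) pairs within one chunk.
    pairs = ((math.dist(point, query), label) for point, label in chunk)
    return heapq.nsmallest(k, pairs, key=lambda pair: pair[0])


def parallel_knn_classify(points, labels, query, k=3, n_chunks=2):
    examples = list(zip(points, labels))
    size = max(1, math.ceil(len(examples) / n_chunks))
    chunks = [examples[i:i + size] for i in range(0, len(examples), size)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        partials = pool.map(lambda chunk: nearest_in_chunk(chunk, query, k), chunks)
        candidates = [pair for partial in partials for pair in partial]
    # Merge: the global k nearest are always among the per-chunk k nearest.
    nearest = heapq.nsmallest(k, candidates, key=lambda pair: pair[0])
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Made-up toy data: two well-separated clusters.
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["red", "red", "red", "blue", "blue", "blue"]

print(parallel_knn_classify(points, labels, (2, 2)))
print(parallel_knn_classify(points, labels, (6, 5)))
```

The same partition-and-merge structure carries over to process pools or distributed workers, where the per-chunk work can actually run concurrently.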

# Emerging trends

An emerging trend is to integrate KNN with other machine learning algorithms to create hybrid models that leverage the strengths of each technique. By combining KNN with algorithms such as decision trees or neural networks, practitioners aim to build more robust predictive models that handle diverse datasets effectively. This approach may improve prediction accuracy across domains while addressing the limitations of the individual algorithms.

Recap of KNN algorithm: K Nearest Neighbor (KNN) is a versatile machine learning algorithm used for classification and regression tasks. Its simplicity and interpretability make it a popular choice across various domains. It stores all available data and classifies new data points based on their similarity to the stored ones.

Summary of key points: KNN's non-parametric nature lets it adapt to diverse data distributions without distributional assumptions. It handles classification through majority voting among the nearest neighbors, and the quality of its predictions depends on the chosen distance metric and the value of K.

Future considerations and recommendations: Moving forward, exploring optimizations for scalability and efficiency in handling large datasets will be crucial for enhancing the algorithm's performance. Integrating KNN with other machine learning techniques can lead to hybrid models that offer improved accuracy and robustness in predictive tasks.