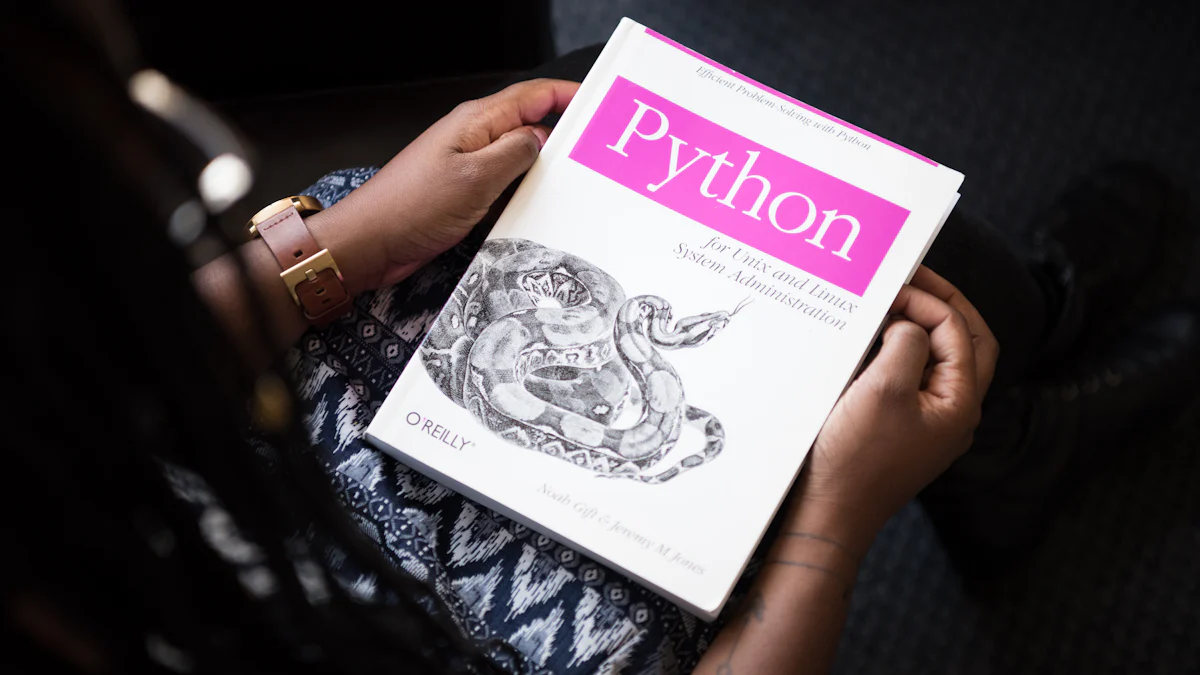

Anyone getting started with machine learning will soon run into the nearest neighbor algorithm. This blog looks at why Python stands out as a choice for implementing it efficiently. Python's rich libraries, user-friendly syntax, and active community support have made it one of the most widely used languages in machine learning. Let's walk through how KNN works and see why Python suits it so well.

# Overview of KNN

# Understanding KNN

# What is KNN?

K-nearest neighbors (KNN) is a fundamental machine learning algorithm that classifies data points based on their similarity to nearby data. It assigns a class label to a data point based on the majority class among its nearest neighbors. This simple yet powerful approach makes KNN a popular choice for many classification tasks.

# How does KNN work?

KNN operates on the principle of proximity. When presented with a new, unlabeled data point, it identifies the k closest labeled points in the training set, using a distance measure such as Euclidean distance. It then assigns the new point the most common class among those neighbors (for classification) or a value derived from the neighbors' values (for regression). Predictions are therefore based directly on the characteristics of neighboring instances.
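The steps described here can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function name `knn_predict` and the toy data are made up for this example, and Euclidean distance is assumed.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to query."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k smallest distances.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote over the neighbors' labels.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy data (hypothetical values, for illustration only)
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_labels = ["a", "a", "a", "b", "b", "b"]

prediction = knn_predict(train_points, train_labels, query=(2.0, 2.0), k=3)
print(prediction)  # prints "a": all three nearest points carry that label
```

Note that there is no training step beyond keeping the labeled points around; all the work happens when a prediction is requested.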

# Applications of KNN

# Classification

KNN is widely used for classification across domains such as industry, health, marketing, and security. Because it classifies data based on similarity, it is particularly useful where identifying patterns and grouping similar instances are crucial.

# Regression

Beyond classification, KNN also works for regression problems. By taking the average, or a distance-weighted average, of the nearest neighbors' values, it can predict continuous outcomes. This flexibility lets the same idea serve both kinds of task.
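As a rough sketch of the averaging idea, the snippet below predicts a continuous value from the k nearest targets, either as a plain mean or as an inverse-distance weighted mean. The helper `knn_regress`, the inverse-distance weighting scheme, and the data are assumptions chosen for illustration.

```python
import math


def knn_regress(train_points, train_values, query, k=3, weighted=False):
    """Predict the (optionally inverse-distance weighted) mean of the k nearest targets."""
    neighbors = sorted(
        ((math.dist(p, query), v) for p, v in zip(train_points, train_values)),
        key=lambda pair: pair[0],
    )[:k]
    if not weighted:
        return sum(v for _, v in neighbors) / len(neighbors)
    # An exact match has distance zero; return its value to avoid dividing by zero.
    for d, v in neighbors:
        if d == 0.0:
            return v
    weights = [1.0 / d for d, _ in neighbors]
    return sum(w * v for w, (_, v) in zip(weights, neighbors)) / sum(weights)


# One-feature toy data (hypothetical values)
train_points = [(1.0,), (2.0,), (3.0,), (10.0,)]
train_values = [10.0, 20.0, 30.0, 100.0]

plain = knn_regress(train_points, train_values, query=(2.5,), k=3)
weighted = knn_regress(train_points, train_values, query=(2.5,), k=3, weighted=True)
print(plain, weighted)
```

With weighting enabled, the two closest points pull the estimate toward their values, so the weighted prediction sits above the plain mean here.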

# Limitations of KNN

# Computational cost

One notable limitation of KNN is its computational cost during inference. A brute-force search compares each query against every training point, so prediction time grows with the size of the dataset. Data structures like KD-trees or Ball trees can mitigate this by speeding up neighbor searches, especially when the number of features is small.
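In scikit-learn, the search strategy is selected with the `algorithm` parameter. The sketch below, run on synthetic low-dimensional data, checks that a KD-tree search returns the same neighbor distances as brute force; the data sizes are arbitrary, and no timing claims are made because speedups depend on the dataset.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

rng = np.random.default_rng(0)
X = rng.random((2000, 3))        # synthetic training points, 3 features
queries = rng.random((5, 3))     # synthetic query points

# Same query, two search strategies.
brute = NearestNeighbors(n_neighbors=5, algorithm="brute").fit(X)
kd_tree = NearestNeighbors(n_neighbors=5, algorithm="kd_tree").fit(X)

brute_dist, brute_idx = brute.kneighbors(queries)
tree_dist, tree_idx = kd_tree.kneighbors(queries)

# The tree changes how neighbors are found, not which distances come back.
same_distances = np.allclose(brute_dist, tree_dist)
print(same_distances)
```

Passing `algorithm="ball_tree"` selects a Ball tree instead, and the default `algorithm="auto"` lets scikit-learn pick a strategy based on the data.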

# Sensitivity to irrelevant features

Another drawback of KNN is its sensitivity to irrelevant features. Because every feature contributes to the distance calculation, noisy or redundant attributes can distort which points count as neighbors and hurt performance. Feature selection and dimensionality reduction techniques play a vital role in addressing this limitation and improving model accuracy.
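A tiny, deliberately contrived example shows the effect. Here the first feature is meant to be informative and the second is an unrelated column on a much larger scale; both the values and the helper `nearest_label` are hypothetical.

```python
import math


def nearest_label(points, labels, query):
    """Label of the single closest training point (k = 1)."""
    distances = [math.dist(p, query) for p in points]
    return labels[distances.index(min(distances))]


# Feature 0 is informative; feature 1 is large-scale noise (made-up values).
points = [(1.0, 900.0), (5.0, 100.0)]
labels = ["low", "high"]
query = (1.2, 120.0)

with_noise = nearest_label(points, labels, query)
informative_only = nearest_label([p[:1] for p in points], labels, query[:1])
print(with_noise, informative_only)  # prints "high low"
```

Dropping the noisy column flips the prediction, which is the kind of distortion that feature selection or dimensionality reduction is meant to prevent.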

# Why Python is Ideal for KNN

# Rich Libraries and Frameworks

Python stands out as a choice for implementing KNN thanks to its rich libraries and frameworks, which streamline the machine learning process. Scikit-learn, a prominent Python library, offers a comprehensive suite of tools for data processing, model building, and evaluation. Its consistent interface simplifies tasks like feature extraction, model selection, and hyperparameter tuning. NumPy and Pandas add efficient array computation and data handling on top of that.

# Ease of Use

The simplicity of Python's syntax makes it a good fit for beginners and experts alike. With straightforward commands and a logical structure, implementing KNN becomes intuitive. NumPy provides an efficient multidimensional array object for working with large datasets, enabling fast data manipulation and mathematical operations as well as integration with other libraries. Pandas, in turn, offers easy-to-use data structures and tools for loading, cleaning, transforming, and preparing structured datasets for modeling.
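To give a sense of how NumPy's array object helps with KNN, the sketch below computes all query-to-training distances in one vectorized expression using broadcasting. The arrays are toy values chosen for illustration.

```python
import numpy as np

train = np.array([[1.0, 1.0], [4.0, 5.0], [0.0, 0.0]])
queries = np.array([[1.0, 1.0], [0.0, 3.0]])

# Broadcasting: (n_queries, 1, n_features) - (1, n_train, n_features)
diffs = queries[:, None, :] - train[None, :, :]

# Euclidean distance from every query to every training point.
distances = np.sqrt((diffs ** 2).sum(axis=2))   # shape (n_queries, n_train)

# Index of the closest training point for each query.
nearest_index = distances.argmin(axis=1)
print(distances.shape, nearest_index)
```

No explicit Python loop is needed, which is one reason array-based code tends to stay short and fast as datasets grow.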

# Strong Community Support

Python's robust community support serves as a valuable resource for machine learning enthusiasts at all skill levels. Active forums dedicated to Python programming foster knowledge sharing, problem-solving, and collaboration among developers worldwide. The abundance of online tutorials, documentation, and open-source projects empowers individuals to explore new concepts, troubleshoot issues efficiently, and stay updated on the latest trends in machine learning.

# Practical Implementation in Python

# Setting Up the Environment

The first step in implementing KNN in Python is setting up the environment: installing the necessary libraries, then loading and preprocessing the data so the rest of the workflow runs smoothly.

# Installing Necessary Libraries

Before diving into creating a KNN model, it is essential to install key libraries that facilitate machine learning tasks in Python. By leveraging libraries such as NumPy and Scikit-learn, users can access a wide array of functions for data manipulation, model building, and evaluation. Installing these libraries lays a solid foundation for implementing the K-nearest neighbors algorithm effectively.
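One quick way to confirm the installation worked is to import the libraries and print their versions. The install command in the comment assumes `pip` is the package manager in use; other environments (conda, for instance) use their own commands.

```python
# Install first from a shell, for example:
#   python -m pip install numpy scikit-learn
import numpy as np
import sklearn

# Collect the installed versions; an ImportError above means a package is missing.
versions = {"numpy": np.__version__, "scikit-learn": sklearn.__version__}
for name, version in versions.items():
    print(f"{name}: {version}")
```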

# Loading and Preprocessing Data

Data is the cornerstone of any machine learning project, and KNN is no exception. Loading a well-prepared dataset is crucial for training and testing the model accurately. Preprocessing steps such as feature scaling and handling missing values matter especially for KNN: because predictions rely on distances, features on larger scales would otherwise dominate the result. Careful data preparation improves both the performance and the reliability of the model.
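A minimal sketch of loading and preprocessing might look like this. It assumes scikit-learn's bundled Iris dataset as a stand-in for real data, and it removes one value on purpose so the imputation step has something to fill.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Knock out one value so there is a gap to handle (illustration only).
X = X.copy()
X[0, 0] = np.nan

# Fill missing entries with the column mean, then standardize each feature
# to zero mean and unit variance so no feature dominates the distances.
X_imputed = SimpleImputer(strategy="mean").fit_transform(X)
X_scaled = StandardScaler().fit_transform(X_imputed)
print(X_scaled.shape)
```

In a real project the imputer and scaler would usually be fitted on the training split only and then applied to the test split, to avoid leaking test information into preprocessing.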

# Implementing KNN in Python

With the environment set up and data ready for processing, the next phase revolves around implementing the K-nearest neighbors algorithm in Python. This stage encompasses creating the KNN model from scratch, training it on the dataset, and evaluating its performance through testing.

# Creating the KNN Model

The first step in implementing KNN is creating a model that classifies data points based on their proximity to neighboring instances. Two choices define it: the number of neighbors (k) and the distance metric, such as Euclidean distance. Together they lay the foundation for accurate predictions.
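With scikit-learn, those two choices map onto the `n_neighbors` and `metric` parameters of `KNeighborsClassifier`. The sketch below uses a made-up six-point dataset and k = 3, both picked only for illustration.

```python
from sklearn.neighbors import KNeighborsClassifier

# Toy data: two well-separated groups (hypothetical values).
X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y = [0, 0, 0, 1, 1, 1]

# k = 3 neighbors, Euclidean distance.
model = KNeighborsClassifier(n_neighbors=3, metric="euclidean")
model.fit(X, y)

predictions = model.predict([[0.5, 0.5], [5.5, 5.5]])
print(predictions)
```

Other metric names, such as `"manhattan"`, can be passed the same way when a different notion of distance fits the data better.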

# Training and Testing the Model

Once the KNN model is created, it is trained on labeled data points. KNN is a lazy learner: training essentially stores the labeled data, and the real work happens at prediction time. Testing then shows how well the model generalizes to unseen data. By splitting the dataset into training and testing sets, users can validate the model's accuracy and identify areas for optimization.
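A typical split-train-test cycle can be sketched as follows. The Iris dataset, the 75/25 split, the fixed `random_state`, and k = 5 are all assumptions made for this example.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Hold out 25% of the rows for testing; stratify to keep class proportions.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

model = KNeighborsClassifier(n_neighbors=5)
model.fit(X_train, y_train)                    # stores the training data

test_accuracy = model.score(X_test, y_test)    # fraction of correct predictions
print(f"test accuracy: {test_accuracy:.3f}")
```

Scoring on rows the model has never seen gives a more honest estimate than scoring on the training set, where each point can be its own nearest neighbor.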

# Evaluating The Model

Once training and testing are complete, evaluating the model's performance shows how effective it is likely to be in real-world scenarios.

# Measuring Accuracy

Measuring accuracy means assessing how closely the trained KNN classifier's predictions match the actual outcomes on new data points. Metrics like precision, recall, and F1 score complement plain accuracy: they show which kinds of errors the classifier makes and where improvements may be necessary.
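These metrics are available in `sklearn.metrics`. The labels below are hand-written for illustration (they are not the output of a real model), which makes the results easy to verify by counting.

```python
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# Hypothetical true and predicted labels for a binary problem.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

# Counting by hand: 3 true positives, 2 false positives, 1 false negative, 2 true negatives.
accuracy = accuracy_score(y_true, y_pred)     # (3 + 2) / 8 = 0.625
precision = precision_score(y_true, y_pred)   # 3 / (3 + 2) = 0.6
recall = recall_score(y_true, y_pred)         # 3 / (3 + 1) = 0.75
f1 = f1_score(y_true, y_pred)                 # harmonic mean of precision and recall

print(accuracy, precision, recall, round(f1, 3))
```

Here recall is higher than precision: the classifier finds most of the positives but also raises some false alarms, a distinction that accuracy alone would hide.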

# Optimizing The Model

Optimizing a KNN model means tuning parameters such as the value of k or trying different distance metrics to improve its predictions. By adjusting these iteratively based on evaluation results, users can refine their models for the application at hand.
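One common way to automate this tuning is a cross-validated grid search. The sketch below assumes the Iris dataset and a small, arbitrary grid of k values and metrics; real projects would choose the grid to suit their data.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Candidate settings to compare (an arbitrary illustrative grid).
param_grid = {
    "n_neighbors": [1, 3, 5, 7, 9],
    "metric": ["euclidean", "manhattan"],
}

# Evaluate every combination with 5-fold cross-validation.
search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

best_params = search.best_params_
best_score = search.best_score_
print(best_params, round(best_score, 3))
```

Odd values of k are a common choice for binary problems because they avoid tied votes, though the best value always depends on the dataset.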

KNN is a versatile algorithm that offers interpretability and adaptability across many scenarios. Its simplicity and its ability to predict both categorical and continuous variables make it a valuable tool for classification and regression alike. Python, with libraries like Scikit-learn and its user-friendly syntax, makes KNN models straightforward to implement. Looking ahead, combining KNN's strengths with Python's capabilities points to exciting future developments in machine learning applications.