# Full-Text Search

NOTE

This guide is only applicable to the DB version 1.5.0 or higher.

Traditional database textual search capabilities with operators like LIKE and ILIKE, have been fundamental for years. However, they fall short in addressing the needs of modern information retrieval systems due to several limitations:

- Lack of Linguistic Support: Traditional methods struggle with language nuances, failing to recognize word derivations (e.g., "satisfies" vs. "satisfy"), which can lead to incomplete or inaccurate search results. Although it's possible to manually search for variations using

OR, this approach is cumbersome and error-prone. - Absence of Result Ranking: Without the ability to rank search results, sifting through thousands of matches becomes inefficient.

- Performance Issues: The lack of index support means every document must be processed for each search, leading to slow performance.

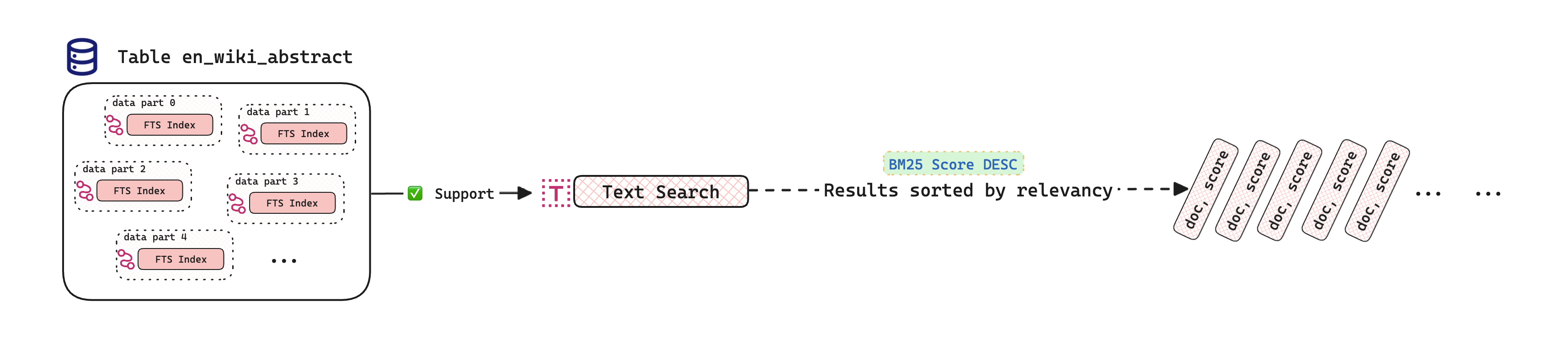

To overcome these challenges, MyScale introduces a new index type called FTS Index (Full-Text Search Index), powered by the Tantivy (opens new window) library—a high-performance, open-source full-text search engine library. The FTS Index supports the BM25 indexing algorithm, enabling efficient and relevant search results. This integration enhances MyScale's full-text search functionality and improves overall performance.

# Tutorial Overview

This tutorial guides you through conducting three types of search experiments with FTS indexes:

- FTS Index Creation: Learn how to configure FTS indexes for various use cases.

- Using the

TextSearch()Function: Discover how to search and rank texts with BM25 scores. - Natural Language Queries: Enhance search logic with natural language connectives like

ANDandOR. - Using the

full_text_searchTable Function: Discover how to perform full-text search on a FTS index with multiple text columns. - String Search Functions in MyScale: Explore how MyScale leverages FST indexes for improved string search capabilities.

Before starting, ensure you have a MyScale cluster set up. For setup instructions, refer to our Quickstart Guide (opens new window).

# Dataset Overview

We'll use the Wikipedia abstract dataset (opens new window), containing over 5.6 million records, available in the Parquet format. This dataset will be directly imported into MyScale from S3, eliminating the need for local downloads.

The table below briefly describes the content of this dataset.

| id | body | title | url |

|---|---|---|---|

| ... | ... | ... | ... |

| 77 | Jake Rodkin is an American .... and Puzzle Agent. | Jake Rodkin | https://en.wikipedia.org/wiki/Jake_Rodkin (opens new window) |

| 78 | Friedlandpreis der Heimkehrer is ... of Germany. | Friedlandpreis der Heimkehrer | https://en.wikipedia.org/wiki/Friedlandpreis_der_Heimkehrer (opens new window) |

| ... | ... | ... | ... |

# Creating and Populating the Table

Create the en_wiki_abstract table in MyScale using the following SQL command:

CREATE TABLE default.en_wiki_abstract(

`id` UInt64,

`body` String,

`title` String,

`url` String,

)

ENGINE = MergeTree

ORDER BY id;

Then, import the dataset from S3. Please wait patiently for the data import to complete.

INSERT INTO default.en_wiki_abstract SELECT * FROM s3('https://myscale-datasets.s3.ap-southeast-1.amazonaws.com/wiki_abstract_5m.parquet','Parquet');

Verify that the table contains 5,648,453 rows of data.

SELECT count(*) FROM default.en_wiki_abstract;

Output:

| count() |

|---|

| 5648453 |

To improve search performance, we can optimize the table by consolidating it into a single data part. This step is optional.

OPTIMIZE TABLE default.en_wiki_abstract FINAL;

Run the following SQL statement to check if the data in this table has been compressed into one part.

SELECT COUNT(*) FROM system.parts

WHERE table = 'en_wiki_abstract' AND active = 1;

If the data is compressed to 1, this SQL statement will return the following result set:

| count() |

|---|

| 1 |

# Understanding FTS Index Parameters

MyScale supports various tokenizers, each suited for different scenarios. When creating an FTS index, you can customize it with a JSON configuration for the tokenizer.

TIP

Please provide a valid JSON string in the parameter of FTS index.

-- Example 1: Create an index on a single text column using the default tokenizer without specifying additional parameters.

ALTER TABLE [table_name] ADD INDEX [index_name] [column_name]

TYPE fts;

-- Example 2: Create an index on a single text column with the default tokenizer explicitly defined.

ALTER TABLE [table_name] ADD INDEX [index_name] [column_name]

TYPE fts('{"<column_name>":{"tokenizer":{"type":"default"}}}');

-- Example 3: Create an index on a single text column using the ICU tokenizer.

ALTER TABLE [table_name] ADD INDEX [index_name] [column_name]

TYPE fts('{"<column_name>":{"tokenizer":{"type":"icu"}}}');

-- Example 4: Create an index using the stem tokenizer while applying stop words for filtering.

ALTER TABLE [table_name] ADD INDEX [index_name] [column_name]

TYPE fts('{"<column_name>":{"tokenizer":{"type":"stem", "stop_word_filters":["english"]}}}');

-- Example 5: Create an index on multiple text columns; each column will use the default tokenizer by default.

ALTER TABLE [table_name] ADD INDEX [index_name] ([column_name_0], [column_name_1], ...)

TYPE fts;

-- Example 6: Specify different tokenizer types for each text column in the index.

ALTER TABLE [table_name] ADD INDEX [index_name] ([column_name_0], [column_name_1], ...)

TYPE fts('{"<column_name_0>":{"tokenizer":{"type":"icu"}}, "<column_name_1>":{"tokenizer":{"type":"whitespace"}}}');

The table below lists the types of tokenizers supported by the FTS index.

| Type of Tokenizer | Description |

|---|---|

default | Default tokenizer, splits text on non-alphabetic characters, case-insensitive |

raw | Raw tokenizer, performs no tokenization on the text, treats the entire text as a single token |

simple | Simple tokenizer, splits text on non-alphabetic characters |

stem | Stemming tokenizer, supports multiple languages, converts words to their stem form, can ignore word tenses |

whitespace | Whitespace tokenizer, splits text on whitespace characters (spaces, tabs, newlines, etc.) |

ngram | N-gram tokenizer, splits text based on specified n-gram length |

chinese | Chinese tokenizer, performs tokenization on Chinese text, internally uses the jieba tokenization library |

icu | The ICU tokenizer excels at handling multilingual text. If you're struggling with tokenizing text in multiple languages, the ICU tokenizer is your ultimate solution. |

# Common Tokenizer Parameters

The default and raw tokenizers only support the store_doc parameter, while the other tokenizers support following common parameters.

| Parameter Name | Type | Default Value | Description |

|---|---|---|---|

store_doc | boolean | false | Whether to store the original document, currently not recommended to enable |

length_limit | number | 40 | Maximum length of tokenized tokens |

case_sensitive | boolean | false | Whether tokenization is case-sensitive |

Most tokenizers support additional parameters in addition to the common ones mentioned above.

# simple、stem、whitespace、icu tokenizers

| Parameter Name | Type | Default Value | Description |

|---|---|---|---|

stop_word_filters | string[] | [] | Stop word filters, specifies which languages to discard stop words during tokenization, all valid languages include ["danish", "dutch", "english", "finnish", "french", "german", "hungarian", "italian", "norwegian", "portuguese", "russian", "spanish", "swedish"] |

# stem、icu tokenizer

| Parameter Name | Type | Default Value | Description |

|---|---|---|---|

stem_languages | string[] | [] | Languages used for stemming, for English, it can ignore word tenses during tokenization, supported languages for the stem tokenizer are ["arabic", "danish", "dutch", "english", "finnish", "french", "german", "greek", "hungarian", "italian", "norwegian", "portuguese", "romanian", "russian", "spanish", "swedish", "tamil", "turkish"] |

# ngram tokenizer

| Parameter Name | Type | Default Value | Description |

|---|---|---|---|

min_gram | number | 2 | Minimum number of grams |

max_gram | number | 3 | Maximum number of grams |

prefix_only | boolean | false | Whether to only extract n-grams from the prefix of words |

# chinese tokenizer

For the Chinese tokenizer, cang-jie (opens new window) is used as the underlying implementation.

| Parameter Name | Type | Default Value | Description |

|---|---|---|---|

jieba | string | "default" | "default" means using the jieba dictionary, "empty" means not using the built-in jieba dictionary for tokenization. Valid values are "default" or "empty" |

mode | string | "search" | Chinese tokenization mode, valid values are "all", "default", "search", or "unicode", the differences between each mode can be referred to cang-jie/options.rs (opens new window) |

hmm | boolean | false | Whether to enable HMM |

The above provides a detailed description of the parameters for MyScale FTS index tokenizers. When creating an FTS index, you can select the tokenizer type that best suits your needs and configure its parameters to enhance search performance and results.

# icu tokenizer

| Parameter Name | Type | Default Value | Description |

|---|---|---|---|

mode | string | "word" | Valid values are "word", "sentence", "line", "grapheme", "word". The "word" mode performs best in multilingual contexts. |

For more information on the features of the ICU tokenizer, please refer to icu_segmenter (opens new window)

# Creating an FTS Index

Customize your FTS (Full-Text Search) index with the appropriate tokenizer to optimize search performance. For instance, employing the stem tokenizer with English stop words can enhance search accuracy by focusing on the root forms of words.

Single-column and multi-column indexes play a crucial role in text search capabilities. Single-column indexes facilitate efficient searches within a specific field, while multi-column indexes allow for comprehensive searches across multiple fields, enhancing overall search performance. To perform text searches on a single-column index, you can use the TextSearch function. For multi-column indexes, the full_text_search function enables effective searches across all indexed columns.

# Creating a Single-Column Text Index

To create a single-column text index, use the following SQL statement:

ALTER TABLE default.en_wiki_abstract

ADD INDEX body_idx body

TYPE fts('{"body":{"tokenizer":{"type":"stem", "stop_word_filters":["english"]}}}');

In this example, we create an FTS index on the body column. Note that FTS indexes (similar to skip indexes in ClickHouse) are only applied to newly inserted data. Therefore, simply adding the index will not affect existing data. To index already existing records, execute the following statement:

ALTER TABLE default.en_wiki_abstract MATERIALIZE INDEX body_idx;

# Creating a Multi-Column Text Index

You can also create an index on multiple text columns to improve search capabilities across various fields. For example:

ALTER TABLE default.en_wiki_abstract

ADD INDEX multi_col_idx (body, title)

TYPE fts('{"body":{"tokenizer":{"type":"stem", "stop_word_filters":["english"]}}, "title":{"tokenizer":{"type":"default"}}}');

In this case, we define an FTS index on both the body and title columns, using different tokenizers for each. Of course, you will also need to execute the MATERIALIZE statement, just like when creating a single-column index:

ALTER TABLE default.en_wiki_abstract MATERIALIZE INDEX multi_col_idx;

# Searching with Document BM25 Ranking

The following example shows how to use the TextSearch() function. The example returns the top 10 most relevant documents to "non-profit institute in Washington". The metric for measuring relevance is the BM25 score returned by the TextSearch() function - the higher the score, the more relevant it is.

SELECT

id,

title,

body,

TextSearch(body, 'non-profit institute in Washington') AS score

FROM default.en_wiki_abstract

ORDER BY score DESC

LIMIT 5;

From the results, we can see that the text in each row of the body column is related to the search phrase "non-profit institute in Washington".

| id | title | body | score |

|---|---|---|---|

| 3400768 | Drug Strategies | Drug Strategies is a non-profit research institute located in Washington D.C. | 24.457561 |

| 872513 | Earth Policy Institute | Earth Policy Institute was an independent non-profit environmental organization based in Washington, D.C. | 22.730673 |

| 895248 | Arab American Institute | Founded in 1985, the Arab American Institute is a non-profit membership organization based in Washington D.C. | 21.955559 |

| 1950599 | Environmental Law Institute | The Environmental Law Institute (ELI) is a non-profit, non-partisan organization, headquartered in Washington, D.C. | 21.231567 |

| 2351478 | Public Knowledge | Public Knowledge is a non-profit Washington, D.C. | 20.742344 |

From DB version v1.8 or higher, the TextSearch() function can search on a text column using a multi-columns FTS index. The usage is same as the above for single column FTS index.

# Leveraging Natural Language Queries

MyScale employs the Tantivy library for full-text search (FTS) indexing, enabling support for complex natural language queries. For further information, refer to Tantivy's documentation (opens new window).

Here is an example of a multi-condition combination query using AND and OR. We want to search for Anthropological topics related to New York City, Africa, or Paris. The SQL query would be:

SELECT

id,

title,

body,

TextSearch(body, 'Anthropological AND ("New York City" OR African OR Paris)') AS score

FROM default.en_wiki_abstract

ORDER BY score DESC

LIMIT 5;

The search results show that each line of text contains the word "Anthropological" (case-insensitive), satisfying the condition on the left side of the AND statement. At the same time, each result contains at least one of "New York City", "African" or "Paris", meeting the matching condition on the right side of the AND statement.

| id | title | body | score |

|---|---|---|---|

| 2826913 | African Anthropologist | African Anthropologist is the journal of the Pan African Anthropological Association (PAAA). | 20.131313 |

| 3905943 | Tunnel People | Tunnel People is an anthropological-journalistic account describing an underground homeless community in New York City. It is written by war photographer and anthropologist Teun Voeten and was published by PM Press in 2010. | 13.759308 |

| 3790627 | Les Accords de Bella | Les Accords de Bella is a 2007 anthropological documentary film directed by David Constantin. It was selected by the African Film Festival of Cordoba - FCAT. | 12.769518 |

| 4488199 | Naparay | Naparay, in African anthropological study, is a non-linear conception of human life held by some West African peoples such as the Yoruba. Similar to reincarnation, naparay holds that lives are cyclic and attributes of previous lives may carry over to a new life. | 11.682068 |

| 1725559 | Gradhiva | Gradhiva is an anthropological and museological journal, founded in 1986 by the poet and social scientist Michel Leiris and by the anthropologist Jean Jamin. It is since 2005 published by the Musée du Quai Branly in Paris. | 11.135916 |

# TextSearch Parameters Explain

NOTE

Parameters are available only in DB version v1.6.3 or higher.

Below is a detailed description of the TextSearch() parameters:

| Parameter | Default Value | Candidate Values | Description |

|---|---|---|---|

enable_nlq | true | true, false | This parameter decides whether to enable the natural language query parsing. When set to true, FTS will interpret user input as a natural language query. When set to false, FTS will use standard terms query to parse user input. |

operator | OR | OR, AND | This parameter specifies the logical operator to use for combining each query terms (tokenized by tokenizer). Selecting OR will return results that match any of the conditions, while selecting AND will return results that match all terms. |

NOTE

TextSearch for distributed tables are available only in DB version v1.7.0 or higher. For TextSearch on distributed tables, building the corresponding FTS index on the local tables is sufficient.

Below is a detailed description of the TextSearch() query settings:

| Settings | Default Value | Candidate Values | Description |

|---|---|---|---|

dfs_query_then_fetch | false | true, false | For distributed tables, this setting decides whether to first perform a distributed query to collect statistics from all shards, followed by a precise full-text search (FTS) based on the collected statistics. |

# Example Usage

SELECT

id,

title,

body,

TextSearch('enable_nlq=true', 'operator=OR')(body, 'mammoth AND Europe') AS score

FROM default.wiki_abstract_text

ORDER BY score DESC

LIMIT 5

Based on the results, each row contains both "mammoth" and "Europe" which aligns with the logic of enabling natural language query.

Please note that there are only 2 search results. Although our SQL query set the limit to 5, only 2 entries in the table meet the criteria.

| id | title | body | score |

|---|---|---|---|

| 3171491 | Leymus racemosus | Leymus racemosus is a species of perennial wild rye known by the common name mammoth wild rye. It is native to southeastern and eastern Europe, Middle Asia, Caucasus, Siberia, China, Mongolia, New Zealand, and parts of North America. | 10.067189 |

| 2719784 | Venus of Hohle Fels | The Venus of Hohle Fels (also known as the Venus of Schelklingen; in German variously ) is an Upper Paleolithic Venus figurine made of mammoth ivory that was unearthed in 2008 in Hohle Fels, a cave near Schelklingen, Germany. It is dated to between 35,000 and 40,000 years ago, belonging to the early Aurignacian, at the very beginning of the Upper Paleolithic, which is associated with the earliest presence of Cro-Magnon in Europe. | 6.9371195 |

SELECT

id,

title,

body,

TextSearch('enable_nlq=false', 'operator=OR')(body, 'Atlantic AND Europe') AS score

FROM default.wiki_abstract_text

ORDER BY score DESC

LIMIT 5

After disabling natural language query, the results no longer guarantee the simultaneous appearance of both "Atlantic" and "Europe." Since the default value of operator is OR, any row containing "Atlantic", "AND", or "Europe" will be included in the search results.

| id | title | body | score |

|---|---|---|---|

| 3046233 | And | And or AND may refer to: | 13.748591 |

| 5050203 | A N D (Tricot album) | And}} | 13.047318 |

| 357499 | Andromeda I | And 1}} | 12.335348 |

| 678064 | Omicron Andromedae | And 1}} | 12.335348 |

| 3716928 | Platycheirus ramsaerensis | Platycheirus ramsaerensis is a species of hoverfly. It is found along the parts of northern Europe that face the Atlantic. | 11.937536 |

# Table Function full_text_search

Note

Table function full_text_search() are available only in DB version v1.7.0 or higher.

The table function full_text_search() is utilized to perform text searches on a FTS index with multiple text columns in MyScale. The basic syntax for the full_text_search() table function is as follows:

full_text_search(table_name, index_name, query [,with_score] [,enable_nlq] [,operator])

table_namerefers to the table containing the FTS index.index_namerefers to the FTS index containing multiple text columns to be searched.queryrefers to the search string that conforms to Tantivy query language.with_scorerefers to whether to return the bm25 score column (default to 0).enable_nlqrefers to whether to enable natural langurage query parsing (default to 1).operatorrefers to the logical operator to use for combining each query terms (tokenized by tokenizer) (default to 'OR').

The following example shows how to use the full_text_search() function. The example returns the top 5 most relevant documents that the body column contains "non-profit institute in Washington" or the title column contains "Institute". The metric for measuring relevance is the BM25 score returned by the full_text_search() function - the higher the score, the more relevant it is.

SELECT

id,

title,

body,

bm25_score

FROM full_text_search('default.en_wiki_abstract', 'multi_col_idx', 'body:non-profit institute in Washington or title:Institute', 1)

ORDER BY bm25_score DESC

LIMIT 5;

From the results, we can see that either the text in each row of the body column is related to the search phrase "non-profit institute in Washington" or the text of the title column is related to the search phrase "Institute".

| id | title | body | bm25_score |

|---|---|---|---|

| 849009 | Washington Institute | Washington Institute may mean | 38.110603 |

| 872513 | Earth Policy Institute | Earth Policy Institute was an independent non-profit environmental organization based in Washington, D.C. | 35.545998 |

| 895248 | Arab American Institute | Founded in 1985, the Arab American Institute is a non-profit membership organization based in Washington D.C. | 34.77088 |

| 1730029 | Washington Cancer Institute | The Washington Cancer Institute (WCI) is Washington, D.C. | 34.445385 |

| 1950599 | Environmental Law Institute | The Environmental Law Institute (ELI) is a non-profit, non-partisan organization, headquartered in Washington, D.C. | 34.046894 |

# Accelerating Built-In String Functions

FTS indexes can also be used to accelerate ClickHouse's built-in string functions, including equals, notEquals, like, notLike, hasToken, hasTokenOrNull, in, notIn, startsWith, endsWith, has, mapContains, and multiSearchAny.

To control whether the FTS index is enabled when executing these string functions, a query setting named enable_fts_index_for_string_functions is provided. The default value is 0, meaning that the FTS index is not used when executing these built-in ClickHouse functions. Users can manually set it to 1 to enable FTS. For more information about how to use query settings, please refer to the ClickHouse Query-level Settings (opens new window).

# Why is the Default Value of enable_fts_index_for_string_functions Set to 0?

Efficiency Considerations:

When the searched string appears relatively infrequently in the entire table, using FTS can achieve significant acceleration. However, if the searched string appears very frequently in the table, such as common words like What, his, etc., using FTS indexes in this scenario can actually slow down the query speed of regular functions because most of the granules will be read.

Search Result Accuracy:

FTS indexes tokenize the text in the table, and the behavior of tokenization varies depending on the type of tokenizer. For example, the raw tokenizer treats the original text as a single token, while the whitespace tokenizer splits the original text into multiple tokens based on spaces. Below are the tokenization results of these two tokenizers for "WhiteHouse is very beautiful.":

tokenizer(raw):token<"WhiteHouse is very beautiful.">tokenizer(whitespace):token<"WhiteHouse">,token<"is">,token<"very">,token<"beautiful">

The behavior of the tokenizer can affect the accuracy of the string matching functions. When using the raw tokenizer, executing a like query %WhiteHouse%beautiful%, FTS converts the like query into a RegexQuery in Tantivy and searches all tokens, and it is clear that we can correctly match token<"WhiteHouse is very beautiful.">.

However, when using the whitespace tokenizer, FTS converts the like query %WhiteHouse%beautiful% into a regex match, and finds that it cannot match any of the existing tokens, then the text segment cannot be marked as a hit, resulting in inaccurate search results.

Thus, for string functions that are converted to RegexQuery including like, notLike, startsWith, and endsWith, when enable_fts_index_for_string_functions is set to 1, only the raw tokenizer can provide correct results.

# Example Usage

Example 1: Perform a Simple Keyword Search

Search for the single word 'Tsinghua'.

SELECT count(*)

FROM default.en_wiki_abstract

WHERE hasToken(body, 'Tsinghua')

SETTINGS enable_fts_index_for_string_functions=1;

Output:

| count() |

|---|

| 81 |

Example 2: Perform a Multi-Keyword Search

Find articles that contain "Eiffel Tower" but not "Paris".

SELECT count(*)

FROM default.en_wiki_abstract

WHERE (NOT hasToken(body, 'Paris')) AND multiSearchAny(body, ['Eiffel', 'Tower'])

SETTINGS enable_fts_index_for_string_functions=1;

Output:

| count() |

|---|

| 2828 |

Example 3: Perform a Complex Conditional Combination Query

Combine different names, places, disciplines, and other terms.

SELECT count(*)

FROM default.en_wiki_abstract

WHERE (NOT multiSearchAny(body, ['Montessori', 'postulated', 'Rooney'])) AND (hasToken(body, 'Patsy') OR hasToken(body, 'Anthropological'))

SETTINGS enable_fts_index_for_string_functions=1;

Output:

| count() |

|---|

| 204 |

# Conclusion

This guide has walked you through leveraging MyScale for advanced text search capabilities, from setting up FTS indexes to executing natural language queries. By applying these techniques, you can efficiently manage and search through unstructured text data, showcasing MyScale's robust text processing capabilities.