# Why Vector Embeddings Matter in Python

# Understanding the Basics of Vector Embeddings

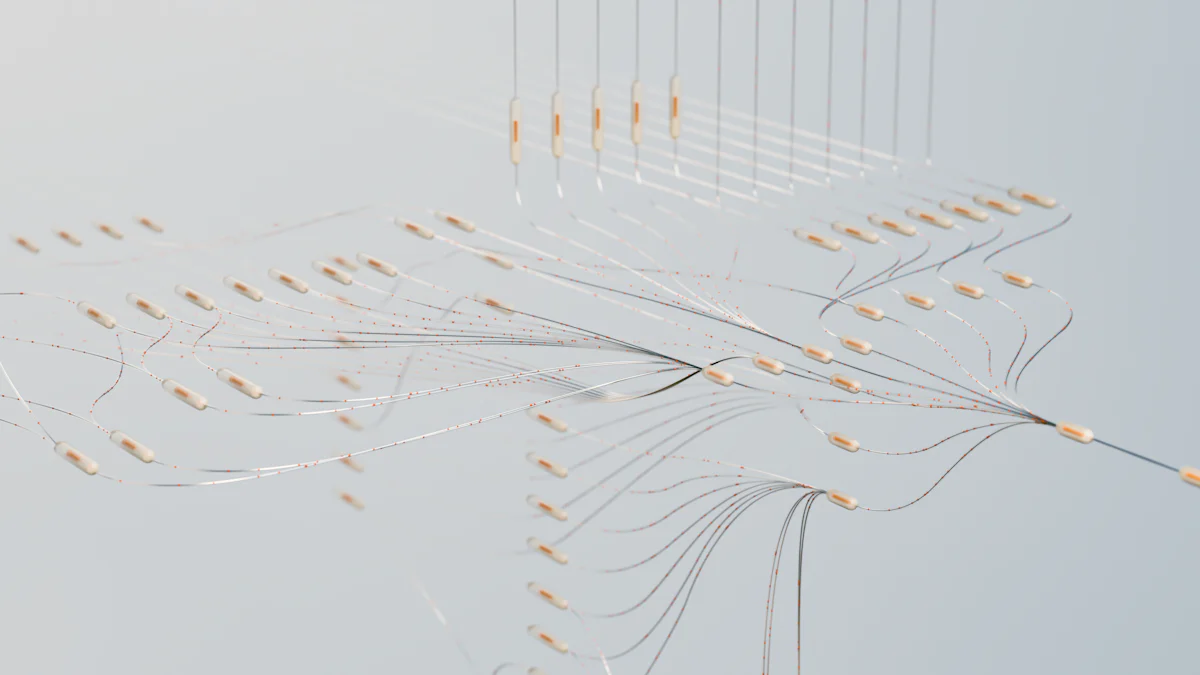

Vector embeddings, a fundamental concept in machine learning and data representation, turn complex data such as words, images, or products into numerical vectors. Python is a popular platform for implementing these techniques. But what exactly are vector embeddings? They are numerical arrays that represent the characteristics of an object or entity, so that similar objects end up with similar vectors. This makes similarity calculations and clustering possible, and it underpins tasks such as sentiment analysis, language translation, and recommendation systems.
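To make "similarity calculations" concrete, here is a minimal sketch of cosine similarity, a common way to compare two embeddings. The three-dimensional vectors below are invented for illustration; real embeddings come from a trained model and usually have many more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, made up for this example only.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])
print(f"cat vs dog: {cat_dog:.3f}")
print(f"cat vs car: {cat_car:.3f}")
```

With these toy values, "cat" scores closer to "dog" than to "car", which is the kind of relationship a good embedding is expected to capture.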

# The Importance of Vector Embeddings in Machine Learning

In machine learning, vector embeddings simplify the handling of intricate datasets by converting them into dense, fixed-length vectors, typically with far fewer dimensions than sparse representations such as one-hot encodings. This makes the data easier to analyze and compare. Algorithms like Word2Vec, GloVe, and FastText learn such vectors from text, so that relationships between words are reflected in the geometry of the vector space.

# Getting Started with Vector Embeddings in Python

Now that we understand the significance of vector embeddings in machine learning, let's look at how to start using them in Python.

# Setting Up Your Python Environment

# Installing Necessary Libraries

To begin working with vector embeddings in Python, install a library such as Gensim, spaCy, or TensorFlow. Gensim provides implementations of Word2Vec and FastText and can load pretrained vectors such as GloVe. spaCy ships pretrained word vectors with some of its language pipelines, and TensorFlow lets you train embedding layers as part of a neural network.
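Before going further, it can help to check which of these libraries are already available. The sketch below uses only the standard library and does not import the packages themselves; the helper name `installed_libraries` is made up for this example.

```python
import importlib.util

# Import names of the libraries mentioned above. Their pip package names
# are the same: "gensim", "spacy", and "tensorflow".
LIBRARIES = ["gensim", "spacy", "tensorflow"]

def installed_libraries(names):
    """Map each import name to True if Python can locate it, without importing it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}

status = installed_libraries(LIBRARIES)
for name, found in status.items():
    hint = "installed" if found else f"missing, try: pip install {name}"
    print(f"{name}: {hint}")
```

You rarely need all three. Installing only the library that matches your chosen model keeps the environment small.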

# Preparing Your Dataset

Before creating your first vector embedding, ensure your dataset is well-prepared. Cleaning and preprocessing the data are crucial steps to eliminate noise and irrelevant information that could impact the quality of your embeddings. Remember, the quality of your dataset directly influences the effectiveness of your embeddings.
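As a rough illustration of cleaning and preprocessing, the sketch below lowercases text, strips URLs and non-letter characters, tokenizes on whitespace, and removes a few stop words. The stop-word list and the `preprocess` helper are assumptions made for this example. Real projects often rely on a library tokenizer and a fuller stop-word list, and the right steps depend on your data.

```python
import re

# A tiny illustrative stop-word list, not a complete one.
STOP_WORDS = {"a", "an", "the", "is", "are", "and", "or", "of", "to", "in"}

def preprocess(text, stop_words=STOP_WORDS):
    """Lowercase, drop URLs and non-letters, tokenize, and remove stop words."""
    text = text.lower()
    text = re.sub(r"https?://\S+", " ", text)  # strip URLs
    text = re.sub(r"[^a-z\s]", " ", text)      # keep only letters and whitespace
    return [token for token in text.split() if token not in stop_words]

raw_docs = [
    "The cat is sleeping in the SUN!!",
    "Read more at https://example.com dogs and cats are friends.",
]
sentences = [preprocess(doc) for doc in raw_docs]
print(sentences)
```

The result is a list of token lists, which is the input shape that many embedding trainers expect.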

# Creating Your First Vector Embedding

# Choosing the Right Model

When selecting an embedding model, consider the nature of your data and the specific task you aim to accomplish. Models such as Word2Vec, GloVe, and FastText offer different advantages based on their training methodologies and capabilities. For instance, Word2Vec learns from local context windows and captures semantic relationships between words, GloVe is fit to global word co-occurrence statistics, and FastText builds word vectors from character n-grams, which lets it handle rare and out-of-vocabulary words.
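The difference between these models is easier to see in the raw material each one learns from. The sketch below is a simplified illustration and not the libraries' actual code: it builds the (center, context) pairs that a skip-gram Word2Vec model trains on, the corpus-wide pair counts that GloVe starts from (unweighted here, whereas GloVe down-weights distant pairs), and the boundary-marked character n-grams that FastText uses as subword units. All function names are made up for this example.

```python
from collections import Counter

def skipgram_pairs(tokens, window=2):
    """(center, context) pairs of the kind a skip-gram model trains on."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        pairs.extend((center, tokens[j]) for j in range(lo, hi) if j != i)
    return pairs

def cooccurrence_counts(sentences, window=2):
    """Corpus-wide pair counts, a simplified version of GloVe's input statistics."""
    counts = Counter()
    for tokens in sentences:
        counts.update(skipgram_pairs(tokens, window))
    return counts

def char_ngrams(word, n=3):
    """Character n-grams with '<' and '>' boundary markers, as in FastText."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]

corpus = [["cats", "chase", "mice"], ["dogs", "chase", "cats"]]
pairs = skipgram_pairs(corpus[0], window=1)
counts = cooccurrence_counts(corpus, window=1)

print(pairs)
print(counts[("cats", "chase")])
print(char_ngrams("cat"))
```

Because FastText composes a word from its n-grams, it can produce a vector for a word it never saw during training, which the other two models cannot do directly.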

# Training and Testing Your Embeddings

Once you've chosen a model, it's time to train and test your embeddings using your prepared dataset. Training involves feeding your data into the chosen model to learn the underlying patterns and relationships within the dataset. Testing ensures that the embeddings accurately represent the characteristics of your data and can be effectively used for tasks like sentiment analysis or text classification.
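Training is usually delegated to a library, so the sketch below covers only the testing half. It shows one simple intrinsic check, assumed here for illustration: for hand-written triplets of (anchor, similar word, dissimilar word), count how often the anchor is closer to the similar word. The vectors are hypothetical stand-ins for the output of a trained model, and `triplet_accuracy` is a name invented for this example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def triplet_accuracy(embeddings, triplets):
    """Fraction of (anchor, similar, dissimilar) triplets ranked correctly."""
    correct = 0
    for anchor, similar, dissimilar in triplets:
        pos = cosine_similarity(embeddings[anchor], embeddings[similar])
        neg = cosine_similarity(embeddings[anchor], embeddings[dissimilar])
        correct += pos > neg
    return correct / len(triplets)

# Hypothetical 2-D vectors standing in for a trained model's output.
embeddings = {
    "good": [0.9, 0.1],
    "great": [0.8, 0.2],
    "bad": [0.1, 0.9],
    "awful": [0.2, 0.8],
}

# Hand-written expectations about which words should be close together.
triplets = [
    ("good", "great", "bad"),
    ("bad", "awful", "good"),
    ("great", "good", "awful"),
]

accuracy = triplet_accuracy(embeddings, triplets)
print(f"triplet accuracy: {accuracy:.2f}")
```

A check like this complements, but does not replace, measuring performance on the downstream task itself, such as sentiment analysis or text classification.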

In this section, we have covered setting up your Python environment by installing necessary libraries and preparing your dataset. We also discussed choosing the right model for creating vector embeddings and highlighted the importance of training and testing these embeddings before applying them to real-world applications.

# Practical Applications of Vector Embeddings

After creating vector embeddings in Python, the next step is to explore their practical applications across various domains. Let's look at how they are used in semantic search, text analysis, and natural language processing.

# Semantic Search and Text Analysis

In the realm of semantic search, vector embeddings play a pivotal role in enhancing search engine results by understanding the contextual meaning behind queries. By leveraging semantic similarity calculations facilitated by embeddings, search engines can provide more accurate and relevant results to users. Additionally, in text analysis, vector embeddings enable the analysis of social media sentiments with precision. By capturing the underlying sentiment behind textual data, businesses can gain valuable insights into customer opinions and trends.
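A minimal sketch of semantic search follows. It assumes a simple baseline in which a text is embedded as the average of its word vectors, and documents are ranked by cosine similarity to the query. The word vectors are invented for illustration and the helper names are hypothetical; production systems typically use learned sentence embeddings and a vector index.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def embed_text(text, word_vectors):
    """Average the vectors of known words; unknown words are skipped."""
    vectors = [word_vectors[w] for w in text.lower().split() if w in word_vectors]
    if not vectors:
        raise ValueError(f"no known words in text: {text!r}")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def search(query, documents, word_vectors, top_k=2):
    """Return the top_k (score, document) pairs, most similar first."""
    query_vec = embed_text(query, word_vectors)
    scored = [
        (cosine_similarity(query_vec, embed_text(doc, word_vectors)), doc)
        for doc in documents
    ]
    return sorted(scored, reverse=True)[:top_k]

# Hypothetical 2-D word vectors, made up for this example.
word_vectors = {
    "puppy": [0.9, 0.1],
    "dog": [0.85, 0.15],
    "kitten": [0.7, 0.3],
    "truck": [0.15, 0.85],
}

documents = ["dog food", "truck repair", "kitten toys"]
results = search("puppy", documents, word_vectors)
for score, doc in results:
    print(f"{score:.3f}  {doc}")
```

Note that the query "puppy" shares no word with "dog food", yet that document ranks first. Keyword matching alone would miss it.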

# Enhancing Natural Language Processing Tasks

Vector embeddings are instrumental in advancing natural language processing (NLP) tasks, particularly in developing chatbots and virtual assistants. These intelligent systems rely on embeddings to comprehend user queries effectively and generate appropriate responses. By measuring semantic similarity using vector representations, chatbots can engage in meaningful conversations with users, enhancing user experience significantly. Furthermore, vector embeddings are integral to language translation services by facilitating accurate translations based on semantic relationships between words.

Case Studies:

Product Embeddings for E-commerce Sites: Recommendation systems on e-commerce platforms use product embeddings to predict user preferences based on semantic similarity.

Measuring Similarity in Recommendation Systems: Vector embeddings make similarity calculations straightforward, which enables personalized recommendations across various domains.
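The recommendation idea in these case studies can be sketched in a few lines. The example below assumes one simple approach: represent a user as the mean of the embeddings of the products they bought, then rank the remaining products by cosine similarity to that profile. The product vectors and the `recommend` helper are invented for illustration and are not taken from any particular platform.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def recommend(purchased, product_vectors, top_k=2):
    """Rank unpurchased products by similarity to the mean of purchased ones."""
    bought = [product_vectors[p] for p in purchased]
    dim = len(bought[0])
    profile = [sum(v[i] for v in bought) / len(bought) for i in range(dim)]
    candidates = [p for p in product_vectors if p not in purchased]
    ranked = sorted(
        candidates,
        key=lambda p: cosine_similarity(profile, product_vectors[p]),
        reverse=True,
    )
    return ranked[:top_k]

# Hypothetical 2-D product embeddings, made up for this example.
product_vectors = {
    "tent": [0.9, 0.1],
    "sleeping bag": [0.8, 0.2],
    "hiking boots": [0.85, 0.05],
    "camp stove": [0.6, 0.5],
    "blender": [0.1, 0.9],
    "toaster": [0.05, 0.95],
}

suggestions = recommend(["tent", "sleeping bag"], product_vectors)
print(suggestions)
```

With these toy vectors, a user who bought camping gear is shown other outdoor products ahead of kitchen appliances.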

# Wrapping Up

# Key Takeaways

As we conclude our exploration of vector embeddings in Python, it's essential to reflect on the power these techniques hold in transforming data representation and enhancing machine learning tasks.

The Power of Vector Embeddings

Vector embeddings serve as a cornerstone in simplifying complex datasets by converting them into numerical vectors. Recent work also highlights their effectiveness in addressing the high dimensionality and sparsity of biomedical graphs, and they are a valuable asset in domains like natural language processing, image recognition, and audio analysis. By transforming raw data into a format that machine learning algorithms can work with efficiently, vector embeddings pave the way for new applications and insights across diverse fields.

Next Steps in Your Python Journey

After mastering the fundamentals of vector embeddings, your Python journey can further expand by delving into advanced topics like graph embedding methods for analyzing large corpora of mental health research articles. These methods not only aid in identifying new findings but also track research progress and pinpoint potential areas for future exploration. Additionally, exploring online resources such as books and courses can deepen your understanding of Python and its integration with machine learning techniques.

# Further Resources and Learning

# Books and Online Courses

"Python Machine Learning" by Sebastian Raschka

"Deep Learning" by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

Coursera: Offers various courses on machine learning and deep learning.

# Joining Python and Machine Learning Communities

Engaging with communities like Kaggle, GitHub, or Stack Overflow provides opportunities to collaborate with experts, share insights, and stay updated on the latest trends in Python and machine learning. By actively participating in these communities, you can enhance your skills, seek guidance on challenging problems, and contribute to the collective knowledge pool.

In this final section, we've highlighted the transformative power of vector embeddings, outlined potential avenues for advancing your Python journey, and provided resources to continue your learning path effectively. Embrace the possibilities that vector embeddings offer and embark on a rewarding journey towards mastering Python's capabilities in machine learning.